A Windows Server Failover Cluster (WSFC) is a group of independent servers collaborating to enhance the availability and reliability of applications and services. If you are an IT admin or an aspirant, you should know how to configure it. So, in this post, we will discuss how to install and configure Failover Cluster in Windows Server.

A failover cluster is critical in a production environment. If you have configured WSFC and a node goes down for some reason, a backup node is ready to take up the load. For example, in a small environment containing a few nodes, if Node 1 goes down, failover clustering detects the failure and changes the state of Node 2 from passive to active.

If you want to install and configure the Failover Cluster in Windows Server, follow the steps below.

- Install Failover Cluster Feature

- Install File and Storage Service on the Storage Server

- Enable iSCSI Initiator

- Configure the Storage Server

- Connect nodes from the initiator back to the target

- Configure Failover Cluster

Let us talk about them in detail.

1] Install Failover Cluster Feature

First of all, we need to install the Failover Cluster feature on every single node attached to your domain controller. If you have a way to deploy this feature to all the connected nodes, we recommend you use it, but if you don’t have a really large network, installing manually will not take a lot of time. To do so, follow the steps mentioned below.

- Open the Server Manager.

- Now, click on Add roles and features.

- Click on Next, make sure to select Role-based or feature-based installation, and click on Next.

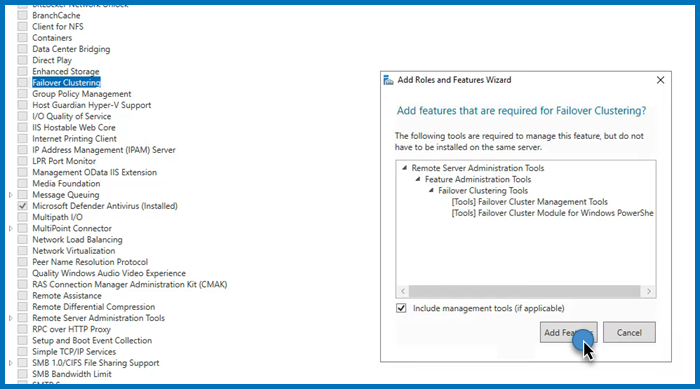

- Now, keep clicking on Next until you reach the Features tab, look for Failover Clustering, and tick it.

- A pop-up will appear asking you to click on Add features. Do that, and follow the on-screen instructions to complete the installation process.

As mentioned, you must install this feature on all the nodes you want to be part of the failover cluster environment.
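If you would rather script this step than click through the wizard on each node, the following is a minimal sketch. It assumes two hypothetical node names, NODE1 and NODE2, and an elevated PowerShell session under an account with administrative rights on both servers.

```powershell
# Hypothetical node names; replace them with the servers in your environment.
$nodes = 'NODE1', 'NODE2'

foreach ($node in $nodes) {
    # Install the Failover Clustering feature and its management tools remotely.
    Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools -ComputerName $node
}

# Confirm the install state on each node.
foreach ($node in $nodes) {
    Get-WindowsFeature -Name Failover-Clustering -ComputerName $node |
        Select-Object @{ n = 'Node'; e = { $node } }, Name, InstallState
}
```

A node may ask for a restart after the feature is added, so check the output before moving on.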

2] Install File and Storage Services on the Storage Server

Next, we need to configure the storage that both these servers will be using. That storage server may or may not be a member of the domain, as everything is IP-based. Follow the steps mentioned below to install the File and Storage services for them.

- Open Server Manager.

- Go to Add roles and features.

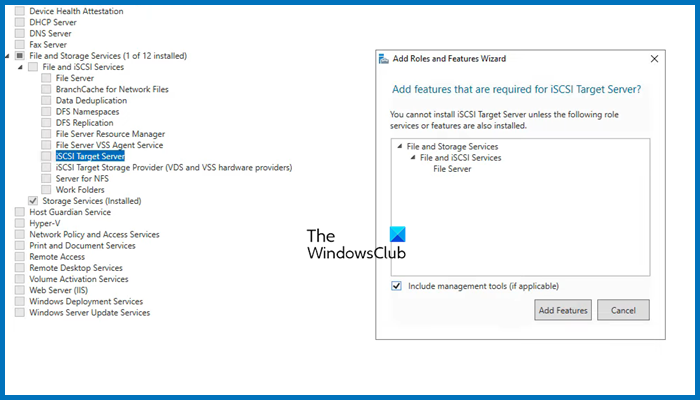

- Click on Next until you reach the Server Roles tab, expand File and Storage Services, look for iSCSI Target Server, tick it, and then install it.

Wait for it to complete the installation process. Since we will have only one storage server, you need to install it on a single computer.
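The same role service can probably be added from PowerShell as well. This is a short sketch, assuming you run it locally on the storage server in an elevated session.

```powershell
# Run on the storage server. FS-iSCSITarget-Server is the feature name
# for the iSCSI Target Server role service.
Install-WindowsFeature -Name FS-iSCSITarget-Server -IncludeManagementTools

# Check that the role service now reports as installed.
Get-WindowsFeature -Name FS-iSCSITarget-Server
```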

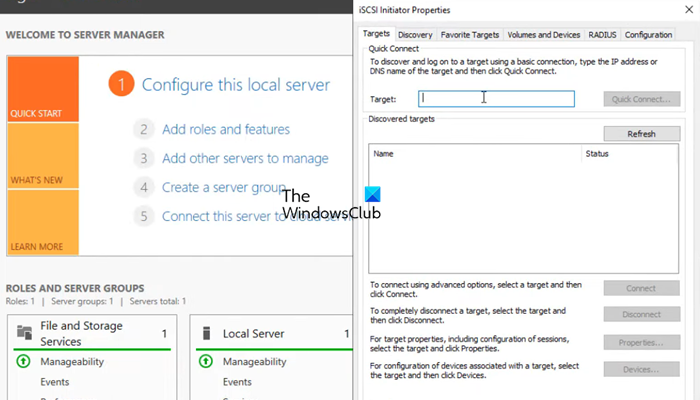

3] Enable iSCSI Initiator

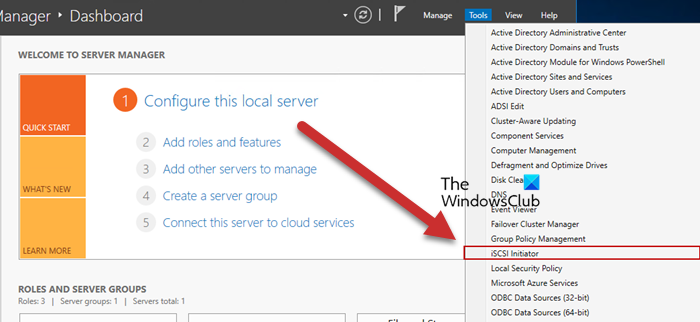

Now, we need to go back to the Failover Cluster nodes and enable the iSCSI Initiator. To do so, click on Tools > iSCSI Initiator in Server Manager on the node, and then click Yes when prompted to enable the feature. You have to do this on every node that will be part of the cluster.
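Clicking Yes in that prompt starts the Microsoft iSCSI Initiator service. A rough scripted equivalent, assuming an elevated session on each node, might look like this:

```powershell
# MSiSCSI is the service name of the Microsoft iSCSI Initiator service.
# Make it start automatically so the storage reconnects after a reboot.
Set-Service -Name MSiSCSI -StartupType Automatic
Start-Service -Name MSiSCSI

# Verify that the service is running.
Get-Service -Name MSiSCSI
```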


4] Configure the Storage Server

We enabled iSCSI Initiator on the node servers to make them accessible to the storage server. Now that we are done with that part, let’s add the nodes to the Storage Server. Follow the steps mentioned below to do so.

- Go to Server Manager > File and Storage Services.

- Click on iSCSI tab.

- Click on Tasks > New iSCSI Virtual disk.

- We can either select a hard drive or just a folder in the server, to do so, click on Type a custom path, then click on Browse, and select either the volume, an existing folder, or create a new folder.

- Click on Next, enter the virtual disk name, and click on Next.

- Select the size of the disk; Fixed is quicker but Dynamic gives you the flexibility of increasing the size when needed.

- Click on Next, give the target a name, and click on Next.

- When you reach the Access Servers tab, click on Add.

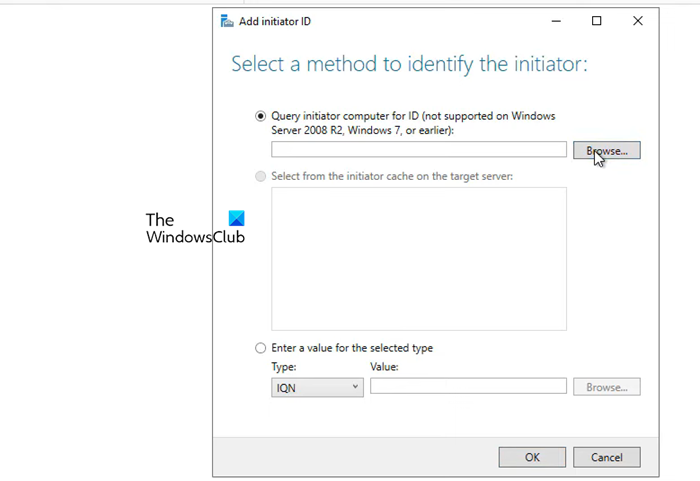

- Make sure that the Query initiator computer for ID option is checked and click on Browse.

- Enter the name of the node computer and then click on Check names. Add all the nodes of your environment similarly.

- Click on Next.

- Enable CHAP if you want to add authentication between devices.

- Finally, create the connection.

This will create a storage environment consisting of the two nodes.
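For reference, the wizard steps above can be approximated with the iSCSI Target cmdlets. Treat this as a sketch under stated assumptions: the disk path, the 20 GB size, the target name, and the node IQNs are all hypothetical and need to match your environment.

```powershell
# Assumed values for illustration only.
$vhdPath    = 'C:\iSCSIVirtualDisks\ClusterDisk1.vhdx'
$targetName = 'ClusterTarget'
$initiators = 'IQN:iqn.1991-05.com.microsoft:node1.contoso.local',
              'IQN:iqn.1991-05.com.microsoft:node2.contoso.local'

# Make sure the folder for the virtual disk exists.
New-Item -ItemType Directory -Path (Split-Path $vhdPath) -Force | Out-Null

# Create the virtual disk that will back the shared volume.
New-IscsiVirtualDisk -Path $vhdPath -SizeBytes 20GB

# Create the target and list the initiators (the cluster nodes) allowed to connect.
New-IscsiServerTarget -TargetName $targetName -InitiatorIds $initiators

# Map the virtual disk to the target so the nodes can see it.
Add-IscsiVirtualDiskTargetMapping -TargetName $targetName -Path $vhdPath
```

You can read a node's actual IQN from the Configuration tab of the iSCSI Initiator on that node.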

5] Connect nodes from the initiator back to the target

After configuring the storage environment, we can set the target for the initiator. Follow the steps mentioned below to do the same.

- Open Server Manager on the node computer.

- Go to Tools > iSCSI Initiator.

- In the Target field, enter the IP address of the iSCSI Target.

- Click on Quick Connect > OK.

You can go to the Discovery tab to see the connection. Then go to Volumes and Devices and check whether the volume appears under the Volume list. If it’s not there, click on Auto Configure.
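If you have several nodes, connecting each one by hand gets tedious. The sketch below shows one way to do the same from PowerShell on a node; the IP address 192.168.1.10 is a placeholder for your iSCSI target.

```powershell
# Hypothetical address of the storage server; replace it with your own.
$targetIp = '192.168.1.10'

# Register the target portal so the initiator can discover targets on it.
New-IscsiTargetPortal -TargetPortalAddress $targetIp

# Connect to every discovered target and keep the connection across reboots.
Get-IscsiTarget | Connect-IscsiTarget -IsPersistent $true

# Check the session and list the disks that arrived over iSCSI.
Get-IscsiSession | Select-Object TargetNodeAddress, IsConnected, IsPersistent
Get-Disk | Where-Object BusType -eq 'iSCSI'
```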

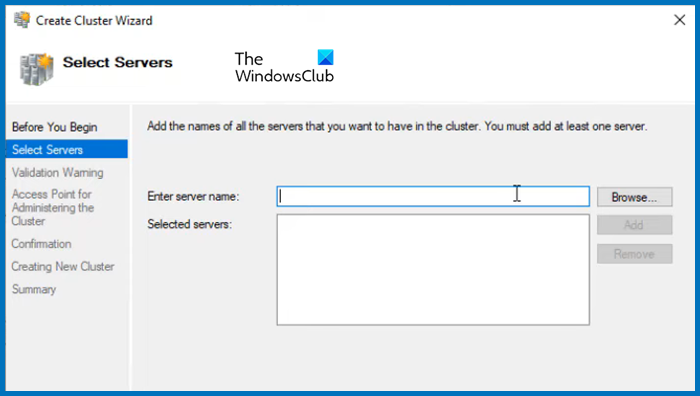

6] Configure Failover Cluster

Now that we have storage ready, we can just create a cluster and add the two nodes. Follow the steps mentioned below to do the same.

- Open the Server Manager.

- Click on Tools > Failover Cluster Manager.

- This will launch the Failover Cluster Manager utility. Right-click on Failover Cluster Manager in the left pane, and click on Create Cluster.

- In the Create cluster wizard, click on Next.

- In the Select Server tab, enter the name of the server and click on Add. You can also browse if you want.

- Run the validation tests. Once they are done, click on Next.

- Give the cluster a name and an IP address not currently used. Click Next.

- Finally, click on Next and wait for the cluster creation to complete.

Then, in the upper left-hand corner, you will see that the cluster has been created. To access it, just click on it. Now, you can add roles and storage and make all the required configurations to the cluster.

That’s it!


How to install Failover Cluster in Windows?

You need to use the Server Manager to install the failover cluster feature in Windows Server. In the Server Manager, go to Add roles and features, and then install the Failover Cluster from the Features tab. For more details, check out the guide above.


How to configure Failover Cluster in Windows Server?

To configure a failover cluster in Windows Server, you first need to install the Failover Clustering feature, configure storage, create a cluster, and then add the servers. To know more, check out the guide above.

Windows Server Failover Clustering (WSFC) — a feature of Microsoft Windows Server operating system for fault tolerance and high availability (HA) of applications and services — enables several computers to host a service, and if one has a fault, the remaining computers automatically take over the hosting of the service. It is included with Windows Server 2022, Windows Server 2019, Windows Server 2016 and Azure Stack HCI.

In WSFC, each individual server is called a node. The nodes can be physical computers or virtual machines, and are connected through physical connections and through software. Two or more nodes are combined to form a cluster, which hosts the service. The cluster and nodes are constantly monitored for faults. If a fault is detected, the nodes with issues are removed from the cluster and the services may be restarted or moved to another node.

Capabilities of Windows Server Failover Clustering (WSFC)

Windows Server Failover Cluster performs several functions, including:

- Unified cluster management. The configuration of the cluster and service is stored on each node within the cluster. Changes to the configuration of the service or cluster are automatically sent to each node. This allows for a single update to change the configuration on all participating nodes.

- Resource management. Each node in the cluster may have access to resources such as networking and storage. These resources can be shared by the hosted application to increase the cluster performance beyond what a single node can accomplish. The application can be configured to have startup dependencies on these resources. The nodes can work together to ensure resource consistency.

- Health monitoring. The health of each node and the overall cluster is monitored. Each node uses heartbeat and service notifications to determine health. The cluster health is voted on by the quorum of participating nodes.

- Automatic and manual failover. Resources have a primary node and one or more secondary nodes. If the primary node fails a health check or is manually triggered, ownership and use of the resource is transferred to the secondary node. Nodes and the hosted application are notified of the failover. This provides fault tolerance and allows rolling updates not to affect overall service health.
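To make the health monitoring and manual failover capabilities more concrete, here is a small sketch using the FailoverClusters PowerShell module. The role name FileServerRole and the node name NODE2 are hypothetical.

```powershell
Import-Module FailoverClusters

# Health monitoring: show the state of every node (Up, Down, Paused).
Get-ClusterNode | Select-Object Name, State

# Show each clustered role, the node that currently owns it, and its state.
Get-ClusterGroup | Select-Object Name, OwnerNode, State

# Manual failover: move a role to another node.
# 'FileServerRole' and 'NODE2' are placeholders for your own role and node.
Move-ClusterGroup -Name 'FileServerRole' -Node 'NODE2'
```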

Common applications that use WSFC

A number of different applications can use WSFC, including:

- Database Server

- Windows Distributed File System (DFS) Namespace Server

- File Server

- Hyper-V

- Microsoft Exchange Server

- Microsoft SQL Server

- Namespace Server

- Windows Internet Name Service (WINS) Server

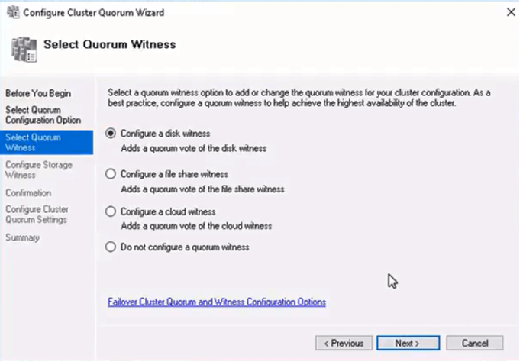

WSFC voting, quorum and witnesses

Every cluster network must account for the possibility of individual nodes losing communication to the cluster but still being able to serve requests or access resources. If this were to happen, the service could become corrupt and serve bad responses or cause data stores to become out of sync. This is known as split-brain condition.

WSFC uses a voting system with quorum to determine failover and to prevent a split-brain condition. In the cluster, the quorum is defined as a majority, that is, more than half of the total votes. After a fault, the nodes vote to stay online. If fewer than the quorum vote yes, those nodes are removed. For example, a cluster of five nodes has a fault, causing three to stay in communication in one segment and two in the other. The group of three will have the quorum and stay online, while the other two will not have a quorum and will go offline.
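The arithmetic behind the five-node example can be sketched in a few lines. The two helper functions below are hypothetical and are not part of any Windows module; they only model a simple majority vote, where a partition needs more than half of the total votes to stay online.

```powershell
# Hypothetical helper: the smallest number of votes that forms a majority.
function Get-QuorumThreshold {
    param([Parameter(Mandatory)][int]$TotalVotes)
    [int][math]::Floor($TotalVotes / 2) + 1
}

# Hypothetical helper: does a partition with this many votes keep quorum?
function Test-HasQuorum {
    param(
        [Parameter(Mandatory)][int]$VotesPresent,
        [Parameter(Mandatory)][int]$TotalVotes
    )
    $VotesPresent -ge (Get-QuorumThreshold -TotalVotes $TotalVotes)
}

# Five-node cluster split into segments of three and two nodes.
Test-HasQuorum -VotesPresent 3 -TotalVotes 5   # True: this segment stays online
Test-HasQuorum -VotesPresent 2 -TotalVotes 5   # False: this segment goes offline

# Four-node cluster split evenly: neither half has a majority.
Test-HasQuorum -VotesPresent 2 -TotalVotes 4   # False
```

The last case shows why an even number of votes is a problem: an even split leaves no side with a majority, which is the gap a witness vote is meant to close.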

In small clusters, an extra witness vote should be added. The witness is an extra vote that is added as a tiebreaker in clusters with even numbers of nodes. Without a witness, if half of the nodes go offline at one time the whole service is stopped. A witness is required in clusters with only two nodes and recommended for three and four node clusters. In clusters of five or more nodes, a witness does not provide benefits and is not needed. The witness information is stored in a witness.log file. It can be hosted as a File Share Witness, an Azure Cloud Witness or as a Disk Witness (aka custom quorum disk).
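As an illustration of the three witness types, the sketch below uses the Set-ClusterQuorum cmdlet. Only one option should be used at a time; the share path, disk name, and storage account values are placeholders, not values from this article.

```powershell
Import-Module FailoverClusters

# Option 1: File Share Witness on a hypothetical share.
Set-ClusterQuorum -FileShareWitness '\\FILESERV\ClusterWitness'

# Option 2: Disk Witness, using a small shared disk already added to the cluster.
# Set-ClusterQuorum -DiskWitness 'Cluster Disk 2'

# Option 3: Azure Cloud Witness (Windows Server 2016 and later).
# Set-ClusterQuorum -CloudWitness -AccountName '<storage-account>' -AccessKey '<access-key>'
```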

A Dynamic Quorum allows the number of votes to constitute a quorum to adjust as faults occur. This way, as long as more than half of the nodes don’t go offline at one time, the cluster will be able to continuously lose nodes without it going offline. This allows for a single node to run the services as the "last man standing."

Windows Server Failover Clustering and Microsoft SQL Server Always On

SQL Server Always On is a high-availability and disaster recovery product for Microsoft SQL Server that takes advantage of WSFC. SQL Server Always On has two configurations that can be used separately or in tandem. A Failover Cluster Instance (FCI) is a SQL Server instance that is installed across several nodes in a WSFC. An Availability Group (AG) is a set of one or more databases that fail over together to replicated copies. Both register components with WSFC as cluster resources.

Windows Server Failover Clustering Setup Steps

See Microsoft for full documentation on how to deploy a failover cluster using WSFC.

- Verify prerequisites:

  - All nodes on same Windows Server version

  - All nodes using supported hardware

  - All nodes are members of the same Active Directory domain

- Install the Failover Clustering feature using Windows Server Manager add Roles and Features

- Validate the failover cluster configuration

- Create the failover cluster in server manager

- Create the cluster roles and services using Microsoft Failover Cluster Manager (MSFCM)


This was last updated in March 2022


Problem

I want to upgrade and migrate my SQL Server failover clusters to SQL Server 2022 running on Windows Server 2022. What is the process for building a Windows Server 2022 Failover Cluster step by step for SQL Server 2022?

Solution

Windows Server 2022, Microsoft’s latest version of its server operating system, has been branded as a “cloud ready” operating system. There are many features introduced in this version that make working with Microsoft Azure a seamless experience, specifically the Azure Extended Network feature. For decades, Windows Server Failover Clustering (WSFC) has been the platform of choice for providing high availability for SQL Server workloads – both for failover clustered instances and Availability Groups.

Listed below are two Windows Server 2022 failover clustering features that I feel are relevant to SQL Server. This is in addition to the features introduced in previous versions of Windows Server. And while there are many features added to Windows Server 2022 Failover Clustering, not all of them are designed with SQL Server in mind.

Clustering Affinity and AntiAffinity

Affinity is a failover clustering rule that establishes a relationship between two or more roles (or resource groups) to keep them together in the same node. AntiAffinity is the opposite, a rule that would keep two or more roles/resource groups in different nodes. While this mostly applies to virtual machines running on a WSFC, particularly VM guest clusters, this has been a long-awaited feature request for SQL Server failover clustered instances (FCI) that take advantage of the Distributed Transaction Coordinator (DTC). In the past, workarounds such as a SQL Server Agent job that moves the clustered DTC in the same node as the FCI were implemented to achieve this goal. Now, it’s a built-in feature.
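As a rough illustration, keeping a clustered DTC on the same node as the FCI might look like the sketch below. It assumes the cluster affinity cmdlets that ship with Windows Server 2022, and the rule and group names are hypothetical; check the actual role names with Get-ClusterGroup first.

```powershell
Import-Module FailoverClusters

# Create a rule that keeps its member groups on the same node.
# 'SqlWithDtc' is a hypothetical rule name.
New-ClusterAffinityRule -Name 'SqlWithDtc' -RuleType SameNode

# Add the SQL Server FCI role and the clustered DTC role to the rule.
# Both group names are placeholders for the roles in your cluster.
Add-ClusterGroupToAffinityRule -Groups 'SQL Server (MSSQLSERVER)', 'DTC' -Name 'SqlWithDtc'

# Enable the rule and review it.
Set-ClusterAffinityRule -Name 'SqlWithDtc' -Enabled 1
Get-ClusterAffinityRule -Name 'SqlWithDtc'
```

Using -RuleType DifferentNode instead would express the AntiAffinity case.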

AutoSites

This feature takes advantage of Active Directory sites and applies to multi-data center, stretched WSFCs. When configuring a WSFC, the setup process will check if an Active Directory site exists for the IP-subnet that the nodes belong to. If there is an existing Active Directory site, the WSFC will automatically create site fault domains and assign the nodes accordingly. If no Active Directory sites exist, the IP-subnets will be evaluated, and, similar to when Active Directory sites exist, site fault domains will be created based on the IP-subnet. While this is a great feature for local high availability and disaster recovery before SQL Server 2016, I no longer recommend a stretched WSFC for SQL Server. There are too many external dependencies that can impact local high availability.

In this series of tips, you will install a SQL Server 2022 failover clustered instance on a Windows Server 2022 failover cluster the traditional way – with Active Directory-joined servers and shared storage. Configuring TCP/IP and joining the servers to your Active Directory domain is beyond the scope of this tip. Consult your systems administrators on how to perform these tasks. It is assumed that the servers that you will add to the WSFC are already joined to an Active Directory domain and that the domain user account that you will use to perform the installation and configuration has local Administrative privileges on all the servers.

Similar to the previous tip, you need to provision your shared storage depending on your requirement. Talk to your storage administrator regarding storage allocation for your SQL Server failover clustered instances. This tip assumes that the underlying shared storage has already been physically attached to all the WSFC nodes and that the hardware meets the requirements defined in the Failover Clustering Hardware Requirements and Storage Options. Managing shared storage requires an understanding of your specific storage product, which is outside the scope of this tip. Consult your storage vendor for more information.

The environment used in this tip is configured with four (4) iSCSI shared storage volumes – SQL_DISK_R, SQL_DISK_S, and SQL_DISK_T allocated for the SQL Server databases and WITNESS for the witness disk.

NOTE: Don’t be alarmed if the WITNESS disk does not have a drive letter. It is not necessary. This frees up another drive letter for use with volumes dedicated to SQL Server databases. It also prevents you from messing around with it. I mean, you won’t be able to do anything with it if you cannot see it in Windows Explorer.

The goal here is to provide shared storage both for capacity and performance. Perform the necessary storage stress tests to make sure that you are getting the appropriate amount of IOPS as promised by your storage vendor. You can use the DiskSpd utility for this purpose.
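A DiskSpd run could look something like the sketch below. The workload shape (8 KB blocks, 30% writes, random I/O) is only an assumption for illustration and should be adjusted to resemble your own SQL Server workload. It also assumes diskspd.exe is in the current directory and that R: is one of the shared volumes.

```powershell
# -c10G  create a 10 GB test file       -d60  run for 60 seconds
# -r     random I/O                     -w30  30% writes, 70% reads
# -t4    4 threads                      -o8   8 outstanding I/Os per thread
# -b8K   8 KB block size                -Sh   disable software and hardware caching
# -L     capture latency statistics
.\diskspd.exe -c10G -d60 -r -w30 -t4 -o8 -b8K -Sh -L R:\diskspd-test.dat

# Remove the test file when you are done.
Remove-Item -Path R:\diskspd-test.dat
```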

Adding the Failover Clustering Feature

Before you can create a failover cluster, you must install the Failover Clustering feature on all servers that you want to join in the WSFC. The Failover Clustering feature is not enabled by default.

Add the Failover Clustering feature using the following steps.

Step 1

Open the Server Manager Dashboard and click the Add roles and features link. This will run the Add Roles and Features Wizard.

Step 2

Click through the different dialog boxes until you reach the Select features dialog box. In the Select features dialog box, select the Failover Clustering checkbox.

When prompted with the Add features that are required for Failover Clustering dialog box, click Add Features. Click Next.

Step 3

In the Confirm installation selections dialog box, click Install to confirm the selection and proceed to do the installation. You may need to reboot the server after adding this feature.

Alternatively, you can run the PowerShell command below using the Install-WindowsFeature PowerShell cmdlet to install the Failover Clustering feature.

Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools

NOTE: Perform these steps on all the servers you intend to join in your WSFC before proceeding to the next section.

Running the Failover Cluster Validation Wizard

Next, you need to run the Failover Cluster Validation Wizard from the Failover Cluster Management console. You can launch the tool from the Server Manager dashboard, under Tools, and select Failover Cluster Manager.

NOTE: These steps can be performed on any servers that will serve as nodes in your WSFC.

Step 1

In the Failover Cluster Management console, under the Management section, click the Validate Configuration link. This will run the Validate a Configuration Wizard.

Step 2

In the Select Servers or a Cluster dialog box, enter the hostnames of the servers that you want to add as nodes in your WSFC. Click Next.

Step 3

In the Testing Options dialog box, accept the default option, Run all tests (recommended), and click Next. This will run all the necessary tests to validate whether the nodes are OK for the WSFC.

Step 4

In the Confirmation dialog box, click Next. This will run all the necessary validation tests.

Step 5

In the Summary dialog box, verify that all the selected checks return successful results. Click the View Report button to open the Failover Cluster Validation Report.

A note on the results: The icons in the Summary dialog box can be confusing. In the past, the Cluster Validation Wizard may report Warning messages pertaining to network and disk configuration issues, missing security updates, incompatible drivers, etc. The general recommendation has always been to resolve all errors and issues that the Cluster Validation Wizard reports before proceeding with the next steps. And it still is.

With Windows Server 2016 and later, the Cluster Validation Wizard checks for Storage Spaces Direct. Despite choosing the Run all tests (recommended) option, the Cluster Validation Wizard will exclude those checks.

This is why you will get a Warning message in the cluster validation report despite having all selected default checks return successful results. Having both the Warning (yellow triangle with an exclamation mark) and Successful (green check mark) icons in the same result is confusing indeed. It is what it is.

Another issue that you might encounter involves the warnings regarding signed drivers and software update levels.

You can resolve the warning regarding signed drivers for the Microsoft Remote Display Adapter using the latest Windows Server 2022 installation media or downloading the latest updates from the Windows Update Center. Using the Windows Update Center to download the latest updates also resolves the warning on the software update levels. This assumes that your servers can access the internet. Work with your network administrators on enabling internet access to your servers.

Step 6

To create the WSFC using the servers you’ve just validated, select the Create the cluster now using the validated nodes… checkbox and click Finish.

Alternatively, run the PowerShell command below using the Test-Cluster PowerShell cmdlet to run Failover Cluster Validation.

Test-Cluster -Node TDPRD081, TDPRD082

Creating the Windows Server 2022 Failover Cluster (WSFC)

After validating the servers, create the WSFC using the Failover Cluster Manager console. You can launch the tool from the Server Manager dashboard, under Tools, and select Failover Cluster Manager. Alternatively, you can run the Create Cluster Wizard immediately after running the Failover Cluster Validation Wizard. Be sure to check the Create the cluster now using the validated nodes… checkbox.

NOTE: Perform these steps on any servers that will serve as nodes in your WSFC.

Step 1

Within the Failover Cluster Manager console, under the Management section, click the Create Cluster… link. This will run the Create Cluster Wizard.

Step 2

In the Select Servers dialog box, enter the hostnames of the servers that you want to add as nodes of your WSFC. Click Next.

Step 3

In the Access Point for Administering the Cluster dialog box, enter the virtual hostname and IP address you will use to administer the WSFC. Click Next. Note that because the servers are within the same network subnet, only one virtual IP address is needed. This is a typical configuration for local high availability.

Step 4

In the Confirmation dialog box, click Next. This will configure Failover Clustering on both servers that will act as nodes in your WSFC, add the configured shared storage, and add Active Directory and DNS entries for the WSFC virtual server name.

A word of caution before proceeding: Before clicking Next, be sure to coordinate with your Active Directory domain administrators on the appropriate permissions you need to create the computer name object in Active Directory. It will save you a lot of time and headaches troubleshooting if you cannot create a WSFC. Local Administrator permission on the servers that you will use as nodes in your WSFC is not enough. Your Active Directory domain account needs the following permissions in the Computers Organizational Unit. By default, this is where the computer name object that represents the virtual hostname for your WSFC will be created.

- Create Computer objects

- Read All Properties

For additional information, refer to Configuring cluster accounts in Active Directory.

In a more restrictive environment where your Active Directory domain administrators are not allowed to grant you those permissions, you can request them to pre-stage the computer name object in Active Directory. Provide the Steps for prestaging the cluster name account documentation to your Active Directory domain administrators.

Step 5

In the Summary dialog box, verify that the report returns successful results. Click Finish.

Step 6

Verify that the quorum configuration is using Node and Disk Majority – Witness: Cluster Disk n, using the appropriate drive that you configured as the witness disk.
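The same check can be done from PowerShell. This is a minimal sketch, assuming you run it on one of the cluster nodes.

```powershell
Import-Module FailoverClusters

# Show the current quorum configuration, including the witness resource in use.
Get-ClusterQuorum | Format-List *
```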

Alternatively, you can run the PowerShell command below using the New-Cluster PowerShell cmdlet to create a new WSFC.

New-Cluster -Name TDPRD080 -Node TDPRD081, TDPRD082 -StaticAddress 172.16.0.80

Renaming Shared Storage Resources

As a best practice, you should rename your shared storage resources before installing SQL Server 2022. This makes it easy to identify what the disks are used for – data, log, backups, etc. – during the installation and later when troubleshooting availability issues. And while you may have renamed the disks using the Disk Management console, you still have to rename them from the point-of-view of the WSFC. The default names of the shared storage will be Cluster Disk n, where n is the number assigned to the disks.

Step 1

Within the Failover Cluster Manager console, under the Storage navigation option, select Disks. This will display all of the shared storage resources added to the WSFC.

Step 2

Right-click one of the shared disks and select Properties. This will open the Properties page for that specific disk.

Step 3

In the Properties page, on the General tab, type the appropriate name for the shared disk in the Name textbox. Click OK.

Do this on all of the shared storage resources available on your WSFC. Make sure the names of the shared disks in the WSFC reflect those you assigned using the Disk Management console.

PowerShell Alternative

Alternatively, you can use the PowerShell script below to identify the clustered disks and their corresponding disk properties. The DiskGuid property of the cluster disk is used to identify the Path property of the physical disk. The Path property of the physical disk is used to identify the DiskPath and DriveLetter properties of the logical partition. The DriveLetter property of the logical partition is used to identify the FileSystemLabel property of the disk volume.

ForEach ($a in (Get-ClusterResource | Where {$_.ResourceType -eq "Physical Disk"} | Get-ClusterParameter -Name DiskGuid))

{

$ClusterDiskGuid=$a.Value.ToString()

$Disk=Get-Disk | where {$_.Path -like "*$ClusterDiskGuid"} | Select DiskNumber, Path

$Partition=Get-Partition | where {$_.DiskPath -like $Disk.Path} | Select DriveLetter, DiskPath

$Volume=Get-Volume | where {$_.DriveLetter -eq $Partition.DriveLetter} | Select FileSystemLabel

"Cluster Disk Name: " + $a.ClusterObject + " , Disk Number: " + $Disk.DiskNumber + " , Drive Letter: " + $Partition.DriveLetter + " , Volume Label: " + $Volume.FileSystemLabel

}

Once you’ve mapped the cluster disks with the corresponding physical disks, you can rename them accordingly using the sample PowerShell commands below, replacing the appropriate values. Note that the disk without the drive letter is the witness disk. Make sure that it is also renamed accordingly.

(Get-ClusterResource -Name "Cluster Disk 1").Name = "SQL_DISK_T"

(Get-ClusterResource -Name "Cluster Disk 2").Name = "WITNESS"

(Get-ClusterResource -Name "Cluster Disk 3").Name = "SQL_DISK_R"

(Get-ClusterResource -Name "Cluster Disk 4").Name = "SQL_DISK_S"

Renaming Cluster Network Resources

Similarly, you should rename your cluster network resources before installing SQL Server 2022. And while you may have renamed the network adapters using the Network Connections management console, you still have to rename them from the point-of-view of the WSFC. The default names of the cluster network resources will be Cluster Network n, where n is the number assigned to the cluster network adapter.

Step 1

Within the Failover Cluster Manager console, select the Networks navigation option. This will display all the cluster network resources added to the WSFC.

Step 2

Right-click one of the cluster network adapters and select Properties. This will open the Properties page for that specific cluster network resource.

Step 3

In the Properties page, type the appropriate name for the cluster network resource in the Name textbox. Click OK.

NOTE: The WSFC will automatically detect whether client applications can connect through the specific cluster network resource. This is determined by whether a network adapter has a default gateway and can be identified via network discovery. Thus, it is important to get your network administrators involved in properly assigning the IP address, the subnet mask, and the default gateway values of all the network adapters used on the WSFC nodes before creating the WSFC. An example of this is the network adapter configured for inter-node communication.

Other network adapters also need to be appropriately configured. The network adapter used for iSCSI in the example cluster has the Do not allow cluster network communication on this network option selected.
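That setting corresponds to the Role property of the cluster network. As a sketch, and assuming the iSCSI network is still listed under the default name Cluster Network 3, it could be set from PowerShell like this:

```powershell
Import-Module FailoverClusters

# List the cluster networks with their current role and metric.
Get-ClusterNetwork | Select-Object Name, Role, Metric

# Role values: 0 = None (no cluster communication),
#              1 = Cluster (inter-node traffic only),
#              3 = ClusterAndClient (inter-node and client traffic).
# 'Cluster Network 3' is an assumed name; use the one shown in your own output.
(Get-ClusterNetwork -Name 'Cluster Network 3').Role = 0
```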

Update Cluster Network Resources

Do this on all the cluster network resources available on your WSFC.

All available network adapters will be used for inter-node communication, including the network adapter you configure for production network traffic. How the WSFC prioritizes which network adapter is used for private/heartbeat communication traffic is determined by using the cluster network adapter’s Metric property value. You can identify the cluster network adapter’s Metric property value by running the PowerShell command below.

Get-ClusterNetwork | Sort Metric

The cluster network adapter with the lowest Metric property value will be used for private/heartbeat communication (and cluster shared volume if configured). In the example provided, the Heartbeat cluster network adapter will be used for inter-node communication. And since the LAN cluster network adapter has a Role property value of ClusterAndClient, the WSFC will use it if the Heartbeat cluster network adapter becomes unavailable. This is described in more detail in the Configuring Network Prioritization on a Failover Cluster blog post from Microsoft.

PowerShell Alternative

Alternatively, you can use the sample PowerShell script below to identify the clustered network resources and rename them accordingly.

#Display all cluster network resources

Get-ClusterNetworkInterface

#Rename cluster network resources accordingly based on the results of Get-ClusterNetworkInterface

(Get-ClusterNetwork -Name "Cluster Network 1").Name = "LAN"

(Get-ClusterNetwork -Name "Cluster Network 2").Name = "Heartbeat"

(Get-ClusterNetwork -Name "Cluster Network 3").Name = "iSCSI1"

(Get-ClusterNetwork -Name "Cluster Network 4").Name = "iSCSI2"

Congratulations! You now have a working Windows Server 2022 failover cluster. Proceed to validate whether your WSFC is working or not. A simple test to do would be a continuous PING test on the virtual hostname or IP address that you have assigned to your WSFC. Reboot one of the nodes and see how your PING test responds. At this point, you are now ready to install SQL Server 2022.
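If you prefer to run the PING test from PowerShell, a simple sketch is shown below. It reuses the TDPRD080 cluster name from the New-Cluster example; the five-minute duration is an arbitrary choice.

```powershell
# Ping the cluster name once per second for about five minutes
# and print a timestamped result for each attempt.
$clusterName = 'TDPRD080'

for ($i = 0; $i -lt 300; $i++) {
    $reachable = Test-Connection -ComputerName $clusterName -Count 1 -Quiet
    '{0:HH:mm:ss}  reachable={1}' -f (Get-Date), $reachable
    Start-Sleep -Seconds 1
}
```

Start the loop, reboot the node that currently owns the cluster name, and watch how long the reachable=False gap lasts.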

Summary

In this tip, you’ve:

- Seen some of the new Windows Server 2022 failover clustering features that are relevant to SQL Server workloads,

- Had an idea of how to provision shared storage for your WSFC,

- Added the Failover Clustering feature on all the servers that you intend to join in a WSFC,

- Ran the Failover Cluster Validation Wizard and resolved potential issues before creating the failover cluster,

- Seen how the validation checks for Storage Spaces Direct can cause a Warning message when running the Failover Cluster Validation Wizard,

- Created the WSFC,

- Renamed the shared storage resources,

- Renamed the cluster network resources, and

- Viewed network prioritization for cluster network adapters.

In the next tip in this series, you will go through the process of installing a SQL Server 2022 failover clustered instance on your WSFC.

Next Steps

- Review the previous tips on Install SQL Server 2008 on a Windows Server 2008 Cluster Part 1, Part 2, Part 3, and Part 4 to see the difference in the setup experience between a SQL Server 2008 FCI on Windows Server 2008 and a SQL Server 2022 FCI on Windows Server 2022.

- Read more on the following topics:

- Installing, Configuring and Managing Windows Server Failover Cluster using PowerShell Part 1

- Validating a Windows Cluster Prior to Installing SQL Server 2014

- New features of Windows Server 2022 Failover Clustering

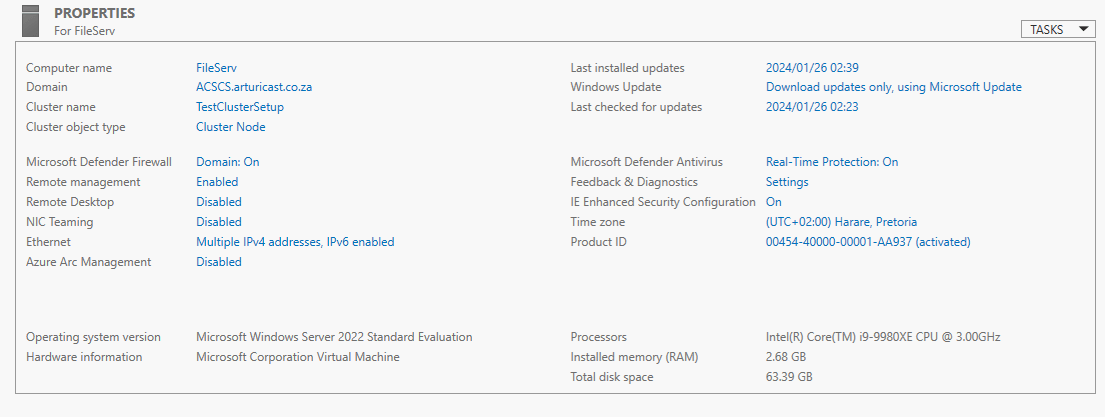

Here is the FileServ.

And the Local Server Properties for FileServ

Now that we have a fully witnessed WSFC set up, any additional setup (e.g. FCI, HADR, BADR) is much simpler.

One last thing – Setting up Cluster-Aware Updating

Now that the WSFC has been set up, we need to consider carefully how to apply updates rather than patching nodes haphazardly. To this end, once the Failover Clustering Tools have been installed, Cluster-Aware Updating should be used.

Feature description

Cluster-Aware Updating is an automated feature that enables you to update servers in a failover cluster with little or no loss in availability during the update process. During an Updating Run, Cluster-Aware Updating transparently performs the following tasks:

- Puts each node of the cluster into node maintenance mode.

- Moves the clustered roles off the node.

- Installs the updates and any dependent updates.

- Performs a restart if necessary.

- Brings the node out of maintenance mode.

- Restores the clustered roles on the node.

- Moves to update the next node.

For many clustered roles in the cluster, the automatic update process triggers a planned failover. This can cause a transient service interruption for connected clients. However, in the case of continuously available workloads, such as Hyper-V with live migration or file server with SMB Transparent Failover, Cluster-Aware Updating can coordinate cluster updates with no impact to the service availability.
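
As a rough illustration of an Updating Run, the sketch below first previews the applicable updates and then starts a run. It assumes the Failover Clustering Tools (which include the ClusterAwareUpdating module) are installed on the computer running it; the cluster name and the retry and failure limits are hypothetical values chosen for the example.

```powershell
# Hypothetical cluster name; replace with your own.
$clusterName = 'WSFC-CLUSTER01'

# Preview the updates that would be applied to each node, without installing anything.
Invoke-CauScan -ClusterName $clusterName -CauPluginName 'Microsoft.WindowsUpdatePlugin'

# Start an Updating Run. The limits below are example values:
# stop the run at the first failed node, and retry each node up to twice.
$runParams = @{
    ClusterName           = $clusterName
    CauPluginName         = 'Microsoft.WindowsUpdatePlugin'
    MaxFailedNodes        = 0
    MaxRetriesPerNode     = 2
    RequireAllNodesOnline = $true
    Force                 = $true
}
Invoke-CauRun @runParams
```

Running the scan first gives a chance to review what would be installed before any node is drained.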

Practical applications

- CAU reduces service outages in clustered services, reduces the need for manual updating workarounds, and makes the end-to-end cluster updating process more reliable for the administrator. When the CAU feature is used in conjunction with continuously available cluster workloads, such as continuously available file servers (file server workload with SMB Transparent Failover) or Hyper-V, the cluster updates can be performed with zero impact to service availability for clients.

- CAU facilitates the adoption of consistent IT processes across the enterprise. Updating Run Profiles can be created for different classes of failover clusters and then managed centrally on a file share to ensure that CAU deployments throughout the IT organization apply updates consistently, even if the clusters are managed by different lines-of-business or administrators.

- CAU can schedule Updating Runs on regular daily, weekly, or monthly intervals to help coordinate cluster updates with other IT management processes.

- CAU provides an extensible architecture to update the cluster software inventory in a cluster-aware fashion. This can be used by publishers to coordinate the installation of software updates that are not published to Windows Update or Microsoft Update or that are not available from Microsoft, for example, updates for non-Microsoft device drivers.

- CAU self-updating mode enables a "cluster in a box" appliance (a set of clustered physical machines, typically packaged in one chassis) to update itself. Typically, such appliances are deployed in branch offices with minimal local IT support to manage the clusters. Self-updating mode offers great value in these deployment scenarios.

Important functionality

The following is a description of important Cluster-Aware Updating functionality:

- A user interface (UI), the Cluster-Aware Updating window, and a set of cmdlets that you can use to preview, apply, monitor, and report on the updates

- An end-to-end automation of the cluster-updating operation (an Updating Run), orchestrated by one or more Update Coordinator computers

- A default plug-in that integrates with the existing Windows Update Agent (WUA) and Windows Server Update Services (WSUS) infrastructure in Windows Server to apply important Microsoft updates

- A second plug-in that can be used to apply Microsoft hotfixes, and that can be customized to apply non-Microsoft updates

- Updating Run Profiles that you configure with settings for Updating Run options, such as the maximum number of times that the update will be retried per node. Updating Run Profiles enable you to rapidly reuse the same settings across Updating Runs and easily share the update settings with other failover clusters.

- An extensible architecture that supports new plug-in development to coordinate other node-updating tools across the cluster, such as custom software installers, BIOS updating tools, and network adapter or host bus adapter (HBA) updating tools.

Cluster-Aware Updating can coordinate the complete cluster updating operation in two modes:

- Self-updating mode: For this mode, the CAU clustered role is configured as a workload on the failover cluster that is to be updated, and an associated update schedule is defined. The cluster updates itself at scheduled times by using a default or custom Updating Run profile. During the Updating Run, the CAU Update Coordinator process starts on the node that currently owns the CAU clustered role, and the process sequentially performs updates on each cluster node. To update the current cluster node, the CAU clustered role fails over to another cluster node, and a new Update Coordinator process on that node assumes control of the Updating Run. In self-updating mode, CAU can update the failover cluster by using a fully automated, end-to-end updating process. An administrator can also trigger updates on-demand in this mode, or simply use the remote-updating approach if desired. In self-updating mode, an administrator can get summary information about an Updating Run in progress by connecting to the cluster and running the Get-CauRun Windows PowerShell cmdlet.

- Remote-updating mode: For this mode, a remote computer, which is called an Update Coordinator, is configured with the CAU tools. The Update Coordinator is not a member of the cluster that is updated during the Updating Run. From the remote computer, the administrator triggers an on-demand Updating Run by using a default or custom Updating Run profile. Remote-updating mode is useful for monitoring real-time progress during the Updating Run, and for clusters that are running on Server Core installations.
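
Self-updating mode might be configured roughly as follows. This is a sketch under stated assumptions: the cluster name is hypothetical, and the schedule (second and fourth Tuesday of each month) and retry count are example values only.

```powershell
# Hypothetical cluster name; replace with your own.
$clusterName = 'WSFC-CLUSTER01'

# Add the CAU clustered role with an example schedule:
# the second and fourth Tuesday of each month.
$roleParams = @{
    ClusterName         = $clusterName
    DaysOfWeek          = 'Tuesday'
    WeeksOfMonth        = 2, 4
    MaxRetriesPerNode   = 2
    EnableFirewallRules = $true
    Force               = $true
}
Add-CauClusterRole @roleParams

# Summary of an Updating Run that is currently in progress, if any.
Get-CauRun -ClusterName $clusterName

# Detailed report for the most recent Updating Run.
Get-CauReport -ClusterName $clusterName -Last -Detailed
```

In remote-updating mode no clustered role is added; the administrator starts the run on demand from the Update Coordinator with Invoke-CauRun.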

Configure the nodes for remote management

To use Cluster-Aware Updating, all nodes of the cluster must be configured for remote management. By default, the only task you must perform to configure the nodes for remote management is to enable a firewall rule to allow automatic restarts. The following table lists the complete remote management requirements, in case your environment diverges from the defaults. These requirements are in addition to the installation requirements for the Failover Clustering feature and the Failover Clustering Tools, and the general clustering requirements that are described in previous sections in this topic.

| Requirement | Default state | Self-updating mode | Remote-updating mode |
| --- | --- | --- | --- |
| Enable a firewall rule to allow automatic restarts | Disabled | Required on all cluster nodes if a firewall is in use | Required on all cluster nodes if a firewall is in use |
| Enable Windows Management Instrumentation | Enabled | Required on all cluster nodes | Required on all cluster nodes |
| Enable Windows PowerShell 3.0 or 4.0 and Windows PowerShell remoting | Enabled | Required on all cluster nodes | Required on all cluster nodes to run the following: the Save-CauDebugTrace cmdlet; PowerShell pre-update and post-update scripts during an Updating Run; tests of cluster updating readiness using the Cluster-Aware Updating window or the Test-CauSetup Windows PowerShell cmdlet |
| Install .NET Framework 4.6 or 4.5 | Enabled | Required on all cluster nodes | Required on all cluster nodes to run the following: the Save-CauDebugTrace cmdlet; PowerShell pre-update and post-update scripts during an Updating Run; tests of cluster updating readiness using the Cluster-Aware Updating window or the Test-CauSetup Windows PowerShell cmdlet |
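
The requirements in the table can be checked in one pass with the Test-CauSetup cmdlet mentioned above. A minimal sketch, assuming a hypothetical cluster name and a computer that has the Failover Clustering Tools installed:

```powershell
# Hypothetical cluster name; replace with your own.
$clusterName = 'WSFC-CLUSTER01'

# Run the CAU readiness checks against the cluster and its nodes.
# Review any rule that does not pass before starting an Updating Run.
Test-CauSetup -ClusterName $clusterName
```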

Enable a firewall rule to allow automatic restarts

If Windows Firewall or a non-Microsoft firewall is in use on the cluster nodes, automatic restarts after updates are applied (when an update requires a restart) depend on a firewall rule being enabled on each node that allows the following traffic:

- Protocol: TCP

- Direction: inbound

- Program: wininit.exe

- Ports: RPC Dynamic Ports

- Profile: Domain

If Windows Firewall is used on the cluster nodes, you can do this by enabling the Remote Shutdown Windows Firewall rule group on each cluster node. When you use the Cluster-Aware Updating window to apply updates and to configure self-updating options, the Remote Shutdown Windows Firewall rule group is automatically enabled on each cluster node.

Note

The Remote Shutdown Windows Firewall rule group cannot be enabled when it will conflict with Group Policy settings that are configured for Windows Firewall. The Remote Shutdown firewall rule group is also enabled by specifying the -EnableFirewallRules parameter when running the following CAU cmdlets: Add-CauClusterRole, Invoke-CauRun, and Set-CauClusterRole.

The following PowerShell example shows an additional method to enable automatic restarts on a cluster node.

Set-NetFirewallRule -Group "@firewallapi.dll,-36751" -Profile Domain -Enabled true
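
To confirm the result, the state of the same rule group can be read back. This sketch runs on the local node and reuses the group identifier from the command above.

```powershell
# Show the state of the Remote Shutdown rule group on the local node.
# Each rule should report Enabled = True for the Domain profile.
Get-NetFirewallRule -Group '@firewallapi.dll,-36751' |
    Select-Object -Property DisplayName, Enabled, Profile, Direction
```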

Enable Windows Management Instrumentation (WMI)

All cluster nodes must be configured for remote management using Windows Management Instrumentation (WMI). This is enabled by default.

To manually enable remote management, do the following:

- In the Services console, start the Windows Remote Management service and set the startup type to Automatic.

- Run the Set-WSManQuickConfig cmdlet, or run the following command from an elevated command prompt:

winrm quickconfig -q

To support WMI remoting, if Windows Firewall is in use on the cluster nodes, the inbound firewall rule for Windows Remote Management (HTTP-In) must be enabled on each node. By default, this rule is enabled.
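
One way to confirm that Windows Remote Management answers on every node is to probe each one with Test-WSMan. The sketch below assumes the FailoverClusters module is available on the computer running it and uses a hypothetical cluster name.

```powershell
# Assumes the FailoverClusters module is available on this computer.
Import-Module FailoverClusters

# Hypothetical cluster name; replace with your own.
$clusterName = 'WSFC-CLUSTER01'

# Probe the WinRM listener on each cluster node and report the outcome.
foreach ($node in Get-ClusterNode -Cluster $clusterName) {
    try {
        Test-WSMan -ComputerName $node.Name -ErrorAction Stop | Out-Null
        "$($node.Name): WinRM is responding"
    }
    catch {
        "$($node.Name): WinRM check failed - $($_.Exception.Message)"
    }
}
```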

Enable Windows PowerShell and Windows PowerShell remoting

To enable self-updating mode and certain CAU features in remote-updating mode, PowerShell must be installed and enabled to run remote commands on all cluster nodes. By default, PowerShell is installed and enabled for remoting. To enable PowerShell remoting, use one of the following methods:

- Run the Enable-PSRemoting cmdlet.

- Configure a domain-level Group Policy setting for Windows Remote Management (WinRM).

For more information about enabling PowerShell remoting, see About Remote Requirements.
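
A quick way to verify that remoting works end to end is to run a trivial command on every node. This is a sketch that assumes a hypothetical cluster name and an account with administrative rights on the nodes.

```powershell
# Hypothetical cluster name; replace with your own.
$nodes = (Get-ClusterNode -Cluster 'WSFC-CLUSTER01').Name

# Ask each node for its PowerShell version over a remote session.
# A node that is missing from the output is not accepting remote commands.
Invoke-Command -ComputerName $nodes -ScriptBlock {
    [pscustomobject]@{ PSVersion = $PSVersionTable.PSVersion.ToString() }
} | Select-Object -Property PSComputerName, PSVersion
```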

Install .NET Framework 4.6 or 4.5

To enable self-updating mode and certain CAU features in remote-updating mode, .NET Framework 4.6, or .NET Framework 4.5 (on Windows Server 2012 R2), must be installed on all cluster nodes. By default, .NET Framework is installed.

To install .NET Framework 4.6 (or 4.5) using PowerShell if it’s not already installed, use the following command:

Install-WindowsFeature -Name NET-Framework-45-Core
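
Before installing, the current state of the feature can be checked on the local node. A minimal sketch using the same feature name as the command above:

```powershell
# Report whether the .NET Framework 4.x core feature is already installed on this node.
Get-WindowsFeature -Name NET-Framework-45-Core |
    Select-Object -Property Name, DisplayName, InstallState
```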

Best practices recommendations for using Cluster-Aware Updating

We recommend that when you begin to use CAU to apply updates with the default Microsoft.WindowsUpdatePlugin plug-in on a cluster, you stop using other methods to install software updates from Microsoft on the cluster nodes.

Caution

Combining CAU with methods that update individual nodes automatically (on a fixed time schedule) can cause unpredictable results, including interruptions in service and unplanned downtime. For optimal results, we recommend that you disable settings on the cluster nodes for automatic updating, for example, through the Automatic Updates settings in Control Panel, or in settings that are configured using Group Policy.

Caution

Automatic installation of updates on the cluster nodes can interfere with installation of updates by CAU and can cause CAU failures. If they are needed, the following Automatic Updates settings are compatible with CAU, because the administrator can control the timing of update installation:

- Settings to notify before downloading updates and to notify before installation

- Settings to automatically download updates and to notify before installation

However, if Automatic Updates is downloading updates at the same time as a CAU Updating Run, the Updating Run might take longer to complete.
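
To see which Automatic Updates behavior a node is actually using, the policy values can be read from the registry. The sketch below is an assumption-laden illustration: it only inspects the Group Policy location for Automatic Updates on the local node, and the CauFriendly property is a hypothetical name introduced here to flag the compatible settings listed above.

```powershell
# Group Policy location for Automatic Updates settings (local node only).
$auKey = 'HKLM:\SOFTWARE\Policies\Microsoft\Windows\WindowsUpdate\AU'
$au = Get-ItemProperty -Path $auKey -ErrorAction SilentlyContinue

if ($null -eq $au) {
    'No Automatic Updates policy is configured under the Policies key on this node.'
}
else {
    # AUOptions: 2 = notify before download, 3 = download and notify before install,
    # 4 = download and install on a schedule (the case that can interfere with CAU).
    [pscustomobject]@{
        NoAutoUpdate = $au.NoAutoUpdate
        AUOptions    = $au.AUOptions
        # Hypothetical helper flag: True when updates are disabled or notify-only.
        CauFriendly  = ($au.NoAutoUpdate -eq 1) -or ($au.AUOptions -in 2, 3)
    }
}
```

If no policy is configured, the node falls back to its local update settings, which would need to be reviewed separately.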

Do not configure an update system such as Windows Server Update Services (WSUS) to apply updates automatically (on a fixed time schedule) to cluster nodes. All cluster nodes should be uniformly configured to use the same update source, for example, a WSUS server, Windows Update, or Microsoft Update.

If you use a configuration management system to apply software updates to computers on the network, exclude cluster nodes from all required or automatic updates. Examples of configuration management systems include Microsoft Endpoint Configuration Manager and Microsoft System Center Virtual Machine Manager 2008.

If internal software distribution servers (for example, WSUS servers) are used to contain and deploy the updates, ensure that those servers correctly identify the approved updates for the cluster nodes.