What is a React Native for Windows app?

When you create a React Native for Windows app targeting React Native’s old architecture, you will get a Universal Windows Platform app (aka UWP app).

The Universal Windows Platform allows you to access a set of common functionality on all Windows devices via the Windows Runtime (WinRT). WinRT APIs can be accessed from C++ (via C++/WinRT), or via .NET C#.

WinRT support in .NET

The current publicly supported version of .NET (.NET UWP) has built-in support for WinRT.

Win32 Desktop apps vs. RNW apps

Whether you are new to Windows development, or you are a Win32 desktop app veteran, the following FAQs should answer some common questions.

When you add Windows support to a React Native app via the steps described in Get Started with Windows, you will get a UWP app.

Note: By default the init-windows command creates a C++ app, but it is possible to create a C# app instead. The choice of language can affect performance and your ability to consume native modules, so if either of those matters to you, it’s highly recommended that you read Choosing C++ or C# for native code.

Regardless of the language of your app, RNW apps are UWP apps and therefore have the following characteristics:

API surface

The set of APIs these apps can access is a subset of all Windows APIs (i.e. those accessible via WinRT). See:

- Win32 and COM APIs for UWP apps

- CRT functions not supported in Universal Windows Platform apps

- Alternatives to Windows APIs in Universal Windows Platform (UWP) apps

Isolation

The app runs inside an app container, a type of sandbox. This gives apps a secure way to install and to access system resources like the filesystem, and lets the system manage their lifetime (e.g. suspending the app when it isn’t in the foreground). This means that by default an RNW app cannot access arbitrary filesystem locations, start arbitrary processes, etc. UWP apps that need these kinds of capabilities may be able to get them via App capability declarations.

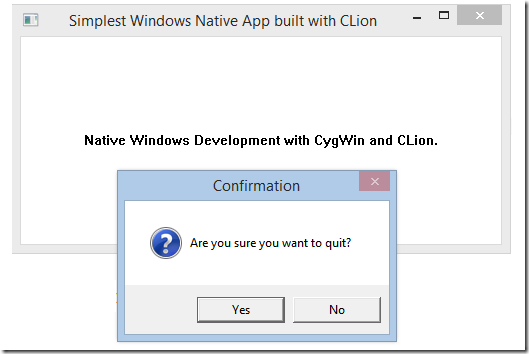

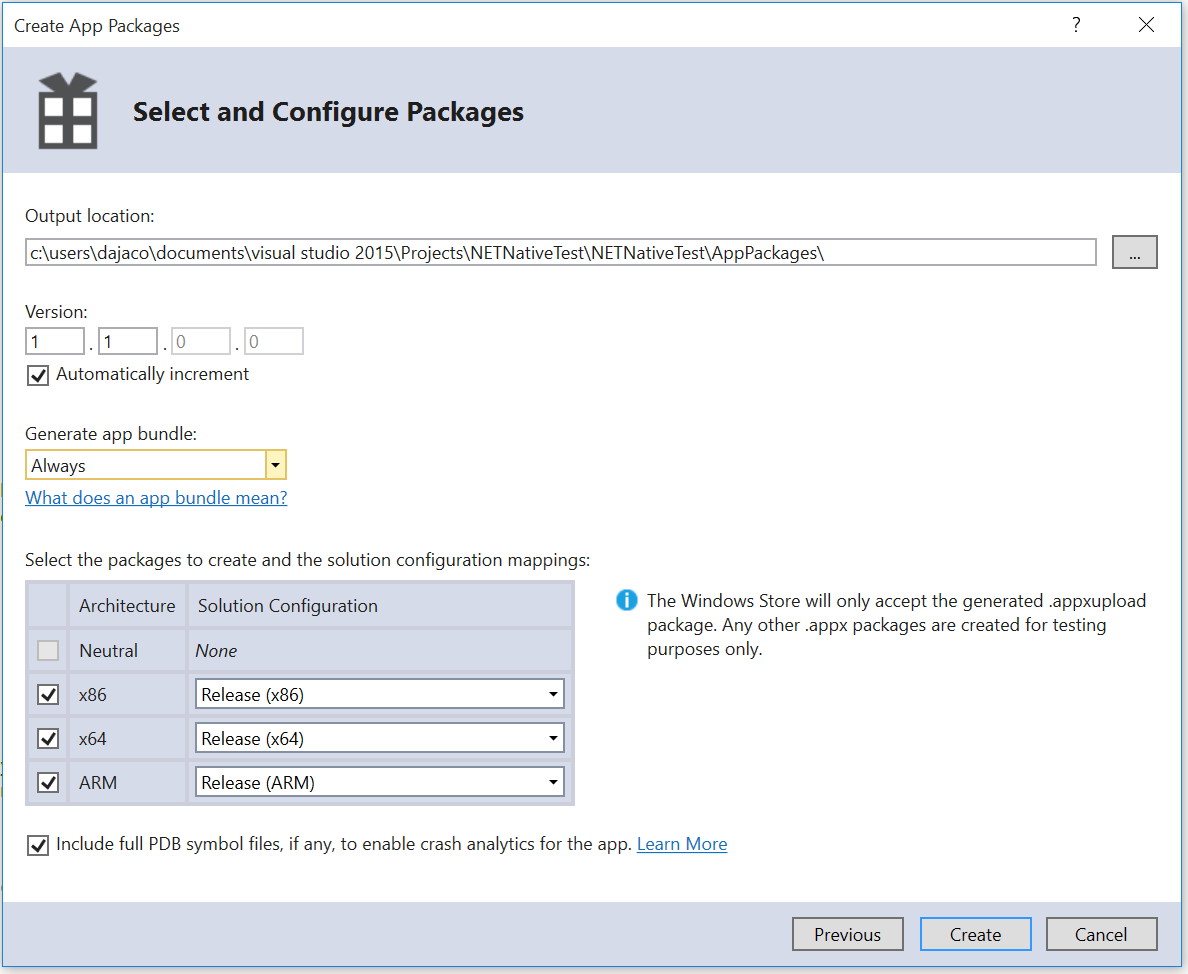

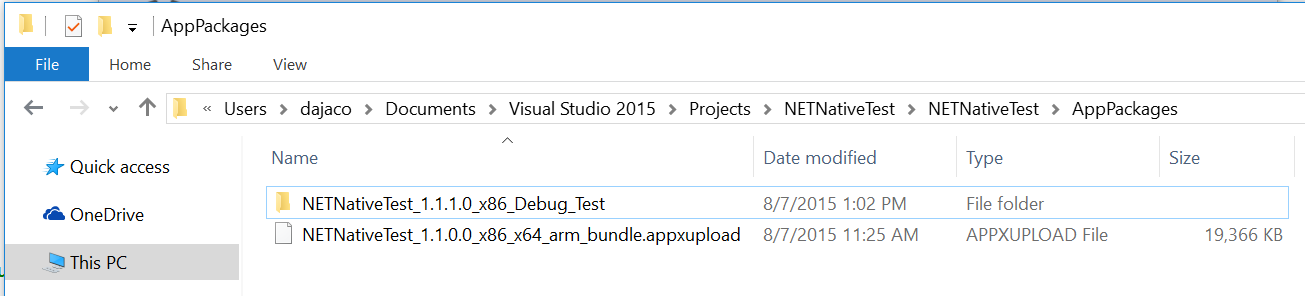

Packaging

React Native Windows apps are signed and packaged. Packaging is a mechanism through which an app and its dependencies acquire an identity, which is used to determine whether API calls that require system capabilities (e.g. filesystem access) should succeed or not.

Distribution

React Native Windows apps can be distributed, installed and updated in the following ways:

- via the Microsoft Store.

- via your private Store if you are a business or educational organization. See also Distribute LOB apps to enterprises.

- using App Installer.

It’s worth noting that you cannot just "copy an EXE", as the app package contains more than just the main executable: it also includes an app manifest, assets, dependent framework libraries, etc.

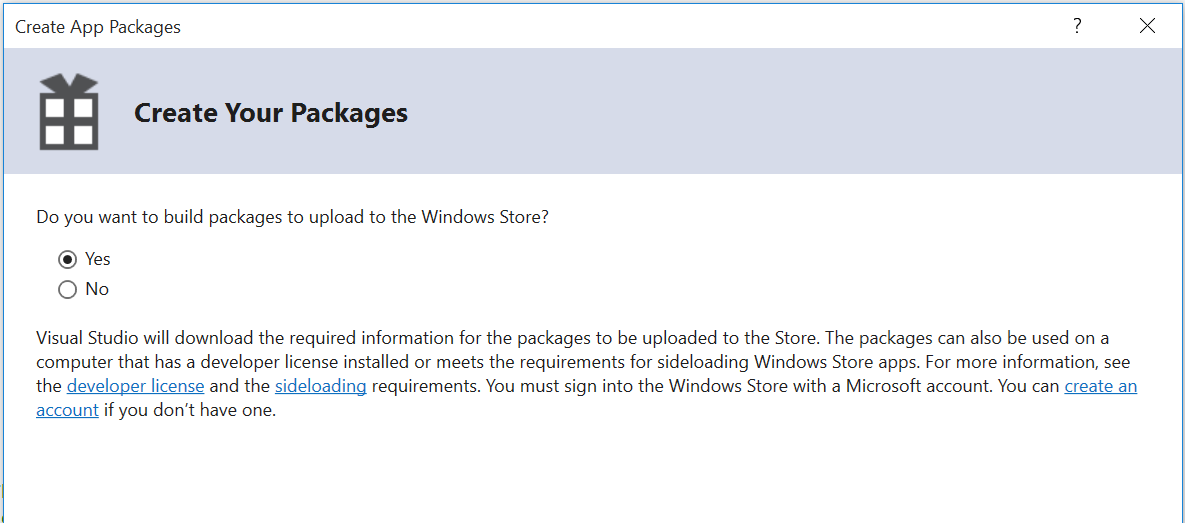

In addition, the Store submission process has these requirements:

- UWP apps submitted to the Store must pass Windows App Certification Kit (WACK) validation.

- UWP apps written in C# or other managed languages and submitted to the Store must be built using the .NET Native toolchain. This is the default when building C# apps in Release mode, but not in Debug, so apps built in Debug will be rejected by the Store.

Use of non-WinRT libraries

Any libraries you use should be built as WinRT components. In other words, you cannot easily link libraries built for Win32 desktop apps without additional work.

- C++/CX is a dialect of C++ that allows writing UWP apps, but it is not supported for writing an RNW app. The article How to use existing C++ code in a Universal Windows Platform app explains how to consume non-WinRT libraries in a WinRT context using C++/CX, and most of its content should also apply to C++/WinRT, which is the supported way to write RNW apps.

- See also the guide for moving from C++/CX to C++/WinRT.

- Libraries built for the desktop .NET Framework cannot be directly accessed from UWP. You can create a .NET Standard library that calls into the .NET Framework one, and call from the UWP app into that .NET Standard middleware.

Local testing and inner loop

For internal development, you can deploy your app for test purposes by side-loading and deploying via loose-file registration. When building in Debug mode (which is the default), the run-windows command performs loose-file registration of your app in order to install it locally. When building with the --release switch, the CLI will install the real package onto your local machine. This requires the app to be signed and for the certificate it uses for signing to be trusted by the machine the app is going to be installed on. See Create a certificate for package signing and Intro to certificates.

Debugging crashes and reporting issues

If your app is "hard crashing" (the native code hits an error condition and your app closes), you will want to investigate the native side of the code. If the issue is in the Microsoft.ReactNative layer, please file a bug in the React Native for Windows repo, and provide a native stack trace, and ideally a crash dump with symbols.

For your convenience, you can use a script to collect a native crash dump and stack traces. Here are the instructions:

- Download the script at https://aka.ms/RNW/analyze-crash.ps1, for example to C:\temp

- Open an admin PowerShell

- If you haven’t enabled running unsigned scripts yet, do that by running:

Set-ExecutionPolicy Unrestricted

- Run the script and pass it the name of your app’s exe (usually it will be your app’s name):

C:\temp\analyze-crash.ps1 -ExeName MyApp

The script will set up automatic crash dump collection for your app, download the native debugging tools (including the command line debugger cdb), and ask you to reproduce the crash.

At this point you can launch the app (e.g. from Start menu if you’ve already deployed it to the local device). When the app crashes, it will generate a crash dump. You can then press enter to resume execution of the script, and the script will use cdb to automatically analyze the crash dump, and output the results to a file analyze.log.

The script will then copy the contents of the log to the clipboard, open the log file in notepad, and launch the browser to file an issue in the react-native-windows repo, where you can paste the stack trace into the bug template.

- Windows Native Development Environment Setup Guide for Linux Users

- Introduction

- Installing Visual Studio, Some Packages and Scoop

- WinGet and Scoop notes

- Configure the Terminal

- Terminal Usage

- Scrolling and Searching in the Terminal

- Transparency (Old Method)

- Setting up an Editor

- Setting up Vim

- Neovim Terminal

- Setting up nano

- Setting up PowerShell

- Setting up ssh

- Setting up and Using Git

- Git Setup

- Using Git

- Dealing with Line Endings

- Setting up gpg

- Profile (Home) Directory Structure

- PowerShell Usage Notes

- Introduction

- Finding Documentation

- Commands, Parameters and Environment

- Values, Arrays and Hashes

- Redirection, Streams, $input and Exit Codes

- Command/Expression Sequencing Operators

- Commands and Operations on Filesystems and Filesystem-Like Objects

- Pipelines

- The Measure-Object Cmdlet

- Sub-Expressions and Strings

- Script Blocks and Scopes

- Using and Writing Scripts

- Writing Simple Modules

- Miscellaneous Usage Tips

- Elevated Access (sudo)

- Using PowerShell Gallery

- Available Command-Line Tools and Utilities

- Using BusyBox

- Using MSYS2

- Using GNU Make

- Using tmux with PowerShell

- Creating Scheduled Tasks (cron)

- Working With virt-manager VMs Using virt-viewer

- Using X11 Forwarding Over SSH

- Mounting SMB/SSHFS Folders

- Appendix A: Chocolatey Usage Notes

- Chocolatey Filesystem Structure

- Appendix B: Using tmux with PowerShell from WSL

Windows Native Development Environment Setup Guide for Linux Users

Introduction

This guide is intended for experienced developers familiar with Linux or other UNIX-like operating systems who want to set up a native Windows terminal development environment. I will walk you through setting up and using the package manager, terminal, vim, gpg, git, ssh, Visual Studio build tools, and PowerShell. I will explain basic PowerShell usage which will allow you to use it as a shell and write simple scripts.

This is a work in progress, and there are sometimes typos and grammatical or ordering mistakes as I keep editing it, or bugs in the $profile or setup code, so make any necessary adjustments.

I am planning to make many more expansions covering, for example, things like using cmake with vcpkg or Conan.

Your feedback via issues or pull requests on GitHub is appreciated.

Installing Visual Studio, Some Packages and Scoop

Make sure developer mode is turned on in Windows settings; this is necessary for making unprivileged symlinks. Also in developer settings, change the PowerShell execution policy to RemoteSigned.

- Press Win+X and open PowerShell (Administrator).

Run this script, which is in the repo. It installs some WinGet packages and the Visual Studio C++ workload, sets up the OpenSSH server and applies some quality-of-life settings.

If you want to use the Chocolatey package manager instead of WinGet and Scoop, see Appendix A: Chocolatey Usage Notes.

    [environment]::setenvironmentvariable('POWERSHELL_UPDATECHECK', 'off', 'machine')

    set-service beep -startuptype disabled

    echo Microsoft.VisualStudio.2022.Community 7zip.7zip gsass1.NTop Git.Git `
        GnuPG.GnuPG SourceFoundry.HackFonts Neovim.Neovim OpenJS.NodeJS `
        Notepad++.Notepad++ Microsoft.Powershell Python.Python.3.13 `
        SSHFS-Win.SSHFS-Win Microsoft.OpenSSH.Beta Microsoft.WindowsTerminal | %{
            winget install $_
        }

    iwr https://aka.ms/vs/17/release/vs_community.exe -outfile vs_community.exe

    ./vs_community.exe --passive --add 'Microsoft.VisualStudio.Workload.NativeDesktop;includeRecommended;includeOptional'

    start-process powershell '-noprofile', '-windowstyle', 'hidden', `
        '-command', "while (test-path $pwd/vs_community.exe) { sleep 5; ri -fo $pwd/vs_community.exe }"

    new-itemproperty -path "HKLM:\SOFTWARE\OpenSSH" -name DefaultShell -value '/Program Files/PowerShell/7/pwsh.exe' -propertytype string -force > $null

    $sshd_conf = '/programdata/ssh/sshd_config'

    $conf = gc $sshd_conf | %{ $_ -replace '^([^#].*administrators.*)','#$1' }
    $conf | set-content $sshd_conf

    set-service sshd -startuptype automatic
    set-service ssh-agent -startuptype automatic

    restart-service -force sshd
    restart-service -force ssh-agent

If winget exits abnormally, update this app from the Windows Store:

https://apps.microsoft.com/detail/9nblggh4nns1

If something fails in the script, run it again until everything succeeds.

- Press Win+X and open PowerShell (NOT Administrator).

Now run the user-mode install script, which installs Scoop and some Scoop packages of UNIX ports, and fixes the permissions of your ~/.ssh files. Ideally copy your ~/.ssh files over first, but you can do this later as well.

    ni -it sym ~/.config -tar ($env:USERPROFILE + '\AppData\Local') -ea ignore

    if (-not (test-path ~/scoop)) {
        iwr get.scoop.sh | iex
    }

    function scoop { & ~/scoop/apps/scoop/current/bin/scoop.ps1 @args }

    # BusyBox must be first in the installation order.
    scoop install busybox-lean base64 bc bind bzip2 dd diffutils dos2unix file gawk gettext grep gzip ipcalc less make openssl perl ripgrep sed tar zip unzip wget

    'arch ash basename cal cksum clear comm cp cpio cut date df dirname dpkg dpkg-deb du echo ed env expand expr factor false find fold fsync ftpget ftpput getopt hd head hexdump httpd ln logname lzcat lzma lzop lzopcat md5sum mktemp mv nc nl od paste pgrep pidof pipe_progress printenv printf ps pwd readlink realpath reset rev rm rmdir rpm rpm2cpio seq sh sha1sum sha256sum sha3sum sha512sum shred shuf sleep sort split ssl_client stat sum tac tail tee test time timeout touch tr true truncate ts ttysize uname uncompress unexpand uniq unlink unlzma unlzop unxz usleep uudecode uuencode vi watch wc which xargs xxd xz xzcat yes zcat'.split(' ') | %{
        scoop shim add $_ busybox $_
    }

    scoop bucket add extras
    scoop install mpv

    scoop bucket add nerd-fonts
    scoop install DejaVuSansMono-NF

    &(resolve-path /prog*s/openssh*/fixuserfilepermissions.ps1)
    import-module -force (resolve-path /prog*s/openssh*/opensshutils.psd1)
    repair-authorizedkeypermission -file ~/.ssh/authorized_keys

WinGet and Scoop notes

Update your WinGet packages from either a user or admin PowerShell, and your Scoop packages from a normal user PowerShell. Never run scoop in an elevated shell, only as the user.

Use winget search and scoop search to look for packages, the install subcommand to install them, and list to see locally installed packages.

To completely uninstall Scoop, run start (gi ~), select the scoop directory in your profile directory in the Explorer window and press SHIFT+DEL to wipe it. You may want to do this if you screw up your installation or want to run a newer version of the user install script.

Configure the Terminal

Launch the Windows Terminal and choose Settings from the tab drop-down; this will open the settings JSON in Visual Studio.

The configuration goes in two places: the global settings, above the "profiles" section, and the "profiles" "defaults" section.

The settings useAcrylic and opacity make the terminal transparent; leave those out or set opacity to 100 to turn this off.

I prefer the ‘SF Mono’ font, which you can get here:

https://github.com/supercomputra/SF-Mono-Font

Other fonts you might like are IBM Plex Mono, which you can install from:

https://github.com/IBM/plex

and ‘DejaVu Sans Mono’, which was in the list of packages.

The Terminal also comes with a nice new Microsoft font called "Cascadia Code"; if you leave out the "face": "<name>", line, it will use it instead.

You can get the newest version of Cascadia Code and the version with Powerline glyphs called "Cascadia Code PL" from here:

https://github.com/microsoft/cascadia-code/releases?WT.mc_id=-blog-scottha

You will need it if you decide to use the oh-my-posh prompt described in Setting up PowerShell.

If you want a font that is very legible at very small sizes for more screen real estate, try:

https://github.com/koemaeda/gohufont-ttf

Install just the uni-11.ttf file.

This font looks terrible with the default ClearType settings, so you will want to run the ClearType Tuner and choose the faintest, least-sharp variants. Another option is to use MacType, which makes fonts use greyscale antialiasing.

In the profile list section, in the entry that lists:

, add this:

You can do the same for the "Windows PowerShell" profile if you like.

The keybindings go in the "actions" section. REMOVE the CTRL+V binding from that section if you want to use CTRL+V in vim (visual block selection).

This gives you a sort of "tmux" for PowerShell using tabs, and binds keys to find next/previous match.

Note that CTRL+SHIFT+N is bound by default to opening a new window and CTRL+SHIFT+P is bound by default to opening the command palette. If you need these, rebind them or the original actions to something else.

Restart the terminal.

Terminal Usage

You can toggle full-screen mode with F11.

SHIFT+ALT++ will open a split pane vertically, while SHIFT+ALT+- will open a split pane horizontally. This works in full-screen as well.

You can paste with the right mouse button, SHIFT+INSERT and CTRL+SHIFT+V. To copy text with "copyOnSelect" enabled, simply select it; otherwise, select it and press CTRL+SHIFT+C.

The documentation for the terminal and a lot of other good information is here:

https://docs.microsoft.com/en-us/windows/terminal/

Scrolling and Searching in the Terminal

These are the scrolling keybinds available with this configuration:

| Key | Action |
|---|---|
| CTRL+SHIFT+PGUP | Scroll one page up. |
| CTRL+SHIFT+PGDN | Scroll one page down. |
| CTRL+SHIFT+UP | Scroll X lines up. |
| CTRL+SHIFT+DOWN | Scroll X lines down. |

With this configuration, CTRL+SHIFT+UP/DOWN scroll by 1 line. You can change this to any number of lines by adjusting the rowsToScroll parameter. You can also add further keybindings for the same action, using a different key combination and a different rowsToScroll value.
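As a rough sketch of what such an extra binding could look like, the PowerShell snippet below builds one entry for the "actions" list and prints it as JSON. The scrollUp action and its rowsToScroll parameter are documented Windows Terminal settings; the ctrl+alt+up key chord and the value 5 are arbitrary choices for illustration and are not part of the configuration described above.

```powershell
# Build an extra Windows Terminal keybinding that scrolls 5 rows at a time.
# The key chord and the row count are illustrative assumptions; pick your own.
$binding = [ordered]@{
    command = [ordered]@{
        action       = 'scrollUp'
        rowsToScroll = 5
    }
    keys = 'ctrl+alt+up'
}

# Emit JSON that can be pasted into the "actions" array of settings.json.
$json = $binding | ConvertTo-Json -Depth 3
$json
```

A matching scrollDown entry would follow the same shape with a different key chord.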

You can scroll with your mouse scrollwheel, assuming that there is no active application controlling the mouse.

For searching scrollback with this configuration, follow this process:

- Press CTRL+SHIFT+F and type in your search term in the search box that pops up in the upper right; the term is case-insensitive.

- Press ESC to close the search box.

- Press CTRL+SHIFT+N to find the first match going up; the match will be highlighted.

- Press CTRL+SHIFT+P to find the first match going down below the current match.

- To change the search term, press CTRL+SHIFT+F again, type in the new term, and press ESC.

You can scroll the terminal while a search is active and your match position will be preserved.

Transparency (Old Method)

The transparency configuration in the terminal described above works correctly with neovim but not regular vim. For older versions of Terminal, or to get transparency in regular vim, use the AutoHotkey method. You can install AutoHotkey from WinGet using the id AutoHotkey.AutoHotkey.

This is the AutoHotkey script:

    #NoEnv
    SendMode Input
    SetWorkingDir %A_ScriptDir%

    ; Toggle window transparency.
    #^Esc::
    WinGet, TransLevel, Transparent, A
    If (TransLevel = 255) {
        WinSet, Transparent, 180, A
    } Else {
        WinSet, Transparent, 255, A
    }
    return

This will toggle transparency in a window when you press CTRL+WIN+ESC; you have to press it twice the first time.

Thanks to @munael for this tip.

Note that this will not work for the Administrator PowerShell window unless you run AutoHotkey with Administrator privileges; you can do that on logon by creating a task in the Task Scheduler.

Setting up an Editor

In this section I will describe how to set up a couple of editors.

You can also edit files in the Visual Studio IDE using the devenv command.

You can use notepad, which is in your $env:PATH already, or notepad++.

If you want a very simple terminal editor that is easy to use, you can use nano; it has nice syntax highlighting too.

Make sure $env:EDITOR is set to the executable or .bat file that launches your editor, with backslashes replaced with forward slashes, and make sure that it does not contain any spaces. Set it in your $profile so that git can use it for commit messages. For example:

    $private:nano = resolve-path ~/.local/bin/nano.exe
    $env:EDITOR = $nano -replace '\\','/'

This will also work well with things you use from UNIX-compatible environments like Cygwin, MSYS2, etc. if you end up doing that.

The profile function shortpath will do this for you.
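The two requirements above (forward slashes, no spaces) can be checked with a small helper. This is only a minimal sketch: the function name ConvertTo-EditorPath and the example path are made up for illustration and are not part of the included profile, whose shortpath function goes further by also replacing path components that contain spaces with their short names.

```powershell
# Hypothetical helper: normalize a path for use in $env:EDITOR.
function ConvertTo-EditorPath([string]$Path) {
    # Replace every backslash with a forward slash.
    $converted = $Path -replace '\\', '/'

    # Refuse paths with whitespace instead of silently producing a broken value.
    if ($converted -match '\s') {
        throw "editor path contains whitespace: $converted"
    }

    $converted
}

# The example path is an assumption; substitute the location of your editor.
$editor = ConvertTo-EditorPath 'C:\Users\me\.local\bin\nano.exe'
$editor
```

Failing loudly on whitespace is a deliberate choice here, since a value with spaces tends to break only later, when Git tries to launch the editor.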

Another option is to set it in Git config, which will override the environment variables, for example:

git config --global core.editor (get-command notepad++).source

Setting up Vim

I recommend using Neovim on Windows because it has working mouse support and is almost 100% compatible with vim. It also works correctly with transparency in Windows Terminal with a black background, unlike the port of regular vim.

If you want to use the regular vim, the WinGet id is vim.vim.

If you are using neovim only, you can copy your ~/.config/nvim over directly to ~/AppData/Local/nvim.

You can edit your PowerShell profile with vim $profile, and reload it with . $profile.
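The leading dot matters: it dot-sources the script, so its variables, functions and aliases land in your current session rather than in a child scope. Below is a minimal sketch of the difference, using a throwaway script in the temp directory. The file and variable names are arbitrary, and it assumes your execution policy allows running local scripts (for example RemoteSigned, as set earlier).

```powershell
# Write a tiny script that only sets a variable.
$demo_script = Join-Path ([System.IO.Path]::GetTempPath()) 'dot-source-demo.ps1'
Set-Content -Path $demo_script -Value '$demo_value = 42'

# The call operator runs the script in its own scope, so nothing leaks out.
& $demo_script
$after_call = $demo_value

# Dot-sourcing runs it in the current scope, like reloading $profile.
. $demo_script
$after_dot = $demo_value

Remove-Item $demo_script
"after call: '$after_call', after dot-source: '$after_dot'"
```

In a fresh session $after_call stays empty while $after_dot holds the value set by the script, which is why a profile has to be dot-sourced for its changes to take effect.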

Look at the included $profile for how to set up a vim alias and set $env:EDITOR so that it will work with Git.

Some suggestions for your ~/.vimrc, all of which work in both vims:

    set encoding=utf8
    set langmenu=en_US.UTF-8
    language en
    let g:is_bash=1
    set formatlistpat=^\\s*\\%([-*][\ \\t]\\\|\\d+[\\]:.)}\\t\ ]\\)\\s*
    set ruler bg=dark nohlsearch bs=2 noea ai fo+=n undofile belloff=all modeline modelines=5
    set fileformats=unix,dos
    set mouse=a
    set clipboard=unnamedplus

    " Add vcpkg includes to include search path to get completions for C++.
    " $HOME has no trailing slash, so the joined path needs a leading one.
    if isdirectory($HOME . '/source/repos/vcpkg/installed/x64-windows/include')
        let &path .= ',' . $HOME . '/source/repos/vcpkg/installed/x64-windows/include'
    endif
    if isdirectory($HOME . '/source/repos/vcpkg/installed/x64-windows-static/include')
        let &path .= ',' . $HOME . '/source/repos/vcpkg/installed/x64-windows-static/include'
    endif

    if !has('gui_running') && match($TERM, "screen") == -1
        set termguicolors
        au ColorScheme * hi Normal ctermbg=0
    endif

    if has('gui_running')
        au ColorScheme * hi Normal guibg=#000000
        if has('win32')
            set guifont=Cascadia\ Code:h11:cANSI
        endif
    endif

    if has('win32') || has('gui_win32')
        if executable('pwsh')
            set shell=pwsh
        else
            set shell=powershell
        endif
        set shellquote= shellpipe=\| shellredir=> shellxquote=
        set shellcmdflag=-nologo\ -noprofile\ -executionpolicy\ remotesigned\ -noninteractive\ -command
    endif

    filetype plugin indent on
    syntax enable

    au BufRead COMMIT_EDITMSG,*.md setlocal spell
    au BufRead *.md setlocal tw=80
    au FileType json setlocal ft=jsonc sw=4 et

    if has('nvim')
        au TermOpen,TermEnter * startinsert
    endif

    " Return to last edit position when opening files.
    autocmd BufReadPost *
         \ if line("'\"") > 0 && line("'\"") <= line("$") |
         \   exe "normal! g`\"" |
         \ endif

    " Fix syntax highlighting on CTRL+L.
    noremap <C-L> <Esc>:syntax sync fromstart<CR>:redraw<CR>
    inoremap <C-L> <C-o>:syntax sync fromstart<CR><C-o>:redraw<CR>

    " Markdown
    let g:markdown_fenced_languages = ['css', 'javascript', 'js=javascript', 'json=javascript', 'jsonc=javascript', 'xml', 'ps1', 'powershell=ps1', 'sh', 'bash=sh', 'autohotkey', 'vim', 'sshconfig', 'dosbatch', 'gitconfig']

You can use Plug or pathogen or whatever you prefer to install plugins.

I highly recommend subscribing to GitHub Copilot and using the vim plugin, which you can get here:

https://github.com/github/copilot.vim

I use this color scheme, which is a fork of Apprentice for black backgrounds:

https://github.com/rkitover/Apprentice

You’ll probably want the PowerShell support for vim, including syntax highlighting, which is here:

https://github.com/PProvost/vim-ps1

I also use vim-sleuth to detect indent settings and vim-markdown for better markdown support, including syntax highlighting in code blocks.

Neovim Terminal

Neovim has a built-in terminal that works perfectly on Windows, including when running in tmux. You can open a terminal window on the bottom with :botright terminal. Enter insert mode to use the terminal and press C-\ C-n to return to normal mode.

You can make a mapping to make this more convenient, for example:

noremap <leader>t :botright terminal<CR>

With this, the mapping is \t by default, or t preceded by whatever you set mapleader to. When the terminal process exits, press Enter to close the window. See :help terminal for more information.

Neovim will refuse to quit if a terminal process is running, in which case, save your files and use :qa! to force quit.

Setting up nano

Run the following script, which is in this repo:

    $erroractionpreference = 'stop'

    $releases = 'https://files.lhmouse.com/nano-win/'

    ri -r -fo ~/nano-installer -ea ignore
    mkdir ~/nano-installer | out-null
    pushd ~/nano-installer
    curl -sLO ($releases + (
        iwr -usebasicparsing $releases | % links |
        ? href -match '\.7z$' | select -last 1 | % href
    ))
    7z x nano*.7z | out-null
    mkdir ~/.local/bin -ea ignore | out-null
    cpi -fo pkg_x86_64*/bin/nano.exe ~/.local/bin
    mkdir ~/.nano -ea ignore | out-null
    git clone https://github.com/scopatz/nanorc *> $null
    gci -r nanorc -i *.nanorc | cpi -dest ~/.nano
    popd
    ("include `"" + (($env:USERPROFILE -replace '\\','/') `
        -replace '^[^/]+','').tolower() + `
        "/.nano/*.nanorc`"") >> ~/.nanorc
    ri -r -fo ~/nano-installer
    gi ~/.nanorc,~/.nano,~/.local/bin/nano.exe

Make sure ~/.local/bin is in your $env:PATH and set $env:EDITOR in your $profile as follows:

$env:EDITOR = (get-command nano).source -replace '\\','/'

or configure Git like so:

git config --global core.editor (get-command nano).source
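One way to make sure a directory such as ~/.local/bin is on $env:PATH without adding it twice is sketched below. The function name Add-PathEntry is hypothetical, and this is a simplified take on what the included profile does with its $extra_paths table, not a copy of it.

```powershell
# Hypothetical helper: prepend a directory to a PATH-style list unless present.
function Add-PathEntry {
    param(
        [string]$PathList,
        [string]$Dir,
        [string]$Separator = [System.IO.Path]::PathSeparator
    )

    # Split on the separator and drop empty entries.
    $entries = @($PathList -split [regex]::Escape($Separator) | Where-Object { $_ })

    # -contains compares strings case-insensitively, which suits Windows paths.
    if ($entries -contains $Dir) {
        return $PathList
    }

    (@($Dir) + $entries) -join $Separator
}

# Usage in a profile, guarded so that a missing directory is simply skipped.
if (Test-Path ~/.local/bin) {
    $env:PATH = Add-PathEntry $env:PATH (Resolve-Path ~/.local/bin).Path
}
```

Keeping the function free of side effects (it only returns the new list) makes it easy to try on a sample string before pointing it at the real $env:PATH.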

Setting up PowerShell

To install the pretty oh-my-posh prompt, run this:

winget install jandedobbeleer.ohmyposh

The profile below will set it up for you. You will need a font with Powerline glyphs, like "Cascadia Code PL"; see Configure the Terminal.

If you want to use my posh-git theme, install the module posh-git-theme-bluelotus from PSGallery.

You can also install posh-git and make your own customizations.

Here is a profile to get you started. It has a few examples of functions and aliases which you will invariably write for yourself.

Open your $profile in your editor to edit it. If you cloned this repo, you can dot-source mine in yours by adding this:

. ~/source/repos/windows-dev-guide/profile.ps1

You can also link or copy this profile to yours and add your own things in ~/Documents/PowerShell/private-profile.ps1, which will be automatically read with the path set in $profile_private. Or just copy the parts you are interested in to yours.

# Windows PowerShell does not have OS automatic variables. if (-not (test-path variable:global:iswindows)) { $global:IsWindows = $false $global:IsLinux = $false $global:IsMacOS = $false if (get-command get-cimsession -ea ignore) { $global:IsWindows = $true } elseif (test-path /System/Library/Extensions) { $global:IsMacOS = $true } else { $global:IsLinux = $true } } import-module packagemanagement,powershellget if ($iswindows) { [Console]::OutputEncoding = [Console]::InputEncoding ` = $OutputEncoding = new-object System.Text.UTF8Encoding set-executionpolicy -scope currentuser remotesigned [System.Globalization.CultureInfo]::CurrentCulture = 'en-US' if ($private:chocolatey_profile = resolve-path ( "$env:chocolateyinstall\helpers\chocolateyprofile.psm1"` ) -ea ignore) { import-module $chocolatey_profile } if (get-command -ea ignore update-sessionenvironment) { update-sessionenvironment } # Tell Chocolatey to not add code to $profile. $env:ChocolateyNoProfile = 'yes' } elseif (-not $env:LANG) { $env:LANG = 'en_US.UTF-8' } # Make help nicer. $psdefaultparametervalues["get-help:full"] = $true $env:PAGER = 'less' # Turn on these options for less: # -Q,--QUIET # No bells. # -r,--raw-control-chars # Show ANSI colors. # -X,--no-init # No term init, does not use alt screen. # -F,--quit-if-one-screen # -K,--quit-on-intr # Quit on CTRL-C immediately. # --mouse # Scroll with mouse wheel. $env:LESS = '-Q$-r$-X$-F$-K$--mouse' new-module MyProfile -script { $path_sep = [system.io.path]::pathseparator $dir_sep = [system.io.path]::directoryseparatorchar $global:ps_share_dir = if ($iswindows) { '~/AppData/Roaming/Microsoft/Windows/PowerShell' } else { '~/.local/share/powershell' } function split_env_path { $env:PATH -split $path_sep | ? length | %{ resolve-path $_ -ea ignore | % path } | ? 
length } function curdrive { if ($iswindows) { $pwd.drive.name + ':' } } function trim_curdrive($str) { if (-not $str) { $str = $input } if (-not $iswindows) { return $str } $str -replace ('^'+[regex]::escape((curdrive))),'' } function home_to_tilde($str) { if (-not $str) { $str = $input } $home_dir_re = [regex]::escape($home) $dir_sep_re = [regex]::escape($dir_sep) $str -replace ('^'+$home_dir_re+"($dir_sep_re"+'|$)'),'~$1' } function backslashes_to_forward($str) { if (-not $str) { $str = $input } if (-not $iswindows) { return $str } $str -replace '\\','/' } function global:remove_path_spaces($path) { if (-not $path) { $path = $($input) } if (-not $iswindows) { return $path } if (-not $path) { return $path } $parts = while ($path -notmatch '^\w+:[\\/]$') { $leaf = split-path -leaf $path $path = split-path -parent $path $fs = new-object -comobject scripting.filesystemobject if ($leaf -match ' ') { $leaf = if ((gi "${path}/$leaf").psiscontainer) { split-path -leaf $fs.getfolder("${path}/$leaf").shortname } else { split-path -leaf $fs.getfile("${path}/$leaf").shortname } } $leaf.tolower() } if ($parts) { [array]::reverse($parts) } $path = $path -replace '[\\/]+', '' $path + '/' + ($parts -join '/') } function global:shortpath($str) { if (-not $str) { $str = $($input) } $str | resolve-path -ea ignore | % path ` | remove_path_spaces | trim_curdrive | backslashes_to_forward } function global:realpath($str) { if (-not $str) { $str = $($input) } $str | resolve-path -ea ignore | % path ` | remove_path_spaces | backslashes_to_forward } function global:syspath($str) { if (-not $str) { $str = $($input) } $str | resolve-path -ea ignore | % path } if ($iswindows) { # Replace OneDrive Documents path in $profile with ~/Documents # symlink, if you have one. if ((gi ~/Documents -ea ignore).target -match 'OneDrive') { $global:profile = $profile -replace 'OneDrive\\','' } # Remove Strawberry Perl MinGW stuff from PATH. 
    $env:PATH = (split_env_path | ?{ $_ -notmatch '\bStrawberry\\c\\bin$' } ) -join $path_sep

    # Add npm module bin wrappers to PATH.
    if (resolve-path ~/AppData/Roaming/npm -ea ignore) {
        $env:PATH += ';' + (gi ~/AppData/Roaming/npm)
    }
}

$global:profile = $profile | shortpath
$global:ps_config_dir = split-path $profile -parent
$global:ps_history = "$ps_share_dir/PSReadLine/ConsoleHost_history.txt"

if ($iswindows) {
    $global:terminal_settings = resolve-path ~/AppData/Local/Packages/Microsoft.WindowsTerminal_*/LocalState/settings.json -ea ignore | shortpath
    $global:terminal_settings_preview = resolve-path ~/AppData/Local/Packages/Microsoft.WindowsTerminalPreview_*/LocalState/settings.json -ea ignore | shortpath

    if (-not $global:terminal_settings -and $global:terminal_settings_preview) {
        $global:terminal_settings = $global:terminal_settings_preview
    }
}

$extra_paths = @{
    prepend = '~/.local/bin'
    append = '~/AppData/Roaming/Python/Python*/Scripts',
             '/program files/VcXsrv'
}

foreach ($section in $extra_paths.keys) {
    foreach ($path in $extra_paths[$section]) {
        if (-not ($path = resolve-path $path -ea ignore)) {
            continue
        }

        if (-not ((split_env_path) -contains $path)) {
            $env:PATH = $(if ($section -eq 'prepend') {
                $path,$env:PATH
            }
            else {
                $env:PATH,$path
            }) -join $path_sep
        }
    }
}

if (-not $env:TERM) {
    $env:TERM = 'xterm-256color'
}
elseif ($env:TERM -match '^(xterm|screen|tmux)$') {
    $env:TERM = $matches[0] + '-256color'
}

if (-not $env:COLORTERM) {
    $env:COLORTERM = 'truecolor'
}

if (-not $env:ENV) {
    $env:ENV = shortpath ~/.shrc
}

if (-not $env:VCPKG_ROOT) {
    $env:VCPKG_ROOT = resolve-path ~/source/repos/vcpkg -ea ignore
}

if ($iswindows) {
    # Load VS env only once.
    :OUTER foreach ($vs_year in '2022','2019','2017') {
        foreach ($vs_type in 'preview','buildtools','community') {
            foreach ($x86 in '',' (x86)') {
                $vs_path="/program files${x86}/microsoft visual studio/${vs_year}/${vs_type}/Common7/Tools"

                if (test-path $vs_path) {
                    break OUTER
                }
                else {
                    $vs_path=$null
                }
            }
        }
    }

    if ($vs_path) {
        $default_host_arch,$default_arch = if ($env:PROCESSOR_ARCHITECTURE -ieq 'AMD64') {
            'amd64','amd64'
        }
        elseif ($env:PROCESSOR_ARCHITECTURE -ieq 'ARM64') {
            'arm64','arm64'
        }
        elseif ($env:PROCESSOR_ARCHITECTURE -ieq 'X86') {
            'x86','x86'
        }

        function global:vsenv($arch, $hostarch) {
            if (-not $arch) { $arch = $default_arch }
            if (-not $hostarch) { $hostarch = $default_host_arch }

            $saved_vcpkg_root = $env:VCPKG_ROOT

            & $vs_path/Launch-VsDevShell.ps1 -hostarch $hostarch -arch $arch -skipautomaticlocation

            if ($saved_vcpkg_root) {
                $env:VCPKG_ROOT = $saved_vcpkg_root
            }
        }

        vsenv $default_arch $default_host_arch
    }
}

if ($env:VCPKG_ROOT -and (test-path $env:VCPKG_ROOT)) {
    $global:vcpkg_toolchain = $env:VCPKG_ROOT + '/scripts/buildsystems/vcpkg.cmake'

    if ($iswindows) {
        $env:VCPKG_DEFAULT_TRIPLET = if (test-path $env:VCPKG_ROOT/installed/${env:Platform}-windows-static) `
            { "${env:Platform}-windows-static" } else { "${env:Platform}-windows" }

        $env:LIB = $env:LIB + ';' + $env:VCPKG_ROOT + '/installed/' + $env:VCPKG_DEFAULT_TRIPLET + '/lib'
        $env:INCLUDE = $env:INCLUDE + ';' + $env:VCPKG_ROOT + '/installed/' + $env:VCPKG_DEFAULT_TRIPLET + '/include'
    }
}

if (-not $env:DISPLAY) {
    $env:DISPLAY = '127.0.0.1:0.0'
}

if (-not $env:XAUTHORITY) {
    $env:XAUTHORITY = join-path $home .Xauthority

    if (-not (test-path $env:XAUTHORITY) `
        -and (
          ($xauth = (get-command -commandtype application xauth -ea ignore).source) `
          -or ($xauth = (gi '/program files/VcXsrv/xauth.exe' -ea ignore).fullname) `
        )) {

        $cookie = (1..4 | %{ "{0:x8}" -f (get-random) }) -join ''

        xauth add ':0' . $cookie | out-null
    }
}

function global:megs {
    if (-not $args) { $args = $input }

    gci @args | select mode, lastwritetime, @{ name="MegaBytes"; expression={ [math]::round($_.length / 1MB, 2) }}, name
}

function global:cmconf {
    sls 'CMAKE_BUILD_TYPE|VCPKG_TARGET_TRIPLET|UPSTREAM_RELEASE' CMakeCache.txt
}

function global:cmclean {
    ri -r CMakeCache.txt,CMakeFiles -ea ignore
}

# Windows PowerShell does not have Remove-Alias.
function global:rmalias($alias) {
    # Use a loop to remove aliases from all scopes.
    while (test-path "alias:\$alias") {
        ri -force "alias:\$alias"
    }
}

function is_ext_cmd($cmd) {
    (get-command $cmd -ea ignore).commandtype `
        -cmatch '^(Application|ExternalScript)$'
}

# Check if invocation of external command works correctly.
function ext_cmd_works($exe) {
    $works = $false

    if (-not (is_ext_cmd $exe)) {
        write-error 'not an external command' -ea stop
    }

    $($input | &$exe @args | out-null; $works = $?) 2>&1 `
        | sv err_out

    $works -and -not $err_out
}

function global:%? { $input | %{ $_ } | ?{ $_ } }

function global:which {
    $cmd = try { get-command @args -ea stop | select -first 1 }
           catch { write-error $_ -ea stop }

    if (is_ext_cmd $cmd) {
        $cmd = $cmd.source | shortpath
    }
    elseif ($cmd.commandtype -eq 'Alias' `
            -and (is_ext_cmd $cmd.Definition)) {

        $cmd = $cmd.definition | shortpath
    }

    $cmd
}

rmalias type

function global:type {
    try { which @args }
    catch { write-error $_ -ea stop }
}

function global:command {
    # Remove -v etc. for now.
    if ($args[0] -match '^-') { $null,$args = $args }

    try {
        which @args -commandtype application,externalscript
    }
    catch { write-error $_ -ea stop }
}

function ver_windows {
    $osver = [environment]::osversion.version
    $major = $osver.major
    $build = $osver.build

    # Windows 11 reports major version 10; its first build is 22000.
    if ($major -eq 10 -and $build -ge 22000) {
        $major = 11
    }

    try {
        $arch = [System.Runtime.InteropServices.RuntimeInformation,mscorlib]::OSArchitecture
    }
    catch {}

    'Windows {0} build {1}{2}' `
        -f $major, $build, $(if ($arch) { " $arch" })
}

function global:ver {
    if ($iswindows) {
        ver_windows
    }
    else {
        $uname_parts = $(if ($islinux) { 'sri' } elseif ($ismacos) { 'srm' } ).getenumerator() | %{ uname "-$_" }

        # Remove -xxx-xxx suffixes from kernel versions.
        if ($islinux) {
            $uname_parts[1] = $uname_parts[1] -replace '-.*',''
        }

        "{0} kernel {1} {2}" -f $uname_parts
    }
}

function global:mklink {
    $usage = 'args: [link] target'

    $args = $args | %{ $_ } | ? length

    if (-not $args) { $args = @($input) }

    while ($args.count -gt 2 -and $args[0] -match '^/[A-Z]$') {
        $null,$args = $args
    }

    if (-not $args -or $args.count -gt 2) {
        write-error $usage -ea stop
    }

    $link,$target = $args

    if (-not $target) {
        $target = $link

        if (-not (split-path -parent $target)) {
            write-error ($usage + "`n" + 'cannot make link with the same name as target') -ea stop
        }

        $link = split-path -leaf $target
    }

    if (-not ($link_parent = split-path -parent $link)) {
        $link_parent = get-location | % path
    }
    if (-not ($target_parent = split-path -parent $target)) {
        $target_parent = get-location | % path
    }

    $link_parent = try {
        $link_parent | resolve-path -ea stop | % path
    }
    catch { write-error $_ -ea stop }

    if (-not (resolve-path $target -ea ignore)) {
        write-warning "target '${target}' does not yet exist"
    }

    $absolute = @{
        link = join-path $link_parent (split-path -leaf $link)
        target = join-path $target_parent (split-path -leaf $target)
    }

    $home_dir_re = [regex]::escape($home)
    $dir_sep_re = [regex]::escape($dir_sep)

    $in_home = @{}

    $absolute.getenumerator() | %{
        if ($_.value -match ('^'+$home_dir_re+"($dir_sep_re"+'|$)')) {
            $in_home[$_.key] = $true
        }
    }

    # If target is in home, make sure ~ is resolved.
    #
    # Make sure relative links are relative to link parent
    # (::ispathrooted() does not understand ~ paths and considers
    # them relative.)
    #
    # And if both link and target are in home dir, force relative
    # link, this is to make backups/copies/moves and SMB shares of
    # the home/profile dir easier and less error-prone.
    $target = if (-not (
                  $in_home.target `
                  -or [system.io.path]::ispathrooted($target)
              ) -or $in_home.count -eq 2) {

        pushd $link_parent
        resolve-path -relative $absolute.target
        popd
    }
    else {
        $absolute.target
    }

    if (-not $iswindows -or $psversiontable.psversion.major -ge 6) {
        # PSCore.
        try {
            new-item -itemtype symboliclink $absolute.link `
                -target $target -ea stop
        }
        catch { write-error $_ -ea stop }
    }
    else {
        # WinPS or older.
        $params = @(
            if (test-path -pathtype container $target) { '/D' }
        )
        cmd /c mklink @params $absolute.link $target
        if (-not $?) { write-error "exited: $lastexitcode" -ea stop }
    }
}

function global:rmlink {
    $args = @($input),$args | %{ $_ } | ? length

    if (-not $args) {
        write-error 'args: link1 [link2 ...]' -ea stop
    }

    $args | %{
        try { $_ = gi $_ -ea stop }
        catch { write-error $_ -ea stop }

        if (-not $_.target) {
            write-error "$_ is not a symbolic link" -ea stop
        }

        if ((test-path -pathtype container $_) `
            -and $iswindows `
            -and $psversiontable.psversion.major -lt 7) {

            # In WinPS remove-item does not work for dir links.
            cmd /c rmdir $_
            if (-not $?) { write-error "exited: $lastexitcode" -ea stop }
        }
        else {
            try { ri $_ }
            catch { write-error $_ -ea stop }
        }
    }
}

# Find neovim or vim and set $env:EDITOR, prefer neovim.
if ($iswindows) {
    $vim = ''

    $locs = { (get-command nvim.exe @args).source },
            { resolve-path /tools/neovim/nvim*/bin/nvim.exe @args },
            { (get-command vim.exe @args).source },
            { (get-command vim.bat @args).source },
            { resolve-path /tools/vim/vim*/vim.exe @args }

    foreach ($loc in $locs) {
        if ($vim = &$loc -ea ignore) { break }
    }

    if ($vim) {
        set-alias vim -value $vim -scope global

        if ($vim -match 'nvim') {
            set-alias nvim -value $vim -scope global
        }

        $env:EDITOR = realpath $vim
    }
}
else {
    $env:EDITOR = 'vim'
}

# Windows PowerShell does not support the `e special character
# sequence for Escape, so we use a variable $e for this.
$e = [char]27

if ($iswindows) {
    function global:pgrep($pat) {
        if (-not $pat) { $pat = $($input) }

        get-ciminstance win32_process -filter "name like '%${pat}%' OR commandline like '%${pat}%'" | select ProcessId,Name,CommandLine
    }

    function global:pkill($proc) {
        if (-not $proc) { $proc = $($input) }

        # $pid is a read-only automatic variable, so use another name.
        if ($proc_id = $proc.ProcessId) {
            stop-process $proc_id
        }
        else {
            pgrep $proc | %{ stop-process $_.ProcessId }
        }
    }

    function format-eventlog {
        $input | %{
            ("$e[95m[$e[34m" + ('{0:MM-dd} ' -f $_.timecreated) `
            + "$e[36m" + ('{0:HH:mm:ss}' -f $_.timecreated) `
            + "$e[95m]$e[0m " `
            + $_.message) | out-string
        }
    }

    function global:syslog {
        get-winevent -log system -oldest | format-eventlog | less
    }

    # You have to enable the tasks log first as admin, see:
    # https://stackoverflow.com/q/13965997/262458
    function global:tasklog {
        get-winevent 'Microsoft-Windows-TaskScheduler/Operational' `
            -oldest | format-eventlog | less
    }

    function global:ntop {
        ntop.exe -s 'CPU%' @args
        if (-not $?) { write-error "exited: $lastexitcode" -ea stop }
    }

    function head_tail([scriptblock]$cmd, $arglist) {
        $lines = if ($arglist.length -and $arglist[0] -match '^-(.+)') {
            $null,$arglist = $arglist
            $matches[1]
        }
        else { 10 }

        if (!$arglist.length) {
            $input | &$cmd $lines
        }
        else {
            gc $arglist | &$cmd $lines
        }
    }

    function global:head {
        $input | head_tail { $input | select -first @args } $args
    }

    function global:tail {
        $input | head_tail { $input | select -last @args } $args
    }

    function global:touch {
        if (-not $args) { $args = $input }

        $args | %{ $_ } | %{
            if (test-path $_) {
                (gi $_).lastwritetime = get-date
            }
            else {
                ni $_ | out-null
            }
        }
    }

    function global:sudo {
        $cmd = [management.automation.invocationinfo].getproperty('ScriptPosition',
            [reflection.bindingflags] 'instance, nonpublic').getvalue($myinvocation).text -replace '^\s*sudo\s*',''

        ssh localhost -- "sl '$(get-location)'; $cmd"
    }

    function global:nproc {
        [environment]::processorcount
    }

    # To see what a choco shim is pointing to.
    function global:readshim {
        if (-not $args) { $args = $input }

        $args | %{ $_ } | %{ get-command $_ -commandtype application `
            -ea ignore } | %{ $_.source } | `
        # WinGet symlinks
        %{ if ($link_target = (gi $_).target) {
                $link_target | shortpath
            }
            # Scoop shims
            elseif (test-path ($shim = $_ -replace '\.exe$','.shim')) {
                gc $shim | %{ $_ -replace '^path = "([^"]+)"$','$1' } | shortpath
            }
            # Chocolatey shims
            elseif (&$_ --shimgen-help) {
                $_ | ?{ $_ -match "^ Target: '(.*)'$" } `
                   | %{ $matches[1] } | shortpath
            }
        }
    }

    function global:env {
        gci env: | sort name | %{
            "`${{env:{0}}}='{1}'" -f $_.name,$_.value
        }
    }

    # Tries to reset the terminal to a sane state, similar to the Linux reset
    # binary from ncurses-utils.
    function global:reset {
        [char]27 + "[!p"
        clear-host
    }

    if ((test-path ~/.tmux-pwsh.conf) -and (test-path /msys64/usr/bin/tmux.exe)) {
        function global:tmux {
            /msys64/usr/bin/tmux -f ~/.tmux-pwsh.conf @args
        }
    }
    elseif ((gcm -ea ignore wsl) -and (wsl -- ls '~/.tmux-pwsh.conf' 2>$null)) {
        function global:tmux {
            wsl -- tmux -f '~/.tmux-pwsh.conf' @args
        }
    }
}
elseif ($ismacos) {
    function global:ls {
        if (-not $args) { $args = $input }
        &(command ls) -Gh @args
        if (-not $?) { write-error "exited: $lastexitcode" -ea stop }
    }
}
elseif ($islinux) {
    function global:ls {
        if (-not $args) { $args = $input }
        &(command ls) --color=auto -h @args
        if (-not $?) { write-error "exited: $lastexitcode" -ea stop }
    }
}

if (-not (test-path function:global:grep) `
    -and (get-command -commandtype application grep -ea ignore) `
    -and ('foo' | ext_cmd_works (command grep) --color foo)) {

    function global:grep {
        $input | &(command grep) --color @args
        if (-not $?) { write-error "exited: $lastexitcode" -ea stop }
    }
}

rmalias gl
rmalias pwd

function global:gl { get-location | % path | shortpath }
function global:pwd { get-location | % path | shortpath }

function global:ltr { $input | sort lastwritetime }

function global:count { $input | measure | % count }

# Example utility function to convert CSS hex color codes to rgb(x,x,x) color codes.
function global:hexcolortorgb {
    if (-not ($color = $args[0])) {
        $color = $($input)
    }

    # Use $color so that pipeline input works as well as an argument.
    'rgb(' + ((($color -replace '^(#|0x)','' -split '(..)(..)(..)')[1,2,3] | %{ [uint32]"0x$_" }) -join ',') + ')'
}

function map_alias {
    $input | %{ $_.getenumerator() | %{
        $path = $_.value

        # Expand any globs in path.
        if ($parent = split-path -parent $path) {
            if ($parent = resolve-path $parent -ea ignore) {
                $path = join-path $parent (split-path -leaf $path)
            }
            else {
                return
            }
        }

        if ($cmd = get-command $path -ea ignore) {
            rmalias $_.key

            $type = $cmd.commandtype

            $cmd = if ($type `
                    -cmatch '^(Application|ExternalScript)$') {
                $cmd.source
            }
            elseif ($type -cmatch '^(Cmdlet|Function)$') {
                $cmd.name
            }
            else {
                throw "Cannot alias command of type '$type'."
            }

            set-alias $_.key -value $cmd -scope global
        }
    }}
}

if ($iswindows) {
    @{
        patch = '/prog*s/git/usr/bin/patch'
        wordpad = '/prog*s/win*nt/accessories/wordpad'
        ssh = '/prog*s/OpenSSH-*/ssh.exe'
        '7zfm' = '/prog*s/7-zip/7zfm.exe'
    } | map_alias
}

# Alias the MSYS2 environments if MSYS2 is installed.
if ($iswindows -and (test-path /msys64)) {
    function global:msys2 {
        $env:MSYSTEM = 'MSYS'
        /msys64/usr/bin/bash -l $(if ($args) { '-c',"$args" })
        ri env:MSYSTEM
    }

    function global:msys {
        $env:MSYSTEM = 'MSYS'
        /msys64/usr/bin/bash -l $(if ($args) { '-c',"$args" })
        ri env:MSYSTEM
    }

    function global:clang64 {
        $env:MSYSTEM = 'CLANG64'
        /msys64/usr/bin/bash -l $(if ($args) { '-c',"$args" })
        ri env:MSYSTEM
    }

    function global:ucrt64 {
        $env:MSYSTEM = 'UCRT64'
        /msys64/usr/bin/bash -l $(if ($args) { '-c',"$args" })
        ri env:MSYSTEM
    }

    function global:mingw64 {
        $env:MSYSTEM = 'MINGW64'
        /msys64/usr/bin/bash -l $(if ($args) { '-c',"$args" })
        ri env:MSYSTEM
    }

    function global:mingw32 {
        $env:MSYSTEM = 'MINGW32'
        /msys64/usr/bin/bash -l $(if ($args) { '-c',"$args" })
        ri env:MSYSTEM
    }
}

$cmds = @{}

foreach ($cmd in 'perl','diff','colordiff','tac') {
    $cmds[$cmd] = try {
        get-command -commandtype application,externalscript $cmd `
            -ea ignore | select -first 1 | % source
    }
    catch { $null }
}

# For diff on Windows install diffutils from choco.
#
# Clone git@github.com:daveewart/colordiff to ~/source/repos
# for colors.
if ($cmds.diff) {
    rmalias diff
    rmalias colordiff

    $cmd = $clone = $null
    $prepend_args = @()

    function global:diff {
        $args = $prepend_args,$args

        $rc = 2

        @( $input | &$cmd @args; $rc = $lastexitcode ) | less -Q -r -X -F -K --mouse

        if ($rc -ge 2) {
            write-error "exited: $rc" -ea stop
        }
    }

    $cmd = if ($cmds.colordiff) {
        $cmds.colordiff
    }
    elseif ($cmds.perl -and ($clone = resolve-path `
            ~/source/repos/colordiff/colordiff.pl `
            -ea ignore)) {
        $prepend_args = @($clone)
        $cmds.perl
    }
    else {
        $cmds.diff
    }

    if ($cmds.colordiff -or $clone) {
        set-alias -scope global colordiff -value diff
    }
}

@{
    vcpkg = '~/source/repos/vcpkg/vcpkg'
} | map_alias

if (-not $cmds.tac) {
    function global:tac {
        $file = if ($args) { gc $args } else { @($input) }

        $file[($file.count - 1) .. 0]
    }
}

# Aliases to pwsh Cmdlets/functions.
set-alias s -value select-object -scope global

# Remove duplicates from $env:PATH.
$env:PATH = (split_env_path | select -unique) -join $path_sep

} | import-module

# This is my posh-git prompt theme:
if (get-module -listavailable posh-git-theme-bluelotus) {
    import-module posh-git-theme-bluelotus

    # If you want the posh-git window title, uncomment this:
    #
    # $gitpromptsettings.windowtitle =
    #     $gitprompt_theme_bluelotus.originalwindowtitle;
}
elseif (get-command oh-my-posh -ea ignore) {
    oh-my-posh --init --shell pwsh `
        --config 'https://raw.githubusercontent.com/JanDeDobbeleer/oh-my-posh/main/themes/paradox.omp.json' | iex
}

if (-not (get-module posh-git) `
    -and (get-module -listavailable posh-git)) {
    import-module posh-git
}

if (-not (get-module psreadline)) {
    import-module psreadline
}

set-psreadlineoption -editmode emacs
set-psreadlineoption -historysearchcursormovestoend
set-psreadlineoption -bellstyle none

set-psreadlinekeyhandler -key tab -function complete
set-psreadlinekeyhandler -key uparrow -function historysearchbackward
set-psreadlinekeyhandler -key downarrow -function historysearchforward
set-psreadlinekeyhandler -chord 'ctrl+spacebar' -function menucomplete
set-psreadlinekeyhandler -chord 'alt+enter' -function addline

if ($private:posh_vcpkg = `
    resolve-path ~/source/repos/vcpkg/scripts/posh-vcpkg `
    -ea ignore) {
    import-module $posh_vcpkg
}

if ($private:src = `
    resolve-path $ps_config_dir/private-profile.ps1 `
    -ea ignore) {

    $global:profile_private = $src | shortpath

    . $profile_private
}

# vim:set sw=4 et:

This profile works for «Windows PowerShell» as well. But the profile is in a different file, so you will need to make a symlink there to your PowerShell $profile.

mkdir ~/Documents/WindowsPowerShell
ni -it sym ~/Documents/WindowsPowerShell/Microsoft.PowerShell_profile.ps1 -tar $profile

Be aware that if your Documents are in OneDrive, OneDrive will ignore and not sync symlinks.

This $profile also works for PowerShell for Linux and macOS.

The utility functions it defines are described here.

Setting up ssh

To make sure the permissions are correct on the files in your ~/.ssh directory, run the following:

&(resolve-path /prog*s/openssh*/fixuserfilepermissions.ps1)
import-module -force (resolve-path /prog*s/openssh*/opensshutils.psd1)
repair-authorizedkeypermission -file ~/.ssh/authorized_keys

Setting up and Using Git

Git Setup

You can copy over your ~/.gitconfig and/or run the following to set some settings I recommend:

# SET YOUR NAME AND EMAIL HERE:
git config --global user.name "John Doe"
git config --global user.email johndoe@example.com

git config --global core.autocrlf false
git config --global push.default simple
git config --global pull.rebase true
git config --global commit.gpgsign true

Using Git

Git usage from PowerShell is pretty much the same as on Linux, with a couple of caveats.

Arguments containing special characters like : or . must be quoted, for example:

git tag -s 'v5.41' -m'v5.41'
git push origin ':refs/heads/some-branch'

The .git directory is hidden. To see it, pass -Force to gci or gi, for example gci -Force.

NEVER run the command ri -r -fo * in a repository. On Linux, the * glob does not match dot files like .git, but on Windows it matches everything.

The command ri -r * is safe, because hidden files like .git are not affected without -Force.

Because .git is a hidden directory, this also means that to delete a cloned repository, you must pass -Force to Remove-Item, e.g. ri -r -fo followed by the repository directory.
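To see the effect of -Force on hidden items without touching a real repository, here is a small sketch that works in a scratch directory. The directory and file names are made up for illustration. The Hidden attribute is only set on Windows, where it is what hides .git; on Linux and macOS PowerShell treats dot files as hidden instead.

```powershell
# Scratch directory with a .git subdirectory (hypothetical names).
$demo = join-path ([io.path]::gettemppath()) "force-demo-$(get-random)"
$null = new-item -itemtype directory $demo
$null = new-item -itemtype directory (join-path $demo '.git')
$null = new-item -itemtype file (join-path $demo 'README.md')

# On Windows, hide .git the way git does.
if ($env:OS -eq 'Windows_NT') {
    $git_dir = get-item (join-path $demo '.git') -force
    $git_dir.attributes = $git_dir.attributes -bor [io.fileattributes]::Hidden
}

$visible = @(get-childitem -name $demo)          # hidden items are left out
$all     = @(get-childitem -name -force $demo)   # -Force includes them

# Removing the whole tree needs -Force because of the hidden directory.
remove-item -recurse -force $demo
```

The long parameter names are used here only to keep the sketch unambiguous; interactively the short forms work the same way.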

Dealing with Line Endings

With core.autocrlf set to false, the files in your checkouts will have UNIX line endings, but occasionally you need a project to have DOS line endings, for example if you use PowerShell scripts to edit the files in the project. In this case, it’s best to make a .gitattributes file in the root of your project and commit it, with a rule that sets the line ending for text files. Make sure to add exclusions for all binary file types you need.

This way, anyone cloning the repo will have the correct line endings.
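As a sketch of what such a file could contain, the following writes a .gitattributes into the current directory. The patterns are only illustrative assumptions: text, eol=crlf and binary are standard gitattributes settings, but the list of binary extensions has to match your own project.

```powershell
# Write an example .gitattributes (the extension list is an assumption).
@'
* text eol=crlf
*.png binary
*.jpg binary
*.zip binary
'@ | set-content .gitattributes

# Show what was written.
get-content .gitattributes
```

Later lines override earlier ones for the files they match, which is why the binary exclusions come after the catch-all rule.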

Setting up gpg

Make this symlink:

sl ~
mkdir .gnupg -ea ignore
cmd /c rmdir (resolve-path ~/AppData/Roaming/gnupg)
ni -it sym ~/AppData/Roaming/gnupg -tar (resolve-path ~/.gnupg)

Then you can copy your .gnupg over, without the socket files.

To configure git to use it, do the following:

git config --global commit.gpgsign true
git config --global gpg.program 'C:\Program Files (x86)\GnuPG\bin\gpg.exe'

Profile (Home) Directory Structure

Your Windows profile directory, analogous to a UNIX home directory, will usually be something like C:\Users\username; it may be on a server share if you are using a domain in an organization.

Both the automatic PowerShell variable $home and the environment variable $env:USERPROFILE contain the path to your profile directory. You can use the environment variable in things such as Explorer using the cmd syntax, e.g. try entering %USERPROFILE% in the Explorer address bar.

The ~/AppData directory is analogous to the Linux ~/.config

directory, except it has two parts, Local and Roaming. The

Roaming directory may be synced by various things across your

computers, and the Local directory is generally intended for your

specific computer configurations.

It is up to any particular application whether it uses the Local

or Roaming directory, or both, and for what. When backing up any

particular application configuration, check if it uses one or the

other or both.

The install script makes a ~/.config symlink pointing to ~/AppData/Local. This is adequate for some Linux ports such as Neovim.

There is one other important difference you must be aware of. When

you uninstall an application on Windows, it will often DELETE

its configuration directory or directories under ~/AppData. This

is one reason why in this guide I give instructions for making a

directory under your $home and symlinking the AppData directory

to it. Make sure you back up your terminal settings.json for this

reason as well.

PowerShell Usage Notes

Introduction

PowerShell is very different from POSIX shells, in both usage and

programming.

This section won’t teach you PowerShell, but it will give you enough

information to use it as a shell, write basic scripts and be a

springboard for further exploration.

You must be aware that when PowerShell is discussed, there are two

versions that are commonly used, Windows PowerShell or WinPS for

short, and PowerShell Core or PSCore for short.

Windows PowerShell is the standard powershell command in Windows,

and you can rely on it being installed on any Windows system. It is

currently version 5.1 of PowerShell with some extra patches and

backported security fixes by Microsoft.

PowerShell Core is the latest release from the open source

PowerShell project. Currently this is 7.2.1 but will almost

certainly be higher when you are reading this. If installed, it will

be available in $env:PATH as the pwsh command.

You can see your PowerShell version with:
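The built-in automatic variable $PSVersionTable holds this information. A short sketch:

```powershell
$PSVersionTable                        # full table: PSVersion, PSEdition, ...
$PSVersionTable.PSVersion.ToString()   # just the version number as a string
```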

Everything in this guide is compatible with both versions, except when I explicitly state that it isn’t.

WinPS is not as nice for interactive use as PSCore, but for writing

scripts and modules you have just about all the facilities of the

language available, except for a few new features like ternaries

which I won’t cover here. I recommend targeting WinPS for any

scripts or modules that you will write.

Finding Documentation

You can get a list of aliases with alias and look up specific aliases with e.g. alias ri. It allows globs, e.g. to see aliases starting with s do alias s*.

You can get help text for any Cmdlet via its long name or alias with

help -full <Cmdlet>. To use less instead of the default pager,

do e.g.: help -full gci | less.

In the $profile, less is set to the

default pager for help via $env:PAGER, and -full is enabled by

default via $PSDefaultParameterValues.

You can use tab completion to find help topics and search for

documentation using globs, for example to see a list of articles

containing the word «where»:
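A sketch of such a search, using the wildcard support of the standard Get-Help cmdlet (help is its paging wrapper). The pattern is simply the word from the sentence above:

```powershell
# List help topics whose names match the glob.
get-help *where* | select-object name, category

# Interactively, the same search through the pager would be:
#   help *where*
```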

The conceptual documentation not related to a specific command or function takes the form about_XXXXX, e.g. about_Operators. Modules you install will often also have such a document. To see a list do:
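A minimal sketch, again relying on Get-Help wildcards. Note that on a fresh PowerShell Core install the about_ topics may not be present until update-help has been run, so the list can be empty:

```powershell
# List the conceptual about_ topics that are installed.
get-help about_* | select-object name

# Interactively, through the pager:
#   help about_*
```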

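Run update-help once in a while to update all your help files.

You can get documentation for external utilities by passing their help switch, which for most Windows utilities is /?, and piping the output to less, for example icacls /? | less.

For documentation for cmd builtins, run help through cmd, for example cmd /c help for | less.

For the git man pages, use git help <command> to open the man page in your browser, e.g. git help config.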

Commands, Parameters and Environment

I suggest using the short forms of PowerShell aliases instead of the POSIX aliases; this forces your brain into PowerShell mode so you will mix things up less often. The exceptions are a couple of things that are easier to type, like mkdir, ps or kill, and some of the wrappers in the $profile.

Here are a few:

| PowerShell alias | Full Cmdlet + Params | POSIX command |
|---|---|---|
| sl | Set-Location | cd |
| gl | Get-Location | pwd |
| gci -n | Get-ChildItem -Name | ls |
| gci | Get-ChildItem | ls -l |
| gi | Get-Item | ls -ld |
| cpi | Copy-Item | cp -r |
| ri | Remove-Item | rm |
| ri -fo | Remove-Item -Force | rm -f |
| ri -r -fo | Remove-Item -Force -Recurse | rm -rf |
| gc | Get-Content | cat |
| mi | Move-Item | mv |
| mkdir | New-Item -ItemType Directory | mkdir |
| which (custom) | Get-Command | command -v, which |
| gci -r | Get-ChildItem -Recurse | find |
| gci -dir | Get-ChildItem -Directory | find -type d |
| ni | New-Item | touch |
| sls -ca | Select-String -CaseSensitive | grep |
| sls | Select-String | grep -i |
| gci -r \| sls -ca | Get-ChildItem -Recurse \| Select-String -CaseSensitive | grep -r |
| sort | Sort-Object | sort |
| sort -u | Sort-Object -Unique | sort -u |
| measure -l | Measure-Object -Line | wc -l |
| measure -w | Measure-Object -Word | wc -w |
| measure -c | Measure-Object -Character | wc -m |
| gc file \| select -first 10 | Get-Content file \| Select-Object -First 10 | head -n 10 file |
| gc file \| select -last 10 | Get-Content file \| Select-Object -Last 10 | tail -n 10 file |
| gc -wait -tail 20 some.log | Get-Content -Wait -Tail 20 some.log | tail -f -n 20 some.log |
| iex | Invoke-Expression | eval |

This will get you around and doing stuff; the usage is slightly different, however.

For one thing, commands like cpi (Copy-Item) take a list of files differently from POSIX: they must be a PowerShell list, which means separated by commas. For example, to copy file1 and file2 to dest-dir, you would do cpi file1,file2 dest-dir. To remove file1 and file2 you would do ri file1,file2.

You can list multiple globs in these lists as well as files and directories etc. Note that globs in PowerShell are case-insensitive.
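Here is a small sketch of comma-separated lists with cpi and ri, run in a scratch directory so that nothing real is touched. All file and directory names are made up for illustration.

```powershell
# Scratch directory (hypothetical names throughout).
$scratch = join-path ([io.path]::gettemppath()) "list-demo-$(get-random)"
$null = new-item -itemtype directory $scratch
pushd $scratch

$null = ni file1, file2, notes.txt, old.log
$null = new-item -itemtype directory dest-dir

cpi file1, file2 dest-dir     # copy two files: a comma-separated list
ri file1, file2               # remove the originals the same way
ri *.txt, *.log               # lists may contain globs too

$copied = @(gci -name dest-dir)   # file1 and file2
$left   = @(gci -name)            # only dest-dir remains

popd
ri -r -fo $scratch
```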

Also, unlike Linux, the * glob will match all files including

.dotfiles. Windows uses a different mechanism for hidden files,

see below.

PowerShell relies very heavily on tab completion, and just about

everything can be tab completed. The style I present here uses short

forms and abbreviations instead, when possible.

Tab completing directories and files with spaces in them can be annoying. For example, typing gci /pro and pressing <TAB> will show the completion C:\Program. If you want to complete C:\Program Files, type `<SPACE> and it will be completed with a starting quote. More on the ` escape character later.

For completing /Program Files it’s easier to use the DOS short alias /progra~1, and for /Program Files (x86) the /progra~2 alias.

The $profile defines the variable $ps_history for the command history file location, which is analogous to ~/.bash_history on Linux. You can view it with e.g. gc $ps_history | less.

Command-line editing and history search work about the same way as in bash. I have also set the PSReadLine options so that up arrow not only cycles through previous commands, but also lets you type the beginning of a previous command and cycle through matches.

For examining variables and objects, unlike in POSIX shells, a value will be formatted for output implicitly and you do not have to echo it. To write a message you can just use a string, and to examine a variable you can just input it directly, for example:

'Operation was successful!'
"The date today is: {0}" -f (get-date)
$profile
$env:PAGER

As you can see here, there is a difference between normal variables and environment variables, which are prefixed with env:, which is a PSDrive, more on that later.

Many commands you will use in PowerShell will, in fact, yield

objects that will use the format defined for them to present

themselves on the terminal. For example gci or gi. You can

change these formats too.

The Cmdlet Get-Command will tell you the type of a command, like

type on bash. To get the path of an executable use, e.g.:
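A sketch of this lookup, using the Source property of the object Get-Command returns. The command name pwsh is only an example and assumes PowerShell Core is on your PATH:

```powershell
# Path of the executable behind a command name.
(get-command pwsh).source

# Restrict the lookup to external programs, skipping aliases and functions:
(get-command pwsh -commandtype application | select-object -first 1).source
```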

The $profile which, type and command wrappers do this automatically.

Values, Arrays and Hashes

One very nice feature of PowerShell is that it very often allows you

to use single values and arrays interchangeably. Arrays are created

by using the , comma operator to list multiple values, or

assigning the result of a command that returns multiple values.

$val = 'a string'
$val.count # 1
$arr = 'foo','bar','baz'
$arr.count # 3
$val | %{ $_.toupper() } # A STRING
($arr | %{ $_.toupper() }) -join ',' # FOO,BAR,BAZ
$repos = gci ~/source/repos
$repos.count # 29

You usually do not have to do anything to work with an array value as opposed to a single value, but sometimes it is very useful to enclose values or commands in @(...) to coerce the result to an array. This will also exhaust any iterator-like objects such as $input into an immediate array value. $(...) will have the same effect, but it will not coerce single values to an array.
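The difference between the two forms is easiest to see on a single value. A minimal sketch, with a made-up variable name:

```powershell
$single = 'only one'

@($single).gettype().name   # Object[]: always an array
$($single).gettype().name   # String: a single value stays a single value

@($single).count            # 1
@('a','b').count            # 2
@().count                   # 0: an empty result is still an array
```

This is why @(...) is the safer choice when later code indexes into the result or relies on it being a collection.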

Occasionally you may want to write a long pipeline directly to a variable. You can use set-variable, which has the standard alias sv, to do this, for example:

gci /windows/system32/*.dll | % fullname | sv dlls
$dlls.count # 3652

Hashes can be defined and used like so:

$hash = @{
    foo = 'bar'
    bar = 'baz'
}
$hash.foo # bar
$hash.bar # baz
$hash['foo'] # bar
$hash.keys -join ',' # foo,bar
$hash.values -join ',' # bar,baz
$hash.getenumerator() | %{ "{0} = '{1}'" -f $_.key,$_.value }
# foo = 'bar'
# bar = 'baz'

To make an ordered hash do:

$ordered_hash = [ordered]@{
    some = 'val'
    other = 'val2'
}

Redirection, Streams, $input and Exit Codes

Redirection for files and commands works like in POSIX shells on a basic level, that is, you can expect >, >> and | to redirect files and commands like you would expect, for TEXT data. LF line ends will also generally get rewritten to CRLF, and sometimes an extra CRLF will be added to the end of the file/stream. See Dealing with Line Endings above for some ways to deal with this in git repos. You can also adjust line endings with the dos2unix and unix2dos commands.

The > redirection operator is a shorthand for the Out-File

command.

DO NOT redirect binary data, instead have the utility you are

using write the file directly.

The < operator is not yet available.

The streams 1 and 2 are SUCCESS and ERROR, they are

analogous to the STDOUT and STDERR file descriptors, and

generally work similarly and support the same redirection syntax.

PowerShell has many other streams, see:

help about_output_streams

There is no analogue to the STDIN stream. This gets quite complex because the pipeline paradigm is central in PowerShell.

For example, text data is generally broken up into string objects for each line. If you pipe to out-string they will be combined into one string object. Here is an illustration:

get-content try.ps1 | invoke-expression
# Throws various syntax errors.
get-content try.ps1 | out-string | invoke-expression
# Works correctly.
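The same effect can be seen by counting the objects that travel down the pipeline. A small sketch with made-up data:

```powershell
$lines = 'first line', 'second line', 'third line'

($lines | measure-object).count                # 3: one string object per line
($lines | out-string | measure-object).count   # 1: a single multi-line string
```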

There are many ways to handle pipeline input; the simplest and least reliable is the automatic variable $input, which I have used in the $profile for many things. Here is a stupid example:

function capitalize_foo {
    $input | %{ $_ -replace 'foo','FOO' }
}

If you want to test for the presence of pipeline input, you can use $myinvocation.expectinginput, for example:

function got_pipeline {
    if ($myinvocation.expectinginput) {
        'pipe'
    }
    else {
        'no pipe'
    }
}

The equivalent of /dev/null is $null, so a command such as cmd 2>/dev/null would be cmd 2>$null. While a command such as:

cmd >/dev/null 2>&1
# Or, using a non-POSIX bash feature:
cmd &>/dev/null

would generally be written as cmd *>$null, to silence all streams, including extra streams PowerShell has such as Verbose. If you just want to suppress the output (SUCCESS) stream, you would generally use cmd | out-null.

The ERROR stream also behaves quite differently from POSIX shells.
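To make the stream numbers concrete, here is a sketch with a hypothetical function, noisy, that writes to three different streams. The function exists only for this illustration.

```powershell
# Hypothetical function that writes to several streams.
function noisy {
    'result'                           # SUCCESS stream (1)
    write-warning 'careful'            # WARNING stream (3)
    write-verbose 'details' -verbose   # VERBOSE stream (4)
}

$quiet = noisy *>$null            # every stream discarded, nothing captured
$data  = noisy 3>$null 4>$null    # only warning and verbose output discarded

$null -eq $quiet   # True
$data              # result
```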

Both external commands and PowerShell functions and cmdlets indicate

success or failure via $?, which is $true or $false. For

external commands the actual exit code is available via

$LastExitCode.

However, PowerShell commands use a different mechanism to indicate

an error status. They throw an exception or write an error to the

ERROR stream, which is essentially the same thing, just resulting

in different types of objects being written to the ERROR stream.

You can examine the error/exception objects in the $error array,

for example:

write-error 'Something bad happened.'
$error[0]

Write-Error: Something bad happened.

PSMessageDetails :
Exception : Microsoft.PowerShell.Commands.WriteErrorException: Something bad happened.
...

As a consequence of both external commands and PowerShell functions/cmdlets setting $?, when you wrap an external command with a function, $? from the command execution will be reset by the function return. The best workaround I found for this so far is to throw a short error like this:

function cmd_wrapper {
    cmd @args
    if (-not $?) { write-error "exited: $LastExitCode" -ea stop }
}
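Here is a sketch of how a caller can react to such a wrapper. It assumes pwsh is on your PATH and uses it as a stand-in for any external command; the function name run_checked and the exit code 3 are arbitrary.

```powershell
# pwsh stands in for any external command here (an assumption).
function run_checked {
    pwsh -noprofile -command 'exit 3'
    if (-not $?) { write-error "exited: $LastExitCode" -ea stop }
}

# The terminating error can be handled like any other exception.
$message = try { run_checked } catch { "caught: $_" }

$message
$LastExitCode   # 3
```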

Now I must admit to lying to you previously: cmd 2>$null is not the same thing as suppressing STDERR in sh. For example, mkdir existing-dir 2>$null will still set error status, even though you see no output, and $error[0] will contain an empty error.

Even worse, this means that if you have:

$erroractionpreference = 'stop'

your script will terminate.

For native commands, it does in effect suppress STDERR, because they do not use this mechanism.

For PowerShell commands what you want to do instead is this:

mkdir existing-dir -ea ignore

This sets ErrorAction to Ignore, and does not trigger an error condition, and does not write an error object to ERROR.
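The difference shows up in the $error array. The sketch below uses New-Item directly rather than mkdir so that it behaves the same on every platform, and works on a made-up scratch directory. It assumes the default $ErrorActionPreference of Continue.

```powershell
# Scratch directory that already exists, so creating it again fails.
$dir = join-path ([io.path]::gettemppath()) "ea-demo-$(get-random)"
$null = new-item -itemtype directory $dir

$error.clear()
new-item -itemtype directory $dir 2>$null      # no output ...
$redirected_count = $error.count               # ... but the error was recorded

$error.clear()
new-item -itemtype directory $dir -ea ignore   # no output either, and
$ignored_count = $error.count                  # nothing was recorded

remove-item -recurse -force $dir
```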

Command/Expression Sequencing Operators

The operators ;, && and || will generally work how you expect

in sh, but there are some differences you should be aware of.

The ; operator can not only separate commands, but can also be

very useful to output multiple values (commands are also values.)

Both the ‘;’ and the ‘,’ operator will yield values, but sometimes

using the ‘,’ operator will limit the syntax you can use inside an

expression.

The ‘;’ operator will not work in a parenthesized expression, but

will work in value and array expressions $(...) and @(...). For

example:

# This will not work: (cmd; 'foo', 'bar') # This will work: $(cmd1; 'foo'; cmd2)

. The && and || operators are only available in PSCore, and their

semantics are different from what you would expect in sh and other

languages.

The do not work on $true/$false values, but on the $? variable

I described previously.

This variable is $true or $false based on whether the exit code

of an external command is zero or if a PowerShell function or cmdlet

executed successfully.

That is, applying these operators directly to $true/$false values

will not do what you might expect, but things like this will work fine:

cmake && ninja || write-error 'build failed'

. As I mentioned previously, since this is a PSCore feature, I do

not recommend using it in scripts or modules intended to be

distributed by themselves.
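
To illustrate the earlier point about ; inside $( ... ) and @( ... ), here is a small sketch. The functions cmd1 and cmd2 are hypothetical stand-ins for real commands.

```powershell
function cmd1 { 'one' }   # hypothetical stand-ins for real commands
function cmd2 { 'two' }

$values = $(cmd1; 'foo'; cmd2)      # three statements, three values
$array  = @(cmd1; 'foo', 'bar')     # ';' and ',' can be mixed inside @( ... )

$values -join ' '                   # one foo two
$array.Count                        # 3
```

Both forms work in Windows PowerShell as well as PSCore, unlike && and ||.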

Commands and Operations on Filesystems and Filesystem-Like Objects

The gci aka Get-ChildItem command is analogous to ls -l.

For ls -ltr use:

gci | sort lastwritetime
# Or my alias:
gci | ltr

. The command analogous to ls -1 would be gci -n

(aka -Name); it will list only file/directory/object names as

strings, which can be useful for long names or to pipe name strings

only to another command.

Get-ChildItem (gci) and Get-Item (gi) do not only operate

on filesystem objects, but on many other kinds of objects. For

example, you can operate on registry values like a filesystem, e.g.:

gi HKLM:/SOFTWARE/Microsoft/Windows/CurrentVersion
gci HKLM:/SOFTWARE/Microsoft/Windows/CurrentVersion | less

, here HKLM stands for the HKEY_LOCAL_MACHINE section of the

registry. HKCU stands for HKEY_CURRENT_USER.

You can go into these objects using sl (Set-Location) and work

with them similar to a filesystem. The properties displayed and

their contents will depend on the types of objects you are working

with.

You can get a list of «drive» type devices including actual drive

letters with Get-PSDrive. These also include variables, environment

variables, functions and aliases, and you can operate on them with

Remove-Item, Set-Item, etc.
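
As a rough sketch of treating these provider drives like a filesystem, the following sets, reads and removes an environment variable with the item cmdlets. DEMO_VAR is a made-up name used only for illustration.

```powershell
# List every drive, including provider drives such as Env:, Variable:, Function: and Alias:
Get-PSDrive | Select-Object Name, Provider

# DEMO_VAR is a made-up environment variable name
Set-Item env:DEMO_VAR 'hello'
$value = (Get-Item env:DEMO_VAR).Value

$haveAlias = Test-Path alias:gci        # aliases can be tested like files
Remove-Item env:DEMO_VAR
$stillThere = Test-Path env:DEMO_VAR    # False once the item is removed
```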

For actual Windows filesystems, the first column in directory

listings from gci or gi is the mode or attributes of the object.

The positions of the letters will vary, but here is their meaning:

| Mode Letter | Attribute Set On Object |
|---|---|
| l | Link |
| d | Directory |
| a | Archive |
| r | Read-Only |
| h | Hidden |
| s | System |

. To see hidden files, pass -Force to gci or gi:

gci -fo
gi -fo hidden-file

. The best way to manipulate these attributes is with the attrib

utility, for example, to make a file or directory hidden do:

attrib +h file
gi -fo file

, -Force is required for gci and gi to access hidden

filesystem objects.

To make this file visible again, do attrib -h file.

To make a symbolic link, do:

ni -it sym name-of-link -tar (resolve-path path-to-source)

. The alias ni is for New-Item. Make sure the path-to-source

is a valid absolute or relative path; you can use tab completion or

(resolve-path file) to ensure this. The source paths CANNOT

contain the ~ ($env:USERPROFILE) shortcut because this is specific

to PowerShell and not to the Windows operating system.

You must turn on Developer Mode to be able to make symbolic links

without elevation in PowerShell Core.

In Windows PowerShell, you must be elevated to make symbolic links

whether Developer Mode is enabled or not. But you can use:

cmd /c mklink <link> <target>

, without elevation if Developer Mode is enabled.

WARNING: Do not use ri to delete a symbolic link to a

directory in Windows PowerShell, do this instead:

cmd /c rmdir symlink-to-directory

, ri dirlink works fine in PowerShell Core.

My $profile functions mklink and

rmlink handle all of these details for you and work in both

versions of PowerShell and other OSes. The syntax for mklink is

the same as the cmd command, but you do not need to pass /D for

directory links, and the link argument is optional: by default, the

leaf of the target will be used as the link name.

For a find replacement, use the -Recurse flag to gci, e.g. gci -r *.ps1.

To search under a specific directory, specify the glob with

-Include, e.g. gci -r C:\Windows -include *.dll to find all DLL files

in all levels under C:\Windows.

Another useful parameter for the file operation commands is

-Exclude, which also takes globs, e.g.:

gci ~/source/repos -exclude vcpkg
gci -r /some/dir -exclude .*

.
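
The -Recurse, -Include and -Exclude parameters can be tried safely on a throwaway tree. This is a sketch only; the directory and file names are invented for the example.

```powershell
# Build a small scratch tree (names are made up)
$root = Join-Path ([IO.Path]::GetTempPath()) "find-demo-$PID"
New-Item -ItemType Directory (Join-Path $root 'sub') -Force | Out-Null
'a' | Set-Content (Join-Path $root 'one.txt')
'b' | Set-Content (Join-Path $root 'sub/two.txt')
'c' | Set-Content (Join-Path $root 'sub/three.log')

# Roughly: find $root -name '*.txt'
$txt = Get-ChildItem -Recurse $root -Include *.txt | ForEach-Object Name | Sort-Object

# Roughly: find $root -type f ! -name '*.log'
$notLog = Get-ChildItem -Recurse -File $root -Exclude *.log | ForEach-Object Name | Sort-Object

Remove-Item -Recurse $root
$txt -join ' '                      # one.txt two.txt
```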

Pipelines

PowerShell supports an amazing new system called the «object

pipeline». What this means is that you can pass objects around via

pipelines and inspect their properties, call methods on them, etc.

You’ve already seen some examples of this, and this is the central

paradigm in PowerShell for everything.

When you run a command in PowerShell from the terminal, there is an

implicit pipeline from the command to your terminal device. When the

objects from the command reach your terminal, the format objects for

terminal view are applied to them and they are printed.

Here is an example of using the object pipeline to recursively

delete all vim undo files:

. Here remove-item receives file objects from get-childitem and

deletes them.
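
A minimal sketch of such a pipeline, run against a scratch directory so nothing real is deleted. The file names are invented, and the *.un~ pattern assumes vim's default naming for undo files.

```powershell
# Scratch directory with one normal file and one vim-style undo file (made-up names)
$root = Join-Path ([IO.Path]::GetTempPath()) "undo-demo-$PID"
New-Item -ItemType Directory $root -Force | Out-Null
'text' | Set-Content (Join-Path $root 'notes.txt')
'undo' | Set-Content (Join-Path $root '.notes.txt.un~')

# -Force is needed because dotfiles count as hidden on Unix-like systems
Get-ChildItem -Recurse -Force -File $root -Include '*.un~' | Remove-Item -Force

$left = Get-ChildItem -Force $root | ForEach-Object Name
Remove-Item -Recurse -Force $root
$left                               # notes.txt
```

Adding -WhatIf to Remove-Item is a cheap way to preview what would be deleted before running it for real.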

To do the equivalent of a recursive grep you could do something

like:

gci -r *.[ch] | sls -ca void

. I prefer using ripgrep (rg command) for this purpose. To turn

off the highlighting in Select-String, use the

-noe (-NoEmphasis) flag. Be aware that Select-String will apply

an output format to its results and there will be extra blank lines

at the top and bottom among other things, so if you are going to use

them as text in a pipeline or redirect use the -raw flag.
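
Since Select-String emits match objects, another option is to pick the fields you need instead of relying on the formatted output. The sketch below uses invented sample files; Filename, LineNumber and Line are properties of the match objects.

```powershell
# Two made-up source files in a scratch directory
$root = Join-Path ([IO.Path]::GetTempPath()) "grep-demo-$PID"
New-Item -ItemType Directory $root -Force | Out-Null
"int main(void) {`n    return 0;`n}" | Set-Content (Join-Path $root 'main.c')
"VOID legacy(VOID);"                 | Set-Content (Join-Path $root 'legacy.h')

# Matches are objects: format the fields you want instead of parsing text
$hits = Get-ChildItem -Recurse $root -Include *.c, *.h |
    Select-String -CaseSensitive void |
    ForEach-Object { '{0}:{1}: {2}' -f $_.Filename, $_.LineNumber, $_.Line }

Remove-Item -Recurse $root
$hits                               # main.c:1: int main(void) {
```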

If the Cmdlet works on files, its input can be path strings as well, for

example:

gc file-list | cpi -r -dest e:/backup

, copies the files and directories listed in file-list to a

directory on a USB stick.

Most commands can accept pipeline input, even ones you wouldn’t

expect to, for example:

split-path -parent $profile | sl

, will enter your Documents PowerShell directory.

The help documentation for commands will generally state if they

accept pipeline input or not.
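
If the help files are not installed, the same information can be read from the command metadata. This sketch inspects the Path parameter of Set-Location, chosen here only because it appears in the example above.

```powershell
# Ask the command metadata whether a parameter binds from the pipeline
$attrs = (Get-Command Set-Location).Parameters['Path'].Attributes |
    Where-Object { $_ -is [System.Management.Automation.ParameterAttribute] }

$attrs | Select-Object ValueFromPipeline, ValueFromPipelineByPropertyName
```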

You can access the piped-in input in your own functions as the

special $input variable, like in some of the functions in the

$profile. This is the worst way to do

this, although it is the simplest; it's better to make an advanced

function with a process block, which I won't cover here yet.
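
For comparison, here is a minimal sketch of both approaches. The function names count_input and double_each are invented for this example and are not part of the $profile.

```powershell
# Simple function: $input enumerates everything that was piped in
function count_input { @($input).Count }

# Advanced function: the process block runs once per pipeline object
function double_each {
    [CmdletBinding()]
    param([Parameter(ValueFromPipeline)][int]$Number)
    process { $Number * 2 }
}

1..3 | count_input      # 3
1..3 | double_each      # 2 4 6
```

The process block form streams: each result is emitted as soon as its input object arrives, rather than after all input has been collected.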

Here is a more typical example of a pipeline:

get-process | ?{ $_.name -notmatch 'svchost' } | %{ $_.name } | sort -u

. Here ?{ ... } is like a filter/grep block while %{ ... } is like

an apply/map block.

In PowerShell pipelines you will generally be working with object

streams and their properties rather than lines of text. And, as I

mentioned, lines of text are actually string objects anyway. I will

describe a few tricks for doing this here.

You can use the % property shorthand to select a single property

from an object stream, for example, gci | % name

will do the same thing as gci -n. The input does not have to be

a stream of multiple objects, using this on a single object will

work just fine.
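
A small sketch of the shorthand on both a stream and a single object. The objects are made up to stand in for gci output.

```powershell
# Made-up objects standing in for gci output
$items = @(
    [pscustomobject]@{ Name = 'a.txt'; Length = 120 }
    [pscustomobject]@{ Name = 'b.bin'; Length = 4096 }
)

$items | % name               # stream of objects -> a.txt b.bin
$items[0] | % length          # a single object works too -> 120
$items | %{ $_.name }         # the longer script-block form of the first line
```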

This will get the full paths of the files in a directory:

gci ~/source/pwsh/*.ps1 | % fullname

. This also works with ? aka Where-Object, which has parameters

mimicking PowerShell operators, allowing you to do things like

gci | ? length -lt 1000, which will show all filesystem objects less

than 1000 bytes.

Or, for example:

get-process | ? name -match 'win'

. There are many useful parameters to the select aka

Select-Object command for manipulating object streams, including

-first and -last as you saw for the head/tail equivalents,

as well as -skip, -skiplast, -unique, -index, -skipindex

and -expand. The last one, -expand, will select a property from

the objects selected and further expand it for objects and arrays.

For a contrived example:

gci ~/Downloads/*.zip | sort length | select -skiplast 1 `
    | select -last 1 | % fullname

, will give me the name of the second biggest .zip file in my

~/Downloads folder.

I have aliased Select-Object to s in the

$profile as many people do to save you

some typing.
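
The Select-Object parameters mentioned above are easiest to see on a plain range of numbers. This is just an illustrative sketch; -skipindex is left out because it is not available in Windows PowerShell.

```powershell
$n = 1..10

$first   = $n | select -first 3          # 1 2 3
$last    = $n | select -last 2           # 9 10
$skipped = $n | select -skip 8           # 9 10
$trimmed = $n | select -skiplast 8       # 1 2
$picked  = $n | select -index 0, 4       # 1 5
$unique  = 1, 1, 2, 3, 3 | select -unique    # 1 2 3

# -expand pulls a property out and unrolls it if it is an array
$tags = [pscustomobject]@{ Tags = 'a', 'b' } | select -expand Tags   # a b
```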

If you want to inspect the properties available on an object and

their current values, you can use select * e.g.:

.
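
As a sketch, using Get-Date only because it is available everywhere; Get-Member is shown as a complementary way to list just the property names.

```powershell
# Every property of a single object, with current values
Get-Date | Select-Object *

# Property names only, handy when the full listing is long
$names = (Get-Date | Get-Member -MemberType Property).Name
$names
```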

The Measure-Object Cmdlet

The equivalent of wc -l file to count lines is gc file | measure -l,

while -w will count words and -c will count characters. You

can combine any of the three in one command; the output is a table.

To get just the number of lines, you can do this:

gc file | measure -l | % lines

. Note that if you are working with objects and not lines of text,

measure -l will still do what you expect, but it’s better to do

something like:

gci | measure | % count
# Or with my $profile function:
gci | count

. This is essentially the same thing, because lines of text in

PowerShell pipelines are actually string objects, as I already

mentioned at least 3 times.
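
A short sketch of both uses, with the parameter names spelled out in full. The two strings are sample data standing in for lines read with gc.

```powershell
$text = 'one two', 'three'             # two string objects, like two lines from gc

$m = $text | Measure-Object -Line -Word -Character
'{0} lines, {1} words, {2} characters' -f $m.Lines, $m.Words, $m.Characters

$count = @(1, 2, 3) | measure | % count    # counting objects rather than lines -> 3
```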

Sub-Expressions and Strings

The POSIX command substitution syntax $( ... ) allows inserting the result of

an expression in a string or in some other contexts, for example:

"This file contains $(gc README.md | measure -l | % lines) lines."

. Executing an external command is also an expression, that returns

string objects for the lines outputted, which gives you essentially

the same thing as POSIX command substitution.

The @( ... ) syntax works identically to the $( ... ) syntax to

evaluate expressions, however, it cannot be used in a string by

itself and will always result in an array even for one value.
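
The difference between the two forms shows up when there is only one value. A minimal sketch:

```powershell
$one  = $( 'only' )        # a single value stays a scalar
$many = @( 'only' )        # @( ... ) always wraps the result in an array

$one.GetType().Name        # String
$many.GetType().Name       # Object[]

# @( ... ) cannot be interpolated on its own, but it can sit inside $( ... )
$message = "There are $(@('a', 'b', 'c').Count) items."
$message                   # There are 3 items.
```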

When not inside a string, you can simply use parentheses, and when

assigning to variables you need nothing at all, for example:

$date = get-date
vim (gci -r *.ps1)

. For string values, it can be nicer to use formats, e.g.:

"This shade of {0} is the hex code #{1:X6}." -f 'Blue',13883343
"Today is: {0}." -f (get-date)

. See the about_Operators help topic for more about the -f format operator.
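
A few more format strings, as an illustrative sketch. The format specifiers are the standard .NET ones; the values are arbitrary.

```powershell
$hex     = '{0:X6}' -f 13883343               # hexadecimal, at least 6 digits -> D3D7CF
$padded  = '{0:D3}' -f 7                      # zero-padded integer -> 007
$aligned = '[{0,6}] [{1,-6}]' -f 'ab', 'cd'   # right- and left-aligned in 6 columns
$swapped = '{1} before {0}' -f 'a', 'b'       # arguments can be reordered -> b before a

$hex, $padded, $aligned, $swapped
```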

Variables can also be interpolated in strings just like in POSIX

shells, for example:

$greeting = 'Hello'
$name = 'Fred'
"${greeting}, $name"

. In PowerShell, the backtick ` is the escape character, and you

can use it at the end of a line, escaping the line end as a line

continuation character. In regular expressions, the backslash \ is

the escape character, like everywhere else.
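
The following sketch puts interpolation, the backtick escape and line continuation side by side. The variable names are arbitrary.

```powershell
$name = 'Fred'

$plural  = "${name}s"           # braces mark where the variable name ends -> Freds
$literal = "`$name is $name"    # a backtick keeps the first $ literal -> $name is Fred
$single  = '$name'              # single-quoted strings never interpolate -> $name

# A trailing backtick continues the statement on the next line
$sum = 1 + `
    2
```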

The backtick can also be used to escape nested double quotes, but

not single quotes, for example "this `"is`" a test".

PowerShell also allows escaping double and single quotes by using

two consecutive quote characters, for example:

"this ""is"" a test"
'this ''is'' a test'

. The backtick is also used for special character sequences, here are

some useful ones:

| Sequence | Character |
|---|---|
| `n | Newline |
| `r | Carriage Return |
| `b | Backspace |
| `t | Tab |
| `u{hex code} | Unicode Character by Hex Code Point |
| `e | Escape (not supported by «Windows PowerShell») |
| `0 | Null |
| `a | Alert (bell) |

.

For example, this will print an emoji between two blank lines,

indented by a tab:

.
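
One way to write such a string, as a sketch. The code point 1F600 (grinning face) is an arbitrary choice, and the `u{...} sequence requires PowerShell 6 or later.

```powershell
# `u{...} requires PowerShell 6 or later; 1F600 is an arbitrary emoji
$banner = "`n`t`u{1F600}`n"
$banner

# A variant that also works in Windows PowerShell
$portable = "`n`t" + [char]::ConvertFromUtf32(0x1F600) + "`n"
$portable
```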

Script Blocks and Scopes

A section of PowerShell code is usually represented by a Script

Block, a function is a Script Block, or any code between { ... }

braces, such as for %{ ... } aka ForEach-Object or ?{ ... }

aka Where-Object. Script Blocks have their own dynamic child

scope; that is, new variables defined in them are not visible to the

parent scope, and are freed if and when the Script Block is

released.
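
A minimal sketch of the child scope, using a Script Block run with the call operator &. The variable and function names are invented for the example.

```powershell
$outer = 'parent value'

function show_outer { $outer }      # not defined here, so it is looked up in the caller's scope

$seenInChild = & {
    $outer = 'child value'          # shadows the parent variable inside this block only
    $onlyInChild = 1                # new variable, gone when the block returns
    show_outer                      # dynamic scope: sees 'child value'
}

$seenInChild                        # child value
$outer                              # parent value
Test-Path variable:onlyInChild      # False
```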

Script Blocks can be assigned to variables and passed to functions,

like lambdas or function pointers in other languages. Unlike

lambdas in most languages, Script Blocks do not have lexical closure

semantics; PowerShell uses dynamic scope. You can, however, use a

module to get an effect

similar to closure semantics, I use this in the

$profile. For example:

new-module SomeName -script { ... code here ... } | import-module