I have a GTX 1050 Ti in my laptop, and when I run torch.cuda.is_available() it returns False.

nvcc --version

C:\WINDOWS\system32>nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2020 NVIDIA Corporation

Built on Thu_Jun_11_22:26:48_Pacific_Daylight_Time_2020

Cuda compilation tools, release 11.0, V11.0.194

Build cuda_11.0_bu.relgpu_drvr445TC445_37.28540450_0

My nvidia-smi

Sun Feb 7 04:16:50 2021

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 461.40 Driver Version: 461.40 CUDA Version: 11.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name TCC/WDDM | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 GeForce GTX 105... WDDM | 00000000:01:00.0 Off | N/A |

| N/A 41C P8 N/A / N/A | 78MiB / 4096MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

I have installed PyTorch both in an Anaconda virtual environment and using the install command from the PyTorch website with CUDA version 11.0 selected.


If you’re a data scientist or software engineer working with deep learning frameworks, you’re likely familiar with PyTorch. PyTorch is a popular open-source machine learning library that provides a flexible and efficient platform for building and training deep neural networks. It’s known for its ease of use, dynamic computation graphs, and support for both CPU and GPU acceleration.

One of the key benefits of using PyTorch is its ability to leverage GPU acceleration to speed up training and inference. However, if you’re running PyTorch on Windows 10 and you’ve installed a compatible CUDA driver and GPU, you may encounter an issue where torch.cuda.is_available() returns False. This can be frustrating, as it means that PyTorch is not able to use your GPU for acceleration.

In this article, we’ll explore some common causes of this issue and provide some troubleshooting steps to help you get PyTorch running on your GPU.

Table of Contents

- Check Your CUDA Version

- Check Your GPU Drivers

- Check Your Environment Variables

- Check Your PyTorch Installation

- Best Practices

- Conclusion

Check Your CUDA Version

The first thing to check is whether the CUDA version your PyTorch build targets is compatible with your GPU and driver. Each PyTorch binary is built against a specific CUDA version, and a mismatch with what your driver supports can cause torch.cuda.is_available() to return False.

To check your CUDA version, you can run the following command in a command prompt or PowerShell window:

nvcc --version

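This will display the version of the CUDA toolkit that is installed on your system. Note that the prebuilt PyTorch packages from pip and conda ship their own CUDA runtime, so the version that matters most is the one PyTorch was built with (torch.version.cuda), and your NVIDIA driver must support it. You can check the PyTorch documentation to see which versions of CUDA are offered for your version of PyTorch. If your combination is not supported, you will need to install a compatible PyTorch build or update your driver.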
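As a rough illustration, the release number can be extracted from the `nvcc --version` output with a few lines of Python. This is a minimal sketch, not official tooling: the helper name `parse_nvcc_release` is hypothetical, and the sample text is the output quoted at the top of this page.

```python
import re

# Sample output copied from the report at the top of this page.
SAMPLE_NVCC_OUTPUT = """\
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2020 NVIDIA Corporation
Built on Thu_Jun_11_22:26:48_Pacific_Daylight_Time_2020
Cuda compilation tools, release 11.0, V11.0.194
"""


def parse_nvcc_release(text):
    """Return the toolkit release (e.g. "11.0") found in nvcc output, or None."""
    match = re.search(r"release\s+(\d+\.\d+)", text)
    return match.group(1) if match else None


print(parse_nvcc_release(SAMPLE_NVCC_OUTPUT))  # prints 11.0
```

The value can then be compared by eye with `torch.version.cuda`, which reports the CUDA version the installed PyTorch build targets.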

Check Your GPU Drivers

Another possible cause of torch.cuda.is_available() returning False is outdated or incompatible GPU drivers. PyTorch requires a compatible NVIDIA GPU driver to be installed in order to use GPU acceleration. If your driver is outdated or incompatible, PyTorch may not be able to detect your GPU.

To check your GPU driver version, you can run the following command in a command prompt or PowerShell window:

nvidia-smi

This will display information about your NVIDIA GPU, including the driver version. You can then check the NVIDIA website to see if there is a newer version of the driver available for your GPU. If there is, you should download and install it.
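The header of the `nvidia-smi` output carries two numbers worth reading carefully: the driver version and a "CUDA Version". The latter is the highest CUDA version the driver supports, not the toolkit that is installed. The sketch below pulls both out of the header line quoted at the top of this page; the helper name `parse_smi_header` is made up for this example.

```python
import re

# Header line copied from the nvidia-smi output quoted at the top of this page.
SAMPLE_HEADER = "| NVIDIA-SMI 461.40 Driver Version: 461.40 CUDA Version: 11.2 |"


def parse_smi_header(line):
    """Return (driver_version, max_supported_cuda) from the nvidia-smi header."""
    driver = re.search(r"Driver Version:\s*([\d.]+)", line)
    cuda = re.search(r"CUDA Version:\s*([\d.]+)", line)
    return (
        driver.group(1) if driver else None,
        cuda.group(1) if cuda else None,
    )


driver, max_cuda = parse_smi_header(SAMPLE_HEADER)
print(driver, max_cuda)  # prints 461.40 11.2
```

In that report the driver supports CUDA up to 11.2 while the toolkit is 11.0, so the driver itself is new enough for a CUDA 11.0 build of PyTorch.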

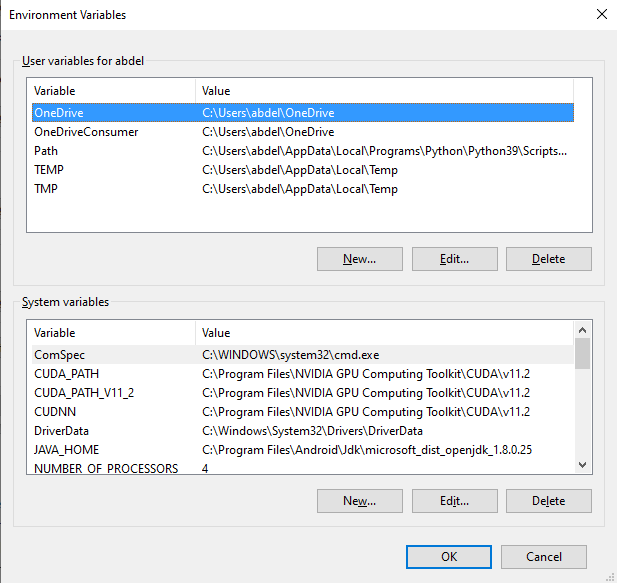

Check Your Environment Variables

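CUDA tooling relies on several environment variables to locate the CUDA libraries and other dependencies. The prebuilt pip and conda packages of PyTorch bundle their own CUDA runtime and cuDNN, so these variables matter mainly when you build PyTorch or CUDA extensions from source. If they are not set correctly in such a setup, PyTorch may not be able to detect your GPU.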

To check your environment variables, you can open the Start menu and search for “Environment Variables”. This will open the System Properties window, where you can click the “Environment Variables” button.

In the Environment Variables window, you should see a list of system variables and user variables. Look for the following variables:

- CUDA_PATH: This should be set to the path where CUDA is installed, such as C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0.

- CUDNN: This should be set to the path where the cuDNN library is installed, such as C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.0\cuDNN\bin.

If any of these variables are missing or set incorrectly, you should update them to reflect the correct paths.
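The same check can be scripted instead of clicking through the System Properties dialog. The following is a small sketch that assumes the two variable names mentioned above; the function name `report_cuda_env` is hypothetical, and whether your setup needs these variables at all depends on how CUDA and PyTorch were installed.

```python
import os

# Variable names taken from the text above.
EXPECTED_VARS = ("CUDA_PATH", "CUDNN")


def report_cuda_env(env=os.environ):
    """Map each expected variable to a short status string."""
    report = {}
    for name in EXPECTED_VARS:
        value = env.get(name)
        if value is None:
            report[name] = "not set"
        elif not os.path.isdir(value):
            report[name] = f"set to {value!r}, but that directory does not exist"
        else:
            report[name] = f"ok ({value})"
    return report


for name, status in report_cuda_env().items():
    print(f"{name}: {status}")
```

Passing a plain dictionary instead of `os.environ` makes it easy to try the function with made-up values.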

Check Your PyTorch Installation

Finally, it’s possible that there is an issue with your PyTorch installation itself. If PyTorch is not installed correctly or is missing dependencies, it may not be able to detect your GPU.

To check your PyTorch installation, you can run the following command in a Python shell:

import torch

print(torch.__version__)

This will display the version of PyTorch that is installed on your system. You can then check the PyTorch documentation to see if there are any known issues with your version of PyTorch. If there are, you may need to update or reinstall PyTorch.
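The version string alone rarely explains a False result, so it can help to print a few related values in one go. Below is a minimal diagnostic sketch; the function name `collect_torch_info` is invented for this example, while the attributes it reads (`torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()`, `torch.cuda.device_count()`) are part of PyTorch.

```python
def collect_torch_info():
    """Gather the values most relevant to a False is_available() result."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}
    return {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version this build targets; None for CPU-only builds.
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
        "device_count": torch.cuda.device_count(),
    }


for key, value in collect_torch_info().items():
    print(f"{key}: {value}")
```

If `built_with_cuda` prints `None`, the installed package is a CPU-only build and no driver or toolkit change will make `torch.cuda.is_available()` return True.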

Best Practices

If you want to ensure a smooth experience with PyTorch GPU acceleration on Windows 10 and minimize the chances of encountering issues with torch.cuda.is_available(), consider the following best practices:

Maintain Compatibility

- Regularly check and ensure that your PyTorch version is compatible with the CUDA version installed on your system. Refer to the PyTorch documentation for the recommended CUDA versions.

- Keep an eye on PyTorch releases and updates to stay current with the latest features and bug fixes.

Regularly Update GPU Drivers

- Check for and install the latest NVIDIA GPU drivers compatible with your GPU model. Outdated or incompatible drivers can lead to detection issues.

- Visit the official NVIDIA website periodically to check for driver updates and install them as needed.

Environment Variables Configuration

- Double-check and update the necessary environment variables, such as CUDA_PATH and CUDNN, to point to the correct installation paths. Incorrect or missing environment variables can hinder PyTorch from detecting your GPU.

- Ensure that the paths are set consistently with your CUDA installation.

Use Virtual Environments

- Consider working within a virtual environment for your PyTorch projects. Virtual environments help isolate dependencies and avoid conflicts that may arise from different project requirements.

- Use tools like virtualenv or conda to create isolated environments for your projects.

Verify Dependencies Installation

- When setting up a new environment or installing PyTorch, double-check that all dependencies, including CUDA and cuDNN, are correctly installed.

- Follow the official installation guides provided by PyTorch and NVIDIA to ensure a proper setup.

Conduct System Checks

- Before starting a new project or making significant changes, run system checks to verify the compatibility of your CUDA version, GPU drivers, and PyTorch installation.

- Use the provided commands (nvcc --version, nvidia-smi, and import torch) to confirm that everything is in order.

Conclusion

If you’re experiencing issues with torch.cuda.is_available() returning False in Windows 10, there are several possible causes that you should investigate. By checking your CUDA version, GPU drivers, environment variables, and PyTorch installation, you can identify and resolve the issue so that you can take advantage of GPU acceleration in PyTorch.

Remember to always check the documentation for PyTorch and your GPU drivers to ensure compatibility and avoid any potential issues. With the right setup and troubleshooting steps, you can unlock the full potential of PyTorch for your deep learning projects.


Torch CUDA is_available False: What It Means and How to Fix It

If you’re trying to use CUDA with PyTorch and get the error “CUDA is_available False”, don’t panic. This is a common error that can be fixed in a few simple steps.

In this article, we’ll explain what the error means and how to fix it. We’ll also provide some tips for troubleshooting CUDA-related issues.

So, if you’re seeing the “CUDA is_available False” error, read on for help!

What Does the Error Mean?

The “CUDA is_available False” error means that CUDA is not available on your system. This could be for a number of reasons, such as:

- You don’t have a CUDA-enabled GPU.

- Your CUDA driver is not up to date.

- You have installed a CPU-only build of PyTorch.

How to Fix the Error

To fix the “CUDA is_available False” error, you need to identify the cause of the error and take steps to fix it. Here are a few things you can try:

- Check if you have a CUDA-enabled GPU. You can do this by looking at the specifications of your GPU. If your GPU does not have a CUDA-compatible architecture, you will not be able to use CUDA with PyTorch.

- Update your NVIDIA driver. You can download the latest driver from the NVIDIA website. Once you have installed it, restart your computer and try running your PyTorch code again.

- Install a CUDA-enabled build of PyTorch. CUDA support is determined by the build you install, not by a runtime switch, so a CPU-only build always reports False. Use the install selector on the PyTorch website to get a build for your CUDA version, and see the [PyTorch documentation](https://pytorch.org/docs/stable/notes/cuda.html) for how CUDA is used.

Once you have taken steps to fix the cause of the error, you should be able to run your PyTorch code with CUDA.

Tips for Troubleshooting CUDA-Related Issues

If you’re still having trouble getting CUDA to work with PyTorch, here are a few tips that may help:

- Run `python -m torch.utils.collect_env`. This prints the PyTorch version, the CUDA version it was built with, and the detected GPU and driver, which can help you identify the source of the problem.

- Use the `torch.cuda.is_available()` function to check if CUDA is available. This function will return a boolean value indicating whether or not CUDA is available on your system.

- Use the `torch.cuda.device_count()` function to get the number of CUDA-enabled GPUs on your system. This function will return an integer value indicating the number of GPUs that are available for use with CUDA.

By following these tips, you can troubleshoot CUDA-related issues and get your PyTorch code running with CUDA.

| Keyword | Data | Information |

|---|---|---|

| torch.cuda.is_available | False | CUDA is not available on this system. |

What does torch.cuda.is_available() do?

The `torch.cuda.is_available()` function checks whether a CUDA-enabled GPU is available on the system. If a CUDA-enabled GPU is available, the function returns `True`. Otherwise, the function returns `False`.

Why might torch.cuda.is_available() return False?

There are a few reasons why `torch.cuda.is_available()` might return `False`, even if a CUDA-enabled GPU is installed on the system.

- The CUDA driver is not installed. The CUDA driver is a software component that allows the operating system to communicate with the GPU. If the CUDA driver is not installed, `torch.cuda.is_available()` will return `False`, even if a CUDA-enabled GPU is installed.

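- The PyTorch build and the CUDA setup do not match. The CUDA toolkit is a collection of tools that allow developers to write CUDA programs. Prebuilt PyTorch packages bundle the CUDA runtime they need, so a separate toolkit is usually not required; what matters is that the installed build has CUDA support and that the driver supports the CUDA version it targets. A missing or mismatched toolkit mainly matters if you compile PyTorch or CUDA extensions yourself.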

- The CUDA-enabled GPU is not selected for the application. On some systems with both integrated and NVIDIA graphics, the NVIDIA GPU may not be the one chosen for a program. To select it, open the NVIDIA Control Panel and select Manage 3D Settings. Under the Program Settings tab, select the Add button and browse to the Python executable that runs PyTorch. Then, under the preferred graphics processor setting, select High-performance NVIDIA processor and click OK.

- The CUDA-enabled GPU is hidden from the current process. The `CUDA_VISIBLE_DEVICES` environment variable controls which GPUs a process can see. If it is set to an empty value or to an index that does not exist, `torch.cuda.is_available()` returns `False`. To expose a specific GPU, open a terminal window and type the following command (this is the Linux shell form; in a Windows command prompt, use `set` instead of `export`):

export CUDA_VISIBLE_DEVICES=0

where `0` is the index of the CUDA-enabled GPU that you want to use.
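To make the effect of `CUDA_VISIBLE_DEVICES` more concrete, here is a simplified model of how a value made of integer indices is interpreted. It is only a sketch under stated assumptions: the function name `visible_device_indices` is hypothetical, GPU UUIDs and duplicate entries are not handled, and the real logic lives in the CUDA driver.

```python
def visible_device_indices(value, total_gpus):
    """Simplified model: which physical GPU indices a process would see.

    Handles only comma-separated integer indices (no GPU UUIDs).
    """
    if value is None:
        # Variable not set: every GPU is visible.
        return list(range(total_gpus))
    visible = []
    for token in value.split(","):
        token = token.strip()
        if not token.isdigit() or int(token) >= total_gpus:
            # An invalid entry hides itself and everything listed after it.
            break
        visible.append(int(token))
    return visible


print(visible_device_indices("0", 1))   # prints [0]
print(visible_device_indices("", 1))    # prints []
print(visible_device_indices(None, 2))  # prints [0, 1]
```

The second call shows a common pitfall: an empty value hides every GPU, and in that situation `torch.cuda.is_available()` reports False even though the hardware and driver are fine.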

In this article, we discussed the `torch.cuda.is_available()` function. We learned that this function checks whether a CUDA-enabled GPU is available on the system. We also discussed some of the reasons why `torch.cuda.is_available()` might return `False`, even if a CUDA-enabled GPU is installed on the system.

What can you do if torch.cuda.is_available() returns False?

If torch.cuda.is_available() returns False, it means that CUDA is not available on your system. There are a few things you can do to try to fix this:

- Check your CUDA installation. Make sure that you have installed an NVIDIA driver that supports your CUDA version on your operating system. You can find the latest drivers for Windows and Linux on the NVIDIA website.

- Make sure that your PyTorch installation was built with CUDA. CPU-only builds of PyTorch exist, and they always report that CUDA is unavailable. You can pick a CUDA-enabled build by following the installation instructions on the PyTorch website.

- Check your CUDA device. Make sure that you have a CUDA-compatible GPU installed on your system. You can check your GPU’s CUDA compatibility by running the following command:

nvidia-smi

If your GPU is not CUDA-compatible, you will need to purchase a new GPU or use a different machine that has a CUDA-compatible GPU.

If you have tried all of these steps and CUDA is still not available, you may need to contact NVIDIA support for help.

How to check if CUDA is available on Windows, macOS, and Linux?

To check if CUDA is available on your system, you can use the following commands:

Windows:

nvcc --version

macOS:

/usr/local/cuda/bin/nvcc --version

Linux:

/usr/local/cuda/bin/nvcc --version

If CUDA is installed and available, you will see a version number printed to the console. If CUDA is not installed or available, you will see an error message.
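The lookup can also be done from Python in a way that does not depend on the platform-specific path. This is a minimal sketch using only the standard library; the function name `nvcc_version_output` is an illustrative choice.

```python
import shutil
import subprocess


def nvcc_version_output():
    """Return the text printed by `nvcc --version`, or None if nvcc is not on PATH."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True)
    return result.stdout


output = nvcc_version_output()
print(output if output is not None else "nvcc not found on PATH")
```

A missing `nvcc` only means the CUDA toolkit is not on the PATH. Prebuilt PyTorch packages bundle their own CUDA runtime, so this alone does not explain a False result from `torch.cuda.is_available()`.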

Here are some additional resources that you may find helpful:

- [NVIDIA’s CUDA documentation](https://docs.nvidia.com/cuda/)

- [PyTorch’s documentation on CUDA support](https://pytorch.org/docs/stable/cuda.html)

- [The official PyTorch forum](https://discuss.pytorch.org/)

In this tutorial, we learned how to check if CUDA is available on Windows, macOS, and Linux. We also learned what to do if torch.cuda.is_available() returns False.


Q: What does it mean when `torch.cuda.is_available()` returns False?

A: This means that there is no CUDA-capable device available on your system. This could be because you don’t have a CUDA-capable GPU, or because your CUDA driver is not installed correctly.

Q: How can I check if I have a CUDA-capable GPU?

A: You can check if you have a CUDA-capable GPU by running the following command:

nvidia-smi

This will list the NVIDIA GPUs that the driver can see. If the command is missing or lists no GPU, either no NVIDIA GPU is present or the driver is not installed correctly.

Q: How can I install the CUDA driver?

A: You can install the CUDA driver from the NVIDIA website.

Q: How can I use CUDA with PyTorch?

A: To use CUDA with PyTorch, you need to first install the NVIDIA driver and a CUDA-enabled build of PyTorch. You then move your tensors and models to the GPU, for example with `.to("cuda")`. If you have several GPUs, you can restrict which ones PyTorch sees by setting the `CUDA_VISIBLE_DEVICES` environment variable. For example, to use only the first CUDA device, you would set the environment variable to `CUDA_VISIBLE_DEVICES=0`.

Q: I’m getting an error when I try to use CUDA with PyTorch. What should I do?

A: If you’re getting an error when you try to use CUDA with PyTorch, there are a few things you can check. First, make sure that you have installed the NVIDIA driver correctly. Second, make sure that your PyTorch build includes CUDA support. Finally, make sure that the CUDA version your PyTorch build targets is supported by your driver.

Q: I’m still having trouble using CUDA with PyTorch. Can you help me?

A: If you’re still having trouble using CUDA with PyTorch, you can post a question on the PyTorch forums or the PyTorch Discord server. There are also a number of resources available online that can help you troubleshoot CUDA problems.

In this blog post, we discussed how to troubleshoot the error torch.cuda.is_available() is False. We first introduced the causes of this error and then provided several solutions to fix it. We hope that this blog post has been helpful and that you are now able to successfully use PyTorch with CUDA.

Here are some key takeaways from this blog post:

- The error torch.cuda.is_available() is False can occur for a variety of reasons, including:

  - CUDA is not installed on your system.

  - The CUDA version that is installed on your system is not compatible with the version of PyTorch that you are using.

  - The CUDA driver that is installed on your system is not compatible with the version of PyTorch that you are using.

- To troubleshoot this error, you can try the following solutions:

  - Check to make sure that CUDA is installed on your system.

  - Check to make sure that the CUDA version that is installed on your system is compatible with the version of PyTorch that you are using.

  - Check to make sure that the CUDA driver that is installed on your system is compatible with the version of PyTorch that you are using.

  - Try using a different version of PyTorch.

  - Try using a different CUDA driver.

If you are still having trouble after trying these solutions, you can consult the PyTorch documentation or the PyTorch forums for more help.


What it does

- Returns a boolean: torch.backends.cuda.is_built() returns True if your PyTorch build includes CUDA support, and False otherwise.

- Checks for CUDA build: This function checks if the PyTorch library you’re using was compiled with the necessary components to utilize CUDA. CUDA is a parallel computing platform and API developed by NVIDIA that allows you to use their GPUs for accelerated computing.

Why it matters

- Debugging: When setting up your environment or troubleshooting GPU-related problems, this function can be a valuable diagnostic tool.

- Error prevention: If you try to run GPU-accelerated code with a CPU-only PyTorch build, you’ll encounter errors. Using torch.backends.cuda.is_built() beforehand can help you avoid such issues.

- GPU availability: Before you try to run any PyTorch code that uses your GPU, you need to know if CUDA support is actually included in your PyTorch installation. This function helps you confirm that.

Important Note

- Built, not necessarily available: Even if torch.backends.cuda.is_built() returns True, it doesn’t guarantee that CUDA is actually available on your system. You still need to have a compatible NVIDIA GPU, the correct drivers, and the CUDA toolkit installed. You can further check if CUDA is truly available using torch.cuda.is_available().

import torch

if torch.backends.cuda.is_built():
    print("PyTorch was built with CUDA support!")
    if torch.cuda.is_available():
        print("CUDA is also available on this machine!")
        # Proceed with GPU-accelerated code
    else:
        print("CUDA is built in but not available on this machine.")
else:
    print("PyTorch was NOT built with CUDA support.")

torch.backends.cuda.is_built() returns False

- Problem: This indicates your PyTorch installation doesn’t have CUDA support. You’ll get errors if you try to use GPUs.

- Troubleshooting

  - Verify CUDA Installation: Double-check that you have a compatible NVIDIA GPU, the correct NVIDIA driver, and the CUDA Toolkit installed on your system. The CUDA Toolkit version should be compatible with your PyTorch version (check PyTorch’s website for compatibility information).

  - Reinstall PyTorch (with CUDA): The most common solution is to reinstall PyTorch, ensuring you select the correct CUDA version during installation. If you’re using conda, use the appropriate CUDA specifier (e.g., conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia). If using pip, you’ll likely need to find the correct wheel with CUDA support. Look at the PyTorch website for installation instructions.

  - Check Environment Variables: Ensure that the CUDA environment variables (like PATH and LD_LIBRARY_PATH) are set correctly. This is particularly important on Linux systems.

  - Incorrect Installation Method: If you built PyTorch from source, review the build instructions carefully. You need to have CUDA properly configured during the build process.

  - Conflicting Installations: Sometimes, multiple CUDA installations can interfere. Try cleaning up old CUDA installations before reinstalling.
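One quick hint about whether a CPU-only package was installed is the suffix of the version string. Wheels from the PyTorch package index commonly carry a local version tag such as `+cpu` or `+cu118`; conda packages often have no such tag, so treat this as a heuristic. The sketch below assumes that naming convention, and the function name `build_flavor` and the sample version strings are illustrative only.

```python
import re


def build_flavor(version_string):
    """Classify a torch.__version__ string by its local-version suffix."""
    _, _, suffix = version_string.partition("+")
    if suffix == "cpu":
        return "cpu-only"
    # Tags like "cu118" encode the CUDA version: all digits but the last
    # are the major version, the last digit is the minor version.
    match = re.fullmatch(r"cu(\d+)(\d)", suffix)
    if match:
        return f"cuda {match.group(1)}.{match.group(2)}"
    return "unknown"


print(build_flavor("2.1.0+cpu"))    # prints cpu-only
print(build_flavor("2.1.0+cu118"))  # prints cuda 11.8
print(build_flavor("1.7.1"))        # prints unknown
```

When the result is "unknown", `torch.version.cuda` and `torch.backends.cuda.is_built()` remain the more reliable checks.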

torch.backends.cuda.is_built() returns True, but torch.cuda.is_available() returns False

- Problem: PyTorch thinks it was built with CUDA, but it can’t actually find or use a CUDA-enabled GPU.

- Troubleshooting

  - Driver Issues: The most common cause is an issue with the NVIDIA driver. Try reinstalling or updating your NVIDIA driver. Ensure the driver version is compatible with your CUDA Toolkit version.

  - GPU Not Detected: Check if your GPU is being recognized by your system. On Linux, use lspci | grep NVIDIA. On Windows, check the Device Manager.

  - CUDA Toolkit Path: Ensure that the CUDA Toolkit’s path is correctly configured in your environment variables.

  - Multiple CUDA Installations: Again, multiple CUDA installations can cause conflicts. Try to clean up any redundant CUDA installations.

  - Virtual Environments: If you’re using virtual environments, ensure that the CUDA drivers and toolkit are accessible within the environment.

  - Insufficient Resources: In rare cases, the GPU might be in use by another process or have insufficient resources.
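The two checks can be combined into a small decision helper that points at the right group of troubleshooting steps. This is only a sketch of that reasoning; the function name `diagnose` and the wording of the messages are invented for this example.

```python
def diagnose(is_built, is_available):
    """Map the two check results to the most likely area to investigate."""
    if not is_built:
        return "CPU-only build: reinstall PyTorch with CUDA support."
    if not is_available:
        return ("CUDA build, but no usable GPU: check the driver, "
                "GPU detection, and CUDA_VISIBLE_DEVICES.")
    return "CUDA build and usable GPU: remaining errors are runtime issues."


# With PyTorch installed, the inputs would come from
# torch.backends.cuda.is_built() and torch.cuda.is_available().
print(diagnose(True, False))
```

Separating the two cases avoids reinstalling PyTorch when the real problem is the driver, and the other way around.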

Errors during GPU usage even if both functions return True

- Problem: You get CUDA-related errors (e.g., out of memory, runtime errors) when you try to run code on the GPU.

- Troubleshooting

  - Out of Memory: Reduce batch size, use gradient accumulation, or try model parallelism to reduce memory usage.

  - CUDA Errors: Check the specific error message for clues. It might indicate issues with kernel launches, memory access, or other CUDA-related problems. Ensure your code is correctly using CUDA tensors (e.g., tensor.cuda()).

  - Code Issues: Double-check your code for any errors related to GPU usage. Make sure you’re moving data and models to the GPU correctly.
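For the out-of-memory case, reducing the batch size can be automated with a simple retry loop. The sketch below is framework-agnostic and makes some assumptions: `run_with_backoff` and `fake_step` are hypothetical names, and out-of-memory errors are recognized by the text "out of memory" in a `RuntimeError` message, which is how PyTorch words them.

```python
def run_with_backoff(step, batch_size, min_batch_size=1):
    """Call step(batch_size), halving the batch size after each out-of-memory error."""
    while True:
        try:
            step(batch_size)
            return batch_size
        except RuntimeError as err:
            is_oom = "out of memory" in str(err).lower()
            if not is_oom or batch_size <= min_batch_size:
                raise  # Not an OOM error, or nothing left to reduce.
            batch_size = max(min_batch_size, batch_size // 2)


# Stand-in for a real training step: pretends more than 8 samples do not fit.
def fake_step(batch_size):
    if batch_size > 8:
        raise RuntimeError("CUDA out of memory (simulated)")


print(run_with_backoff(fake_step, 64))  # prints 8
```

In a real training loop, `step` would run one forward and backward pass, and it is usually worth calling `torch.cuda.empty_cache()` before retrying.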

- Simplify: Create a minimal reproducible example to isolate the problem. This will make it easier to debug.

- Search Online Forums: Search for similar issues on forums like Stack Overflow. You might find solutions that are specific to your situation.

- Consult PyTorch Documentation: The official PyTorch documentation is your best friend. Look for troubleshooting guides and CUDA-specific instructions.

- Check PyTorch Version: Make sure your PyTorch version is up-to-date. Newer versions often have better CUDA support and bug fixes.

Basic CUDA Check and Device Selection

import torch

if torch.backends.cuda.is_built():
    print("PyTorch was built with CUDA support!")
    if torch.cuda.is_available():
        print("CUDA is available on this machine!")
        device = torch.device("cuda")  # Use the first available GPU
        # Or, specify a GPU index if you have multiple:
        # device = torch.device("cuda:1")  # Use the second GPU (index 1)

        # Example usage:
        x = torch.tensor([1, 2, 3]).to(device)  # Move tensor to GPU
        print(f"Tensor on device: {x}")
        model = torch.nn.Linear(3, 4).to(device)  # Move model to GPU
        print(f"Model on device: {next(model.parameters()).device}")
    else:
        print("CUDA is built in, but no CUDA GPUs are available.")
        device = torch.device("cpu")  # Fallback to CPU
else:
    print("PyTorch was NOT built with CUDA support. Using CPU.")
    device = torch.device("cpu")

# ... rest of your code ...
# Now use the 'device' variable to place your tensors and models:
# model = MyModel().to(device)
# data = data.to(device)
# outputs = model(data)

- This code first checks if PyTorch was built with CUDA support using torch.backends.cuda.is_built().

- If CUDA is built, it then checks if a CUDA-enabled GPU is actually available using torch.cuda.is_available().

- The device variable is then set to either "cuda" (if available) or "cpu". This is crucial because you’ll use this device variable throughout your code to place tensors and models on the correct hardware.

- The example shows how to move a tensor and a model to the selected device.

- If CUDA is not available, the code falls back to using the CPU. This ensures your code can still run, albeit slower.

Handling Multiple GPUs

import torch

if torch.backends.cuda.is_built() and torch.cuda.is_available():
    num_gpus = torch.cuda.device_count()
    print(f"Found {num_gpus} CUDA GPUs.")
    if num_gpus > 1:
        # Use DataParallel for multi-GPU training (simple but less flexible):
        # model = torch.nn.DataParallel(MyModel())  # Wrap your model
        # model.to(device)  # Move to the default device (all GPUs)

        # More flexible approach:
        devices = [torch.device(f"cuda:{i}") for i in range(num_gpus)]
        print(f"Using devices: {devices}")

        # Example: Distribute data across GPUs (simplified)
        # Assuming 'data' is a tensor:
        # data_chunks = torch.chunk(data, num_gpus)
        # for i, chunk in enumerate(data_chunks):
        #     chunk = chunk.to(devices[i])
        #     # ... process data on each GPU ...
    else:
        device = torch.device("cuda:0")  # Use single GPU
        model = torch.nn.Linear(3, 4).to(device)  # Stand-in for your own model
elif torch.backends.cuda.is_built():
    print("CUDA built, but no GPUs available. Using CPU.")
    device = torch.device("cpu")
else:
    print("CUDA not built. Using CPU.")
    device = torch.device("cpu")

- This example demonstrates how to handle multiple GPUs.

- It gets the number of available GPUs using torch.cuda.device_count().

- It shows how to use torch.nn.DataParallel (a simple way to parallelize your model across GPUs, but less flexible) or how to create a list of device objects for more fine-grained control.

- The example illustrates (in a simplified way) how you might distribute data across multiple GPUs. In a real training loop, you’d likely use a DataLoader with appropriate samplers for more efficient data distribution.

- Clear Device Selection: The code explicitly sets the device variable, making it easy to manage whether you’re using a GPU or CPU.

- Fallback to CPU: The code gracefully handles cases where CUDA is not available, ensuring your program can still run on the CPU.

- Multi-GPU Handling: The second example shows how to work with multiple GPUs, a crucial aspect of efficient deep learning.

- Comments: The code is well-commented to explain each step.

Combining Checks and Simplifying

Instead of separate checks, you can combine them:

import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

if device.type == "cuda":
    print(f"Using CUDA device: {torch.cuda.get_device_name(device)}")  # Get GPU name
    # ... any CUDA-specific setup ...
elif device.type == "cpu":
    print("Using CPU.")
    # ... any CPU-specific setup ...

# Now use the 'device' variable.
# The model and data below are stand-ins for your own:
model = torch.nn.Linear(3, 4).to(device)
data = torch.randn(2, 3).to(device)
outputs = model(data)

This is the most common and recommended approach. It’s concise and directly sets the device based on CUDA availability. torch.cuda.is_available() implicitly checks if CUDA is built and if a CUDA device is usable.

try-except Block (Less Common, but Useful for Specific Errors)

You can use a try-except block to catch potential CUDA-related errors:

import torch

try:
    device = torch.device("cuda")  # Try to use CUDA
    # Attempt CUDA operations here (e.g., creating a tensor on CUDA)
    torch.randn(1, device=device)  # Test if CUDA is truly working
    print(f"Using CUDA device: {torch.cuda.get_device_name(device)}")
except (RuntimeError, AssertionError) as e:
    # RuntimeError covers driver and device problems; CPU-only builds
    # raise AssertionError ("Torch not compiled with CUDA enabled").
    print(f"CUDA error: {e}")
    device = torch.device("cpu")
    print("Falling back to CPU.")

model = torch.nn.Linear(3, 4).to(device)  # Stand-in for your own model
# ... rest of your code ...

This is useful if you want to handle specific CUDA errors (like "out of memory") differently. However, the first method (combining checks) is generally preferred for simple availability checks.

Environment Variables (For Advanced Control)

You can use environment variables to control device selection:

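import os

# Set CUDA_VISIBLE_DEVICES to choose specific GPUs (e.g., "0" for the first GPU),
# or leave it unset to use all available GPUs. It has to be set before CUDA is
# initialized, so the safest place is before importing torch:
# os.environ["CUDA_VISIBLE_DEVICES"] = "0"

import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# ... rest of the code as before ...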

This is useful for more advanced scenarios, such as distributed training or when you need to control which GPUs are visible to your process.

No Direct Alternative for is_built() (But Usually Not Needed)

There isn’t a direct replacement for torch.backends.cuda.is_built() if you’re already using torch.cuda.is_available(). is_available() implicitly checks if CUDA is built. So, in most cases, you don’t need is_built() separately.

- Prefer torch.device objects: Always use torch.device objects to represent your desired device (e.g., device = torch.device("cuda:0"), device = torch.device("cpu")). This makes your code more readable and maintainable.

- Consider environment variables: For more advanced control over GPU selection, use the CUDA_VISIBLE_DEVICES environment variable.

- Handle potential errors: While the combined check is usually sufficient, consider using try-except blocks if you need to handle specific CUDA errors.

- Use torch.cuda.get_device_name(device): This prints the name of the selected GPU, which is helpful for debugging and logging.

- Avoid redundant checks: If you’re using torch.cuda.is_available(), you don’t need torch.backends.cuda.is_built() in most cases.

- Use the combined check: device = torch.device("cuda" if torch.cuda.is_available() else "cpu") is the most straightforward and recommended way to handle device selection.

The “torch.cuda.is_available() returns False” error is one of the most annoying errors to come across, especially when using Docker.

Fixing The “torch.cuda.is_available() returns False” Error

We must follow the below steps in order to fix the error:

1. Find the “CUDA Version” by running nvidia-smi. We should see something similar to this:

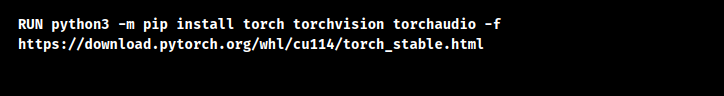

2. Pull Docker images for nvidia/cuda with tags matching the “CUDA Version” from above. The versions of the nvidia/cuda image may be lower than that of CUDA installed on the host, but not higher. If we use an image other than one provided by nvidia/cuda, we are responsible for installing dependencies, etc.

3. Install a PyTorch version that corresponds to the CUDA version installed on the host in the Docker container.

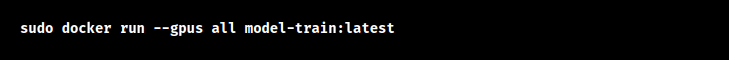

4. With docker run, pass the --gpus flag (for example, --gpus all) so that the container can access the GPU.

5. Run the following code to see if a container can see the GPU as well:

That code should produce the same results within the container and on the host.
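The version rule from step 2 and the flag from step 4 can be sketched in a few lines of Python. This is an illustration under assumptions, not the commands from the original article: the helper names are hypothetical, the image tag is only an example that may not exist in the registry, and the comparison implements the "not higher than the host" rule exactly as stated in step 2.

```python
def parse_version(text):
    """Turn "11.2" or "11.2.2" into a (major, minor) tuple."""
    return tuple(int(part) for part in text.split(".")[:2])


def image_is_compatible(host_cuda, image_cuda):
    """True if the image's CUDA version is not higher than the host's."""
    return parse_version(image_cuda) <= parse_version(host_cuda)


def docker_gpu_check_command(image):
    """Build a `docker run` command that runs nvidia-smi inside the container."""
    return ["docker", "run", "--rm", "--gpus", "all", image, "nvidia-smi"]


HOST_CUDA = "11.2"  # "CUDA Version" reported by nvidia-smi on the host
IMAGE_CUDA = "11.0.3"
IMAGE = f"nvidia/cuda:{IMAGE_CUDA}-base-ubuntu20.04"  # example tag, verify it exists

if image_is_compatible(HOST_CUDA, IMAGE_CUDA):
    print(" ".join(docker_gpu_check_command(IMAGE)))
else:
    print("Pick an image with a CUDA version no higher than the host's.")
```

The printed command can be run in a terminal. Inside a container that has PyTorch installed, `python -c "import torch; print(torch.cuda.is_available())"` is a reasonable follow-up check.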


Conclusion

To sum up, this article explained how to fix the “torch.cuda.is_available() returns False” error in Docker in 5 steps.
