With PowerShell, you can determine the exact size of a specific folder in Windows, including all nested subfolders. This lets you find out the folder size without third-party utilities such as TreeSize or WinDirStat. PowerShell also gives you more flexibility in choosing which files (by type or size) are counted when calculating the folder size.

The following PowerShell cmdlets are used to get the sizes of files in folders:

- Get-ChildItem (alias gci) lists the files in a directory, including nested objects
- Measure-Object (alias measure) adds up the sizes of all files to get the total size of the directory

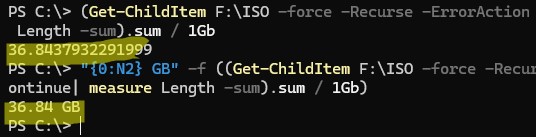

For example, to get the total size of the F:\ISO directory in GB, run:

```powershell
(Get-ChildItem F:\ISO -Force -Recurse -ErrorAction SilentlyContinue | measure Length -Sum).Sum / 1GB
```

The parameters used:

- -Force: include hidden and system files
- -Recurse: also list files in nested subdirectories
- -ErrorAction SilentlyContinue: ignore objects the current user has no access to
- measure Length -Sum: add up the sizes of all files (the Length property)
- .Sum / 1GB: output only the total size in GB
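The command and parameters above can be wrapped in a small reusable function. This is only a sketch: the name Get-FolderSizeBytes is hypothetical, and the function adds -File (so only files are measured) and returns 0 instead of an empty value for a folder without files.

```powershell
# Hypothetical helper: total size in bytes of all files under $Path,
# including hidden/system files and files in nested subfolders.
function Get-FolderSizeBytes {
    param(
        [Parameter(Mandatory = $true)]
        [string]$Path
    )
    # -LiteralPath: folder names containing [ ] are not treated as wildcards.
    # -File skips directory entries; inaccessible items are skipped silently.
    $sum = (Get-ChildItem -LiteralPath $Path -File -Force -Recurse -ErrorAction SilentlyContinue |
        Measure-Object -Property Length -Sum).Sum
    # The sum can come back empty for a folder with no files, so normalize it to 0
    if ($null -eq $sum) { return [int64]0 }
    return [int64]$sum
}
```

For example, Get-FolderSizeBytes -Path F:\ISO returns the total in bytes, which you can then divide by 1GB.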

In the example above, the F:\ISO directory is about 37 GB. This is the logical size of the files, so NTFS compression is not taken into account. To round the result to two decimal places, run:

```powershell
"{0:N2} GB" -f ((Get-ChildItem F:\ISO -Force -Recurse -ErrorAction SilentlyContinue | measure Length -Sum).Sum / 1GB)
```

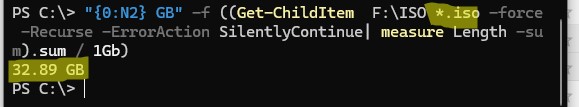

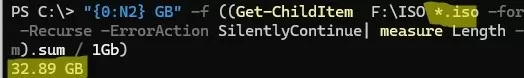
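The -f operator formats numbers using the current culture, so on a system with Russian regional settings the command above prints a decimal comma instead of a point. If the value goes into a log or is parsed by another script, a culture-independent variant may be preferable. A minimal sketch; Format-SizeGB is a hypothetical helper name:

```powershell
# Hypothetical helper: formats a byte count as gigabytes with two decimal places.
# The invariant culture always uses "." for decimals and "," for thousands.
function Format-SizeGB {
    param([double]$Bytes)   # $null (e.g. the Sum for an empty folder) becomes 0
    $gb = $Bytes / 1GB
    return $gb.ToString('N2', [System.Globalization.CultureInfo]::InvariantCulture) + ' GB'
}
```

Usage: Format-SizeGB -Bytes (Get-ChildItem F:\ISO -Force -Recurse -ErrorAction SilentlyContinue | measure Length -Sum).Sum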

PowerShell can also return the total size of all files of a particular type in a directory. For example, to find out how much space ISO files take up, add the *.iso filter:

```powershell
"{0:N2} GB" -f ((Get-ChildItem F:\ISO *.iso -Force -Recurse -ErrorAction SilentlyContinue | measure Length -Sum).Sum / 1GB)
```

You can use other filters to select the files that are counted when calculating the directory size. For example, to get the size of files created during 2023:

```powershell
(gci -Force F:\ISO -Recurse -ErrorAction SilentlyContinue | ? { $_.CreationTime -ge [datetime]'2023-01-01' -and $_.CreationTime -lt [datetime]'2024-01-01' } | measure Length -Sum).Sum / 1GB
```
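The date filter can also be wrapped in a function. This is a minimal sketch; the name Get-FolderSizeForYear and its -DateProperty parameter are hypothetical. It uses a half-open range (from January 1 up to, but not including, January 1 of the next year), so files created at any time on December 31 are still counted.

```powershell
# Hypothetical helper: total size of files whose timestamp falls within one calendar year.
function Get-FolderSizeForYear {
    param(
        [Parameter(Mandatory = $true)][string]$Path,
        [Parameter(Mandatory = $true)][int]$Year,
        [ValidateSet('CreationTime', 'LastWriteTime', 'LastAccessTime')]
        [string]$DateProperty = 'CreationTime'
    )
    # Half-open range [Jan 1 of $Year, Jan 1 of $Year + 1)
    $from = [datetime]::new($Year, 1, 1)
    $to = $from.AddYears(1)
    $sum = (Get-ChildItem -LiteralPath $Path -File -Force -Recurse -ErrorAction SilentlyContinue |
        Where-Object { $_.$DateProperty -ge $from -and $_.$DateProperty -lt $to } |
        Measure-Object -Property Length -Sum).Sum
    if ($null -eq $sum) { return [int64]0 }
    return [int64]$sum
}
```

Usage: Get-FolderSizeForYear -Path F:\ISO -Year 2023 returns the size in bytes of the files created in 2023.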

The commands above return incorrect results if the directory contains symbolic or hard links. For example, the C:\Windows directory contains many hard links to files in the WinSxS component store, so such files may be counted several times. To exclude hard links from the result, use the following command (it takes quite a long time to run):

```powershell
"{0:N2} GB" -f ((gci -Force C:\Windows -Recurse -ErrorAction SilentlyContinue | Where-Object { $_.LinkType -notmatch "HardLink" } | measure Length -Sum).Sum / 1GB)
```

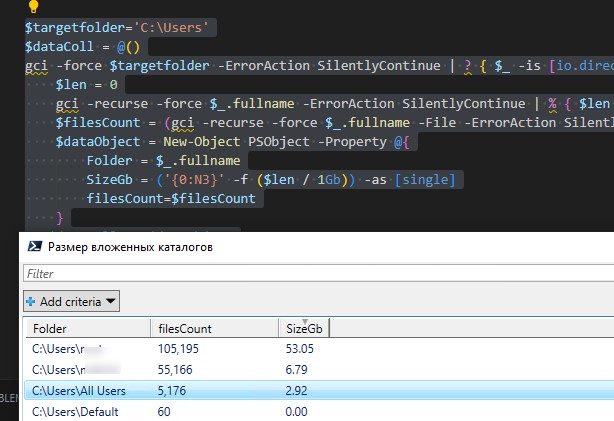

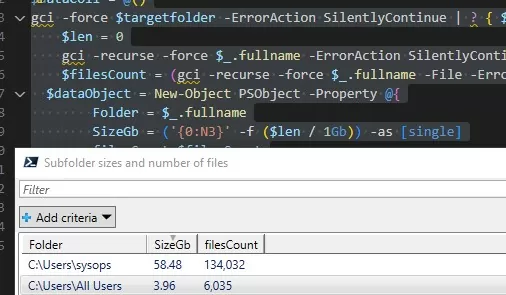
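A quick way to see the double counting for yourself is to create a hard link in a temporary folder. The sketch below assumes PowerShell 5.0 or later (where New-Item supports -ItemType HardLink) and a temporary folder on a file system that supports hard links, such as NTFS. The 1 MB file is counted twice, although its data is stored on disk only once.

```powershell
# Demo: a file plus one extra hard link to it is counted twice by the naive sum.
# Everything happens in a throwaway temp folder that is deleted at the end.
$demo = Join-Path ([System.IO.Path]::GetTempPath()) ("hardlink-demo-" + [guid]::NewGuid())
New-Item -ItemType Directory -Path $demo | Out-Null
$original = Join-Path $demo 'data.bin'
[System.IO.File]::WriteAllBytes($original, (New-Object byte[] 1MB))
# Second directory entry pointing to the same data (no extra disk space is used)
New-Item -ItemType HardLink -Path (Join-Path $demo 'data-link.bin') -Value $original | Out-Null

$naiveBytes = (Get-ChildItem -LiteralPath $demo -File -Force -Recurse |
    Measure-Object -Property Length -Sum).Sum
"Naive total: $naiveBytes bytes for $(1MB) bytes of actual data"

Remove-Item -LiteralPath $demo -Recurse -Force
```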

Display the size of all first-level subfolders of a directory and the number of files in each of them (in this example, the script shows the size of every user profile in C:\Users):

```powershell
$targetfolder = 'C:\Users'
$dataColl = @()
gci -Force $targetfolder -ErrorAction SilentlyContinue | ? { $_ -is [IO.DirectoryInfo] } | % {
    $folder = $_
    # One recursive pass over the subfolder: Count = number of files, Sum = total bytes
    $stats = gci -Recurse -Force -File -LiteralPath $folder.FullName -ErrorAction SilentlyContinue |
        Measure-Object -Property Length -Sum
    $dataColl += [PSCustomObject]@{
        Folder     = $folder.FullName
        # [math]::Round avoids a culture-dependent '{0:N3}' string round trip
        SizeGb     = [math]::Round([double]$stats.Sum / 1GB, 3)
        filesCount = [int]$stats.Count
    }
}
$dataColl | Out-GridView -Title "Subfolder sizes and number of files"
```

% is an alias for the ForEach-Object cmdlet.

The script displays an Out-GridView table listing the directories, their sizes, and the number of files in each of them. You can sort the directories in the table by size. You can also export the results to a CSV file (| Export-Csv file.csv) or to an Excel file.
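Out-GridView needs a Windows desktop session. On a server without a GUI, or to keep the report, you can build the same rows and pipe them to Export-Csv. A sketch under these assumptions; the function name Get-SubfolderReport and its column names are hypothetical:

```powershell
# Hypothetical helper: one row per first-level subfolder with its size and file count,
# largest subfolder first.
function Get-SubfolderReport {
    param([Parameter(Mandatory = $true)][string]$Path)
    Get-ChildItem -LiteralPath $Path -Directory -Force -ErrorAction SilentlyContinue |
        ForEach-Object {
            # A single recursive pass per subfolder gives both the file count and the byte total
            $stats = Get-ChildItem -LiteralPath $_.FullName -File -Force -Recurse -ErrorAction SilentlyContinue |
                Measure-Object -Property Length -Sum
            [PSCustomObject]@{
                Folder     = $_.FullName
                SizeBytes  = [int64]$stats.Sum
                FilesCount = [int]$stats.Count
            }
        } |
        Sort-Object -Property SizeBytes -Descending
}
```

Usage: Get-SubfolderReport -Path C:\Users | Export-Csv -Path folder_size.csv -NoTypeInformation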

You can use PowerShell to calculate the exact size of a specific folder in Windows (recursively, including all subfolders). This way you can quickly find out the size of the directory on disk without using third-party tools such as TreeSize or WinDirStat. In addition, PowerShell gives you more flexibility to filter or exclude files (by type, size, or date) that you need to consider when calculating folder size.

Use the following PowerShell cmdlets to calculate the size of a folder:

- Get-ChildItem (alias gci) gets a list of files (with sizes) in a directory, including nested subfolders. Previously, we showed you how to use the Get-ChildItem cmdlet to find the largest files on the disk.
- Measure-Object (alias measure) sums the sizes of all files to get the total directory size.

For example, to find the size of the D:\ISO directory in GB, run:

```powershell
(Get-ChildItem D:\ISO -Force -Recurse -ErrorAction SilentlyContinue | measure Length -Sum).Sum / 1GB
```

Parameters used:

- -Force: include hidden and system files
- -Recurse: get a list of files in subfolders
- -ErrorAction SilentlyContinue: ignore files and folders the current user is not allowed to access
- measure Length -Sum: sum the sizes of all files (the Length property)
- .Sum / 1GB: show the total size in GB

In this example, the directory size is about 37 GB (this PowerShell command ignores NTFS file system compression if it is enabled).
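-ErrorAction SilentlyContinue hides every access error, so the total may quietly exclude folders you cannot read. The sketch below still suppresses the messages but records them with the common -ErrorVariable parameter, so you can see what was skipped. The function name Get-FolderSizeWithErrors is hypothetical.

```powershell
# Hypothetical helper: folder size plus a list of the items that could not be read.
function Get-FolderSizeWithErrors {
    param([Parameter(Mandatory = $true)][string]$Path)
    # -ErrorVariable collects the non-terminating errors that SilentlyContinue hides
    $sum = (Get-ChildItem -LiteralPath $Path -File -Force -Recurse `
            -ErrorAction SilentlyContinue -ErrorVariable scanErrors |
        Measure-Object -Property Length -Sum).Sum
    [PSCustomObject]@{
        Path         = $Path
        SizeBytes    = if ($null -eq $sum) { [int64]0 } else { [int64]$sum }
        # TargetObject usually holds the path that could not be read
        SkippedPaths = @($scanErrors | ForEach-Object { $_.TargetObject })
        ErrorCount   = $scanErrors.Count
    }
}
```

Usage: Get-FolderSizeWithErrors -Path D:\ISO | Format-List shows the size together with any skipped paths.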

To round the results to two decimal places, use the command:

```powershell
"{0:N2} GB" -f ((Get-ChildItem D:\ISO -Force -Recurse -ErrorAction SilentlyContinue | measure Length -Sum).Sum / 1GB)
```

PowerShell can find the total size of all files of a particular type in a directory. For example, add the *.iso parameter to find out how much space is taken up by ISO files:

```powershell
"{0:N2} GB" -f ((Get-ChildItem D:\ISO *.iso -Force -Recurse -ErrorAction SilentlyContinue | measure Length -Sum).Sum / 1GB)
```

You can use other filters to select files to be included in the directory size calculation. For example, to see the size of files in the directory created in 2024:

```powershell
(gci -Force D:\ISO -Recurse -ErrorAction SilentlyContinue | ? { $_.CreationTime -ge [datetime]'2024-01-01' -and $_.CreationTime -lt [datetime]'2025-01-01' } | measure Length -Sum).Sum / 1GB
```
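Files can also be filtered by size. A minimal sketch that reports how many files of at least a given size the folder contains and how much space they take. Get-LargeFilesSize and -MinFileSize are hypothetical names.

```powershell
# Hypothetical helper: count and total size of files that are at least -MinFileSize bytes.
function Get-LargeFilesSize {
    param(
        [Parameter(Mandatory = $true)][string]$Path,
        [int64]$MinFileSize = 100MB    # accepts PowerShell size literals such as 500MB or 1GB
    )
    $stats = Get-ChildItem -LiteralPath $Path -File -Force -Recurse -ErrorAction SilentlyContinue |
        Where-Object { $_.Length -ge $MinFileSize } |
        Measure-Object -Property Length -Sum
    [PSCustomObject]@{
        Files     = [int]$stats.Count
        SizeBytes = [int64]$stats.Sum
    }
}
```

Usage: Get-LargeFilesSize -Path D:\ISO -MinFileSize 1GB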

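If there are symbolic or hard links in the directory, the commands above return an incorrect folder size. For example, the C:\Windows directory contains many hard links to files in the WinSxS folder (Windows Component Store), so such files may be counted several times. Use the following command to ignore hard links (it takes a while to run):

```powershell
"{0:N2} GB" -f ((gci -Force C:\Windows -Recurse -ErrorAction SilentlyContinue | Where-Object { $_.LinkType -notmatch "HardLink" } | measure Length -Sum).Sum / 1GB)
```

Note that PowerShell reports LinkType as HardLink for every name of a file that has more than one link, so this filter drops all copies of such a file, including the first one. The result is therefore a lower bound rather than an exact deduplicated size.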

Get the sizes of all top-level subfolders in the target folder and the number of files in each subfolder (in this example, the PowerShell script displays the size of all user profiles in C:\Users):

```powershell
$targetfolder = 'C:\Users'
$dataColl = @()
gci -Force $targetfolder -ErrorAction SilentlyContinue | ? { $_ -is [IO.DirectoryInfo] } | % {
    $folder = $_
    # One recursive pass over the subfolder: Count = number of files, Sum = total bytes
    $stats = gci -Recurse -Force -File -LiteralPath $folder.FullName -ErrorAction SilentlyContinue |
        Measure-Object -Property Length -Sum
    $dataColl += [PSCustomObject]@{
        Folder     = $folder.FullName
        # [math]::Round avoids a culture-dependent '{0:N3}' string round trip
        SizeGb     = [math]::Round([double]$stats.Sum / 1GB, 3)
        filesCount = [int]$stats.Count
    }
}
$dataColl | Out-GridView -Title "Subfolder sizes and number of files"
```

% is an alias for the ForEach-Object cmdlet.

The script will display the Out-GridView graphical table listing the directories, their sizes, and how many files they contain. The folders in the table can be sorted by size or number of files in the Out-GridView form. You can also export the results to a CSV (| Export-Csv folder_size.csv) or to an Excel file.
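The script above starts a separate recursive Get-ChildItem for every subfolder. As an alternative, the root can be scanned once and each file assigned to its first-level subfolder. This is a sketch of that single-pass variant; the function name Get-TopSubfolders and its -Top parameter are hypothetical, and subfolders without files do not appear in its output.

```powershell
# Hypothetical helper: size of each first-level subfolder from ONE recursive scan,
# largest first. Files directly in the root are ignored.
function Get-TopSubfolders {
    param(
        [Parameter(Mandatory = $true)][string]$Path,
        [int]$Top = 10
    )
    $root = (Resolve-Path -LiteralPath $Path -ErrorAction Stop).ProviderPath.TrimEnd('\', '/')
    Get-ChildItem -LiteralPath $root -File -Force -Recurse -ErrorAction SilentlyContinue |
        ForEach-Object {
            # e.g. "alice\Documents\x.docx" relative to the root -> first segment "alice"
            $relative = $_.FullName.Substring($root.Length + 1)
            $segments = $relative -split '[\\/]'
            if ($segments.Count -gt 1) {
                [PSCustomObject]@{ Folder = $segments[0]; Length = $_.Length }
            }
        } |
        Group-Object -Property Folder -CaseSensitive |
        ForEach-Object {
            [PSCustomObject]@{
                Folder     = Join-Path $root $_.Name
                SizeBytes  = [int64]($_.Group | Measure-Object -Property Length -Sum).Sum
                FilesCount = $_.Count
            }
        } |
        Sort-Object -Property SizeBytes -Descending |
        Select-Object -First $Top
}
```

Usage: Get-TopSubfolders -Path C:\Users -Top 5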


FOLDERSIZES SUPPORTED PLATFORMS

FolderSizes supports all 64-bit Windows operating systems, delivering world-class disk space analysis tooling across the entire Windows client and server ecosystem.

Runs on Windows 11, 10, 8, 7 (SP1) and Windows Server 2025, 2022, 2019, 2016, 2012 R2

Instructions: Download FolderSizes, then run the installer.

Installation Notes

The FolderSizes installer requires administrative privileges to properly install all components.

After installation, FolderSizes can be run with standard user permissions for most operations, though administrative rights may be needed to scan certain protected system folders.

During installation, you’ll have the option to integrate FolderSizes with Windows Explorer, enabling right-click context menu access to powerful disk space analysis features directly from your file browser.

BUILT FOR 64 BIT

FolderSizes v9.5 requires 64-bit hardware and a 64-bit Windows OS.

For 32-bit support, you can download v9.3.

Here is a PowerShell function that helps collect size statistics for subfolders.

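1. Create a PS1 file named Get-FolderSize.ps1 with the following contents:

```powershell
Function Get-FolderSize
{
    # Create the COM object once, before the first pipeline object arrives
    BEGIN { $fso = New-Object -ComObject Scripting.FileSystemObject }
    PROCESS
    {
        # $_ is the current DirectoryInfo object received from the pipeline
        $path = $_.FullName
        $folder = $fso.GetFolder($path)
        $size = $folder.Size
        [PSCustomObject]@{ 'Name' = $path; 'Size' = ($size / 1GB) }
    }
}
```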

2. Launch PowerShell and import the new PS1 file with the Import-Module cmdlet:

```powershell
Import-Module .\Get-FolderSize.ps1
```

3. Change to the parent folder you want to query with the cd command.

4. Run the following command:

```powershell
Get-ChildItem -Directory -EA 0 | Get-FolderSize | sort Size -Descending
```

You can then copy the output into an Excel spreadsheet and calculate totals or averages.
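Scripting.FileSystemObject is a Windows COM component. A variant of the same pipeline-friendly function can be built on Get-ChildItem alone, which also runs in PowerShell on Linux or macOS. A sketch only; the name Get-FolderSizeNoCom and its output columns are hypothetical.

```powershell
# Hypothetical COM-free variant of Get-FolderSize.
function Get-FolderSizeNoCom {
    [CmdletBinding()]
    param(
        # Binds the FullName property of DirectoryInfo objects coming down the pipeline
        [Parameter(Mandatory = $true, ValueFromPipelineByPropertyName = $true)]
        [Alias('FullName')]
        [string]$Path
    )
    process {
        $sum = (Get-ChildItem -LiteralPath $Path -File -Force -Recurse -ErrorAction SilentlyContinue |
            Measure-Object -Property Length -Sum).Sum
        [PSCustomObject]@{
            Name      = $Path
            SizeBytes = [int64]$sum
            SizeGB    = [math]::Round([double]$sum / 1GB, 3)
        }
    }
}
```

Usage: Get-ChildItem -Directory -EA 0 | Get-FolderSizeNoCom | Sort-Object SizeBytes -Descending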


Maximizing Storage Efficiency: Using PowerShell for Folder Size Analysis

by Team Ninja, reviewed by Stan Hunter, Technical Marketing Engineer

Last updated March 6, 2024

Key Takeaways

- The PowerShell script efficiently calculates and reports folder sizes in Windows environments.

- It is adaptable, allowing users to specify a path, folder depth, and minimum size threshold.

- The script supports a range of size units (KB, MB, GB, etc.) for defining minimum folder size.

- Ideal for IT professionals and MSPs needing a quick overview of disk space usage.

- Warns when it is not running elevated or as SYSTEM (results may then be incomplete) and silently skips folders it cannot access.

- Employs a user-friendly output, displaying folder paths and their corresponding sizes.

- Useful in scenarios like server maintenance, storage optimization, and data cleanup.

- Offers a more script-based, customizable alternative to traditional disk analysis tools.

Efficient data management is a cornerstone of IT operations, where insights into data distribution and storage play a crucial role. PowerShell, with its versatile scripting capabilities, stands as a potent tool for IT professionals. A script that can list and measure folder sizes is not just a convenience—it’s a necessity for maintaining optimal performance and storage management in various IT environments.

Background

The provided PowerShell script targets an essential need in the IT sector: understanding and managing folder sizes within a system. For IT professionals and Managed Service Providers (MSPs), this is more than a matter of simple housekeeping. In an era where data grows exponentially, keeping tabs on which folders are consuming the most space can lead to more informed decisions about resource allocation, system optimization, and data management policies. This script specifically caters to these needs by enabling a detailed analysis of folder sizes.

The Script:

```powershell
#Requires -Version 5.1

<#
.SYNOPSIS
    Get a tree list of folder sizes for a given path with folders that meet a minimum folder size.
.DESCRIPTION
    Get a tree list of folder sizes for a given path with folders that meet a minimum folder size.
    By default this looks at C:\, with a folder depth of 3, and filters out any folder under 500 MB.
.EXAMPLE
    (No Parameters)
    Gets folder sizes under C:\ for a depth of 3 folders and displays folders larger than 500 MB.
.EXAMPLE
    -Path C:\
    -Path C:\ -MinSize 1GB
    -Path C:\Users -Depth 4

    PARAMETER: -Path C:\
        Gets folder sizes under C:\.
    PARAMETER: -Path C:\ -MinSize 1GB
        Gets folder sizes under C:\, but only returns folders larger than 1 GB.
        Don't use quotes around 1GB as PowerShell won't be able to expand it to 1073741824.
    PARAMETER: -Path C:\Users -Depth 4
        Gets folder sizes under C:\Users with a depth of 4.
.OUTPUTS
    String[] or PSCustomObject[]
.NOTES
    Minimum OS Architecture Supported: Windows 10, Windows Server 2016
    Release Notes: Renamed script and added Script Variable support
    By using this script, you indicate your acceptance of the following legal terms as well as our Terms of Use at https://www.ninjaone.com/terms-of-use.
    Ownership Rights: NinjaOne owns and will continue to own all right, title, and interest in and to the script (including the copyright). NinjaOne is giving you a limited license to use the script in accordance with these legal terms.
    Use Limitation: You may only use the script for your legitimate personal or internal business purposes, and you may not share the script with another party.
    Republication Prohibition: Under no circumstances are you permitted to re-publish the script in any script library or website belonging to or under the control of any other software provider.
    Warranty Disclaimer: The script is provided “as is” and “as available”, without warranty of any kind. NinjaOne makes no promise or guarantee that the script will be free from defects or that it will meet your specific needs or expectations.
    Assumption of Risk: Your use of the script is at your own risk. You acknowledge that there are certain inherent risks in using the script, and you understand and assume each of those risks.
    Waiver and Release: You will not hold NinjaOne responsible for any adverse or unintended consequences resulting from your use of the script, and you waive any legal or equitable rights or remedies you may have against NinjaOne relating to your use of the script.
    EULA: If you are a NinjaOne customer, your use of the script is subject to the End User License Agreement applicable to you (EULA).
#>

[CmdletBinding()]
param (
    [String]$Path = "C:\",
    [int]$Depth = 3,
    $MinSize = 500MB
)

begin {
    # Converts strings such as "500MB" or "1GB" to bytes: keeps the digits, multiplies by the unit
    function Get-Size {
        param ([string]$String)
        switch -wildcard ($String) {
            '*PB' { [int64]$($String -replace '[^\d]+') * 1PB; break }
            '*TB' { [int64]$($String -replace '[^\d]+') * 1TB; break }
            '*GB' { [int64]$($String -replace '[^\d]+') * 1GB; break }
            '*MB' { [int64]$($String -replace '[^\d]+') * 1MB; break }
            '*KB' { [int64]$($String -replace '[^\d]+') * 1KB; break }
            '*B' { [int64]$($String -replace '[^\d]+') * 1; break }
            '*Bytes' { [int64]$($String -replace '[^\d]+') * 1; break }
            Default { [int64]$($String -replace '[^\d]+') * 1 }
        }
    }

    # Script variables (environment variables) override the parameters when set
    $Path = if ($env:rootPath) { Get-Item -Path $env:rootPath } else { Get-Item -Path $Path }
    if ($env:Depth) { $Depth = [System.Convert]::ToInt32($env:Depth) }
    $MinSize = if ($env:MinSize) { Get-Size $env:MinSize } else { Get-Size $MinSize }

    function Test-IsElevated {
        $id = [System.Security.Principal.WindowsIdentity]::GetCurrent()
        $p = New-Object System.Security.Principal.WindowsPrincipal($id)
        $p.IsInRole([System.Security.Principal.WindowsBuiltInRole]::Administrator)
    }

    function Test-IsSystem {
        $id = [System.Security.Principal.WindowsIdentity]::GetCurrent()
        return $id.Name -like "NT AUTHORITY*" -or $id.IsSystem
    }

    if (!(Test-IsElevated) -and !(Test-IsSystem)) {
        Write-Host "[Warning] Not running as SYSTEM account, results might be slightly inaccurate."
    }

    function Get-FriendlySize {
        param($Bytes)
        # Converts Bytes to the highest matching unit
        $Sizes = 'Bytes,KB,MB,GB,TB,PB,EB,ZB' -split ','
        for ($i = 0; ($Bytes -ge 1kb) -and ($i -lt $Sizes.Count); $i++) { $Bytes /= 1kb }
        $N = 2
        if ($i -eq 0) { $N = 0 }
        if ($Bytes) { "{0:N$($N)} {1}" -f $Bytes, $Sizes[$i] } else { "0 B" }
    }

    function Get-SizeInfo {
        param(
            [parameter(mandatory = $true, position = 0)][string]$TargetFolder,
            # defines the depth to which individual folder data is provided
            [parameter(mandatory = $true, position = 1)][int]$DepthLimit
        )
        $obj = New-Object PSObject -Property @{ Name = $targetFolder; Size = 0; Subs = @() }
        # Are we at the depth limit? Then just do a recursive Get-ChildItem
        if ($DepthLimit -eq 1) {
            $obj.Size = (Get-ChildItem $targetFolder -Recurse -Force -File -ErrorAction SilentlyContinue | Measure-Object -Sum -Property Length).Sum
            return $obj
        }
        # We are not at the depth limit, keep recursing
        $obj.Subs = foreach ($S in Get-ChildItem $targetFolder -Force -ErrorAction SilentlyContinue) {
            if ($S.PSIsContainer) {
                $tmp = Get-SizeInfo $S.FullName ($DepthLimit - 1)
                $obj.Size += $tmp.Size
                Write-Output $tmp
            }
            else {
                $obj.Size += $S.length
            }
        }
        return $obj
    }

    function Write-Results {
        param(
            [parameter(mandatory = $true, position = 0)]$Data,
            [parameter(mandatory = $true, position = 1)][int]$IndentDepth,
            # int64 so that thresholds of 2 GB and more do not overflow
            [parameter(mandatory = $true, position = 2)][int64]$MinSize
        )
        [PSCustomObject]@{
            Path     = "$((' ' * ($IndentDepth + 2)) + $Data.Name)"
            Size     = Get-FriendlySize -Bytes $Data.Size
            IsLarger = $Data.Size -ge $MinSize
        }
        foreach ($S in $Data.Subs) {
            Write-Results $S ($IndentDepth + 1) $MinSize
        }
    }

    function Get-SubFolderSize {
        [CmdletBinding()]
        param(
            [parameter(mandatory = $true, position = 0)]
            [string]$targetFolder,
            [int]$DepthLimit = 3,
            [int64]$MinSize = 500MB
        )
        if (-not (Test-Path $targetFolder)) {
            Write-Error "The target [$targetFolder] does not exist"
            exit
        }
        $Data = Get-SizeInfo $targetFolder $DepthLimit
        # returning $data will provide a useful PS object rather than plain text
        # return $Data
        # generate a human friendly listing
        Write-Results $Data 0 $MinSize
    }
}

process {
    Get-SubFolderSize -TargetFolder $Path -DepthLimit $Depth -MinSize $MinSize | Where-Object { $_.IsLarger } | Select-Object -Property Path, Size
}

end {
}
```
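The core of the script is the depth-limited recursion in Get-SizeInfo: above the depth limit it walks one level at a time, and at the limit it measures the whole branch with a single recursive Get-ChildItem. The sketch below follows the same idea but returns flat rows (Path, Depth, SizeBytes) instead of nested objects, which are easier to filter or export. It is not part of the NinjaOne script; the name Get-FolderTree is hypothetical.

```powershell
# Hypothetical sketch of the depth-limited recursion, returning flat rows.
function Get-FolderTree {
    param(
        [Parameter(Mandatory = $true)][string]$Path,
        [int]$DepthLimit = 3,
        [int]$Level = 0    # current depth, used internally by the recursion
    )
    if ($DepthLimit -le 1) {
        # At the depth limit: one recursive scan gives the total for the whole branch
        $sum = (Get-ChildItem -LiteralPath $Path -File -Force -Recurse -ErrorAction SilentlyContinue |
            Measure-Object -Property Length -Sum).Sum
        return [PSCustomObject]@{ Path = $Path; Depth = $Level; SizeBytes = [int64]$sum }
    }
    $size = [int64]0
    # Above the limit: add up the files here and recurse into each subfolder
    $children = foreach ($item in Get-ChildItem -LiteralPath $Path -Force -ErrorAction SilentlyContinue) {
        if ($item.PSIsContainer) {
            $rows = @(Get-FolderTree -Path $item.FullName -DepthLimit ($DepthLimit - 1) -Level ($Level + 1))
            $size += $rows[0].SizeBytes   # the first row is the subfolder itself
            $rows                          # pass the subfolder's rows through
        }
        else {
            $size += $item.Length
        }
    }
    # Parent row first, followed by the rows of its subfolders (depth-first)
    [PSCustomObject]@{ Path = $Path; Depth = $Level; SizeBytes = $size }
    $children
}
```

Usage: Get-FolderTree -Path C:\ -DepthLimit 2 | Where-Object SizeBytes -ge 500MB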


Detailed Breakdown

The script functions as follows:

- Parameter Initialization: It begins by defining parameters like Path, Depth, and MinSize. These parameters allow users to specify the search directory, the depth of the directory tree to analyze, and the minimum folder size to report.

- Size Conversion Function (Get-Size): This function converts different size units (KB, MB, GB, etc.) into bytes, ensuring uniformity in size measurement.

- Environment Variable Checks: If the environment variables rootPath, Depth, or MinSize are set (the script's "Script Variable support"), their values override the corresponding parameters.

- Elevation and System Account Checks: It checks whether the script runs elevated or under a system account and, if neither is the case, prints a warning that the results may be inaccurate, because some directories may not be readable.

- Folder Size Calculation (Get-SizeInfo): This recursive function traverses the folder hierarchy, accumulating the size of files and folders up to the specified depth.

- Result Formatting (Write-Results): The gathered data is then formatted into a readable structure, showing the path and the size of folders that exceed the specified minimum size.

- Execution (Get-SubFolderSize): The core function that ties all components together, executing the size calculation and result formatting.

- Output: Finally, the script outputs the data, focusing on folders larger than the minimum size set by the user.
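The script's Get-Size function is meant to strip the unit and keep only the digits, so a fractional value such as 1.5GB cannot be expressed. If you need fractional sizes, a stricter parser might look like the following sketch. ConvertTo-Bytes is a hypothetical name and is not part of the script.

```powershell
# Hypothetical parser: "500MB", "1.5 GB", "2048Bytes" or "2048" -> number of bytes.
# The number is parsed with the invariant culture, so "." is the decimal separator.
function ConvertTo-Bytes {
    param([Parameter(Mandatory = $true)][string]$Value)
    # -match is case-insensitive, so "10kb" is accepted as well
    if (-not ($Value -match '^\s*(\d+(?:\.\d+)?)\s*(PB|TB|GB|MB|KB|B|Bytes)?\s*$')) {
        throw "Cannot parse size '$Value'"
    }
    $number = [double]::Parse($Matches[1], [System.Globalization.CultureInfo]::InvariantCulture)
    $unit = if ($Matches[2]) { $Matches[2] } else { 'B' }
    $multiplier = switch ($unit) {
        'PB' { 1PB }
        'TB' { 1TB }
        'GB' { 1GB }
        'MB' { 1MB }
        'KB' { 1KB }
        default { 1 }   # 'B' or 'Bytes'
    }
    [int64]($number * $multiplier)
}
```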

Potential Use Cases

Imagine an IT administrator at a company noticing that server storage is running low. Using this script, they can quickly identify large folders, particularly those that have grown unexpectedly. This analysis could reveal redundant data, unusually large log files, or areas where archiving could free up significant space.

Comparisons

Alternative methods include using third-party tools or manual checks. Third-party tools can be more user-friendly but might not offer the same level of customization. Manual checks, while straightforward, are time-consuming and impractical for large systems.

FAQs

- Q: Can this script analyze network drives?
  A: Yes, provided the user has the necessary permissions and the drive is accessible.
- Q: Does the script require administrative privileges?
  A: Not always, but running it elevated (or as SYSTEM) lets it read more folders, so the results are more complete.
- Q: How can I modify the minimum folder size?
  A: Change the MinSize parameter (or the MinSize environment variable) to your desired threshold.

Beyond storage management, the script’s output can have implications for IT security. Large, unexpected files could be a sign of security breaches, like data dumps. Regular monitoring using such scripts can be part of a proactive security strategy.

Recommendations

- Regularly run the script for proactive storage management.

- Combine the script’s output with other system monitoring tools for comprehensive insights.

- Be cautious about system load when running this script on servers with extensive directories.

Final Thoughts

In the context of data-driven solutions like NinjaOne, PowerShell scripts like these complement broader IT management strategies. By automating and simplifying complex tasks, such as analyzing folder sizes, IT professionals can focus on more strategic initiatives, ensuring systems are not just operational, but also optimized and secure. With NinjaOne’s integration capabilities, scripts like this can be part of a more extensive toolkit for efficient IT management.
